-

Gab, the controversial social network with a far-right following, has pulled its website offline after domain provider GoDaddy gave it 24 hours to move to another service. The move comes as other companies including PayPal, Medium, Stripe, and Joyent blocked Gab over the weekend. It had emerged that Robert Bowers, who allegedly shot and killed eleven people at a Pittsburgh synagogue on Saturday, had a history of posting anti-Semitic messages on Gab.

And this is only a small beginning; in 2019 these guys will start blocking sites and services by the thousands.

-

Basically, if they're big enough (like Twitter or Facebook) then they'll be nearly untouchable, but if they're a little guy like Gab, the providers can bully them and pull the rug out from under them :-/

-

Thing is - these guys have started doing it in a coordinated way. For now they are training on something easy - far-right sites. But very soon that will change and they will move on to anything they don't like.

-

WikiLeaks shadow banned on Twitter

“These accounts are locked @wikileaks @assangedefence @wltaskforce @assangelegal and cannot be accessed,” Hrafnsson recently tweeted.

“They also seem to have been shadow banned. Should we be worried in these critical times?”

-

The Washington Post discovered that "more than half" of YouTube's top 20 search results for "RBG," the nickname for US Supreme Court Justice Ruth Bader Ginsburg, were known fake conspiracy theory videos. In fact, just one of the results came from a well-established news outlet. And if you played one of those videos, the recommendations quickly shifted to more extreme conspiracies.

The site addressed the skewed results shortly after the Post got in touch, promoting more authentic videos.

You'll be surprised by YouTube by the end of this year. We may even get special tools for companies, so they can mark which videos about their products they like and which are fake conspiracy shit about real issues and hence must be excluded from search.

-

It's just a conspiracy theory that Sony cameras overheat. Someone's authentic LP-E6 battery is stuck in their BMPCC4K? Just another crackpot spreading lies. Those Northrups are at it again, posting made-up stories with proof about losing their shots on an EOS R? Steer the viewer over to Mattias Burling instead, who has had a wonderful experience with our, I mean... Canon's camera.

-

Maybe they'll make the web so controlled and pointless that I can finally cut the cord and get a life

-

By that time cutting the cord and getting a life will be added to the list of extremist activities. :-)

-

Roku - After the InfoWars channel became available, we heard from concerned parties and have determined that the channel should be removed from our platform. Deletion from the channel store and platform has begun and will be completed shortly.

Roku did not say that InfoWars had now broken any of its rules, as most of the other platforms that have removed the channel have done. It did not clarify whether the “concerned parties” were users, or advertisers who didn’t want their brands displayed next to the InfoWars channel.

See. Big progress. Now they hear from someone, no need to reference any rules anymore.

-

@Vitaliy_Kiselev Definitely - cord cutting will probably be considered an extremist act of defiance. I've almost forgotten that Alex Jones exists at this point; deplatforming seems to be a successful tactic.

-

YouTube says it will stop recommending conspiracy videos.

YouTube now won't suggest "borderline" videos that come close to violating community guidelines or those which "misinform users in a harmful way."

Examples of the types of videos it will bury include 9/11 misinformation.

And this, guys, is a crime even under capitalist laws.

An algorithm will decide which videos won't appear in recommendations, rather than people (though humans will help train the AI).

And this is done to avoid blame.

https://youtube.googleblog.com/2019/01/continuing-our-work-to-improve.html

-

WhatsApp is trying a number of measures to fight its fake news problem. The messaging service has revealed in a white paper that it's deleting 2 million accounts per month. And in many cases, users don't need to complain. About 95 percent of the offenders are deleted after WhatsApp spots "abnormal" activity.

-

Seems like YouTube is preparing something big

AT&T has pulled all advertising from YouTube while the streaming service deals with issues regarding predatory comments being left on videos of children.

Disney, Nestlé, and Fortnite maker Epic Games have also pulled ads from YouTube this week.

Did these guys open any of the videos that are in "Trending"?

Predatory comments are really nothing compared to these piles of crap.

And ads are shown on top of these videos.

-

Facebook is considering making it harder to find anti-vaccine content in its search results and excluding organizations pushing anti-vaccine messages from groups it recommends to users after a lawmaker suggested those kinds of steps, according to a person familiar with the company's possible response.

and

"We have strict policies that govern what videos we allow ads to appear on, and videos that promote anti-vaccination content are a violation of those policies. We enforce these policies vigorously, and if we find a video that violates them, we immediately take action and remove ads," reads an emailed statement from YouTube to BuzzFeed.

and conspiracies again

A spokesman for software company Grammarly said the company also took immediate action.

"Upon learning of this, we immediately contacted YouTube to pull our ads from appearing not only on this channel but also to ensure related content that promulgates conspiracy theories is completely excluded," they said, adding "We have stringent exclusion filters in place with YouTube that we believed would exclude such channels. We’ve asked YouTube to ensure this does not happen again."

Seems to be going faster and faster.

-

First they came for the communists, and I did not speak out - because I was not a communist;

Then they came for the socialists, and I did not speak out - because I was not a socialist;

Then they came for the trade unionists, and I did not speak out - because I was not a trade unionist;

Then they came for the Jews, and I did not speak out - because I was not a Jew;

Then they came for me - and there was no one left to speak out for me.

Famous quote, and even the order will be the same once they have finished with all the "fake news" and "conspiracy theories".

Already this year we will see tens of thousands of channels banned for their content.

-

Zerohedge links were declared prohibited on Facebook.

But

"This was a mistake with our automation to detect spam and we worked to fix it yesterday." "We use a combination of human review and automation to enforce our policies around spam and in this case, our automation incorrectly blocked this link. As soon as we identified the issue, we worked quickly to fix it."

It was only a short test :-) For now.

sa7396.jpg (600 x 178, 17K)

-

WhatsApp appears to be working on a new feature to help users identify whether an image they receive is legitimate or not.

Read: these guys will recognize and store all info about the images you send.

Drop WhatsApp fully and move to Telegram, nice place to read PV.

-

New Zealand authorities have reminded citizens that they face up to 10 years in prison for "knowingly" possessing a copy of the New Zealand mosque shooting video - and up to 14 years in prison for sharing it. Corporations (such as web hosts) face an additional $200,000 ($137,000 US) fine under the same law.

And now we clearly know why it had been staged by the ruling class.

They are slowly introducing prison for owning the wrong videos. That existed already, but now it applies to anyone, not just some bad guys.

Next they will slowly shift the type of video covered to where they want it to be - any anti-capitalist and anti-elite ones.

-

Seems like the staged event was used as the reason for major drills.

Internet providers in New Zealand aren't relying solely on companies like Facebook and YouTube to get rid of the Christchurch mass shooter's video. Major ISPs in the country, including Vodafone, Spark and Vocus, are working together to block access at the DNS level to websites that don't quickly respond to video takedown requests. The move quickly cut off access to multiple sites, including 4chan, 8chan (where the shooter was a member), LiveLeak and file transfer site Mega.

Yesterday, Facebook said it removed 1.5 million videos of the attack in the first 24 hours after the shooting.

While YouTube did not say precisely how many videos it ultimately removed, the company faced a similar flood of videos after the shooting, and moderators worked through the night to take down tens of thousands of videos with the footage, chief product officer Neal Mohan told the Post.

Not a random thing. These guys intentionally advertised the video via all the main media channels and after that tested the new tech they have for preventing unnecessary info from spreading.

-

The Chairman of the House Committee on Homeland Security, Bennie Thompson, has sent letters to the CEOs of Facebook, Microsoft, Twitter and YouTube asking them to brief the committee on their responses to the video on March 27th. Thompson was concerned the footage was still "widely available" on the internet giants' platforms, and that they "must do better."

The Chairman noted that the companies formally created an organization to fight online terrorism in June 2017 and touted its success in purging ISIS and al-Qaeda content.

So, they removed the content of an organization they created, ouch.

And do not worry, by "online terrorism" they mean... you and your needs, just stated clearly and openly.

-

Australia pledged Saturday to introduce new laws that could see social media executives jailed and tech giants fined billions for failing to remove extremist material from their platforms.

The tough new legislation will be brought to parliament next week as Canberra pushes for social media companies to prevent their platforms from being "weaponised" by terrorists in the wake of the Christchurch mosque attacks.

"Big social media companies have a responsibility to take every possible action to ensure their technology products are not exploited by murderous terrorists," Prime Minister Scott Morrison said in a statement.

Morrison, who met with a number of tech firms Tuesday—including Facebook, Twitter and Google—said Australia would encourage other G20 nations to hold social media firms to account.

Attorney-General Christian Porter said the new laws would make it a criminal offence for platforms not to "expeditiously" take down "abhorrent violent material" like terror attacks, murder or rape.

Executives could face up to three years in prison for failing to do so, he added, while social media platforms—whose annual revenues can stretch into the tens of billions—would face fines of up to ten percent of their annual turnover.

And now they are creating a situation where they can say "we are forced to do this" :-)

-

Google has launched some new tools in a bid to fight misinformation about upcoming elections in Europe. A large part of that effort is focused on YouTube, where Google will launch publisher transparency labels in Europe, showing news sources which receive government or public funding. Those were unveiled in the US back in February, but had yet to arrive in the EU. "Our goal here is to equip you with more information to help you better understand the sources of news content that you choose to watch on YouTube," the company said.

YouTube will highlight sources like BBC News or FranceInfo in the Top News or Breaking News shelves in more European nations, making it easier for users to find verified news. Those features are already available in the EU in UK, France, Germany and other countries, but Google plans to bring them to other nations "in the coming weeks and months."

Pigs must consume only proper content :-)

-

Ruling class is starting to get serious about YouTube

In the first three months of 2019, Google manually reviewed more than a million suspected "terrorist videos" on YouTube, Reuters reports. Of those reviewed, it deemed 90,000 violated its terrorism policy.

The company has more than 10,000 people working on content review and they spend hundreds of millions of dollars on this.

Seems like they are starting to realize the dangers; hope it won't buy them much time.

-

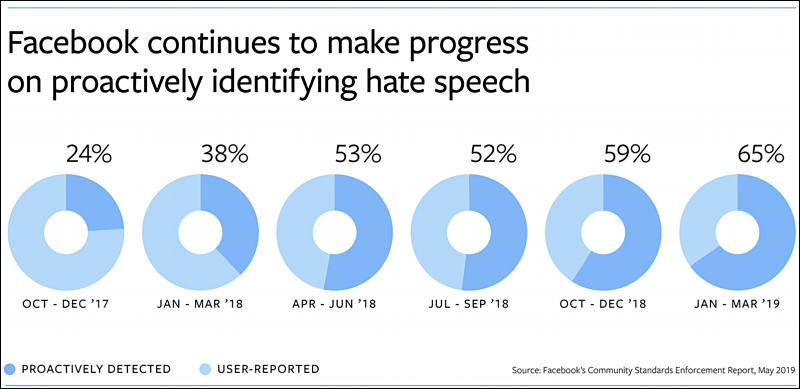

Facebook going big

Proactive Rate: Of the content we took action on, how much was detected by our systems before someone reported it to us. This metric typically reflects how effective AI is in a particular policy area.

In six of the policy areas we include in this report, we proactively detected over 95% of the content we took action on before needing someone to report it. For hate speech, we now detect 65% of the content we remove, up from 24% just over a year ago when we first shared our efforts. In the first quarter of 2019, we took down 4 million hate speech posts and we continue to invest in technology to expand our abilities to detect this content across different languages and regions.

https://newsroom.fb.com/news/2019/05/enforcing-our-community-standards-3/

-

Crossfit, the high-intensity gym program, released a statement this past Thursday slamming Facebook for the unexplained removal of its content as well as the company's lack of self-responsibility as the "de facto authority over the public square."

That group advocates low-carb, high-fat diets -- something that not everybody in the health community agrees upon.

Wrong diet - ban! Nice progress.
