-

Virtually all the video I produce is in 4K and started as 4.6K RAW. I do a fair bit of 3D modelling and therefore many renders. Some scenes were taking up to 18 days to render out and so I hoped that the iMac Pro would deliver better performance than my previous system:

iMac 5K (2017)

- 4 GHz i7

- 32GB Memory

This presents as an 8-core system (4 physical cores, 8 logical).

The new system:

Model Name: iMac Pro

- Model Identifier: iMacPro1,1

- Processor Name: Intel Xeon W

- Processor Speed: 2.3 GHz

- Number of Processors: 1

- Total Number of Cores: 18

- L2 Cache (per Core): 1 MB

- L3 Cache: 24.8 MB

- Memory: 128 GB

- Boot ROM Version: 15.16.6059.0.0,0

Presents as 18 cores and has significantly more memory at 128GB. I set out to do a couple of tests that I could measure and then sum up how the two systems feel.

Blender Render (Cycles)

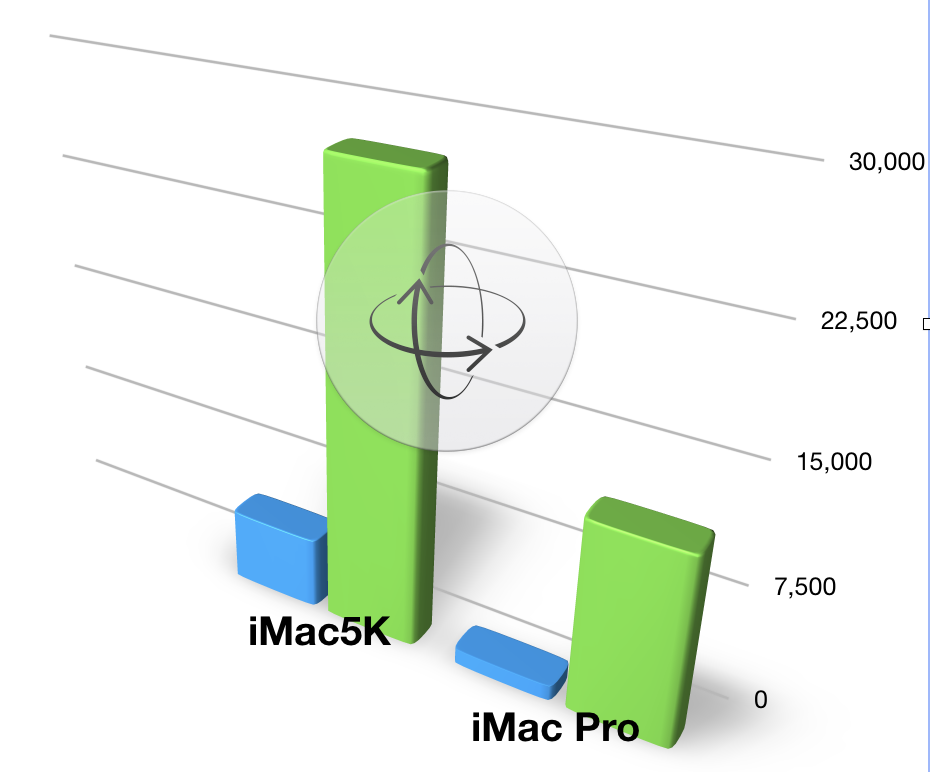

Old iMac 5K 1:16:41 H:M:S

iMac Pro 0:15:58 H:M:S

Cheetah 3D Render

Old iMac 5K 28167 S

iMac Pro 12082 S

Conclusions

So, Blender gets roughly a 5 times speed-up (about 4.8x) and Cheetah3D just over twice (about 2.3x). I checked Activity Monitor and both were using pretty much all the CPU.
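For anyone who wants to check the arithmetic, the speed-ups can be reproduced from the timings quoted above with a few lines of Python. This is only a sketch; the helper name `to_seconds` is made up for illustration.

```python
def to_seconds(stamp: str) -> int:
    """Convert an 'H:M:S' or 'M:S' string into a number of seconds."""
    total = 0
    for part in stamp.split(":"):
        total = total * 60 + int(part)
    return total


# Timings as reported in the post.
blender_old = to_seconds("1:16:41")  # iMac 5K, Blender (Cycles)
blender_new = to_seconds("15:58")    # iMac Pro, Blender (Cycles)
cheetah_old = 28167                  # iMac 5K, Cheetah3D, seconds
cheetah_new = 12082                  # iMac Pro, Cheetah3D, seconds

blender_speedup = blender_old / blender_new
cheetah_speedup = cheetah_old / cheetah_new

print(f"Blender speed-up:   {blender_speedup:.2f}x")
print(f"Cheetah3D speed-up: {cheetah_speedup:.2f}x")
```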

The installation of the iMac Pro was painful, very painful. In the background the audio and LaCie RAID drivers were disabled. The trouble is that LaCie didn't write the drivers, so it's not obvious which drivers should be enabled. I basically re-installed all the audio and RAID drivers and got to thinking that the Thunderbolt 3-to-2 adapters didn't work because the LaCie and Focusrite kit are Thunderbolt 1. Five hours of forum hunting later and all was running.

In truth I'm underwhelmed by the performance improvements, given the three-year gap between the two machines and the eye-watering £10K price tag.

What is useful though is the ability to comfortably run other programs whilst Blender/Cheetah3D/Fusion/Resolve are thrashing through renders.

Of note is that the Radeon card supports the RadeonProRender plugin for Blender, which can offload the render work to the graphics card. However, you need to be aware that materials used by the Cycles render engine don't work with ProRender, and that the tool that converts between the two silently barfs on stuff like hair. I run the app from the terminal so that I can see the debug messages; this led me to change enough materials to get the renders going.

It can even run FCPX alongside Blender whilst Blender is rendering an animation. My guess is that this is a function of memory as well as the number of available cores.

So, perhaps the real joy of ownership is that the editing/grading interface is more responsive.

In the meantime, I've been looking at the cost of online render farms, which for me will work out at $3 per frame, so that's another ouch moment.
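To put that per-frame price in context, here is a rough sketch of how it scales with shot length. Only the $3 per frame figure comes from my quote; the 24 fps frame rate and the 10-second shot are assumptions picked purely for illustration.

```python
COST_PER_FRAME_USD = 3.0  # per-frame price quoted above


def farm_cost(seconds: float, fps: float = 24.0,
              cost_per_frame: float = COST_PER_FRAME_USD) -> float:
    """Estimate render-farm cost for a shot of the given length."""
    frames = round(seconds * fps)
    return frames * cost_per_frame


# Hypothetical 10-second shot at an assumed 24 fps.
print(f"${farm_cost(10):.2f}")
```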

-

Shouldn't the 18-core Xeon-W in the iMac Pro present as 36 logical processors to the OS and not 18, similar to how the 4-core i7 presented as 8?
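A quick way to check what the OS actually reports, sketched in Python. `os.cpu_count()` returns the number of logical processors; the `sysctl -n hw.physicalcpu` call is macOS-specific, so the helper below (a name I made up) simply returns `None` on other systems.

```python
import os
import subprocess


def logical_cpus() -> int:
    """Number of logical processors the OS exposes."""
    return os.cpu_count() or 1


def physical_cpus_macos():
    """Physical core count via macOS sysctl, or None if unavailable."""
    try:
        result = subprocess.run(
            ["sysctl", "-n", "hw.physicalcpu"],
            capture_output=True, text=True, check=True,
        )
        return int(result.stdout.strip())
    except (OSError, subprocess.CalledProcessError, ValueError):
        return None


print("logical:", logical_cpus())
print("physical (macOS only):", physical_cpus_macos())
```

If Hyper-Threading is active, the logical count should be twice the physical count.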

https://cgcookie.com/tutorial/setting-up-a-renderfarm

It looks like distributed rendering is an option with Blender and, if space isn't at a premium for you, it would probably make more sense to return the iMac Pro and invest in a handful of systems with consumer-grade i5/i7 chips. You could put together 3 systems, each with an i7-8700K, 32 GB of RAM, a small boot SSD, and a 1060 GPU for around $1,000 each (more like $800 without the GPU if you can't use it). You'd then have 18 cores at 3.7 GHz, 96 GB of RAM, and 3 pretty good GPUs in addition to your existing 4 GHz iMac, which you could continue using as your daily driver. I can also speak from experience with the 8700K and say that with a decent Noctua fan it will turbo at 4.2 GHz pretty much continuously, even without overclocking or water cooling.
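One simple way to spread an animation over several boxes is to give each machine its own frame range and run Blender headless on it. The sketch below is not necessarily what the linked tutorial does; the file name `scene.blend`, the 250-frame range and the helper names are placeholders. The flags are Blender's standard command-line options (`-b` background, `-s`/`-e` start and end frame, `-a` render animation).

```python
def split_frames(start: int, end: int, machines: int):
    """Split the inclusive range [start, end] into contiguous chunks."""
    total = end - start + 1
    base, extra = divmod(total, machines)
    chunks = []
    first = start
    for i in range(machines):
        # The first `extra` machines take one additional frame each.
        size = base + (1 if i < extra else 0)
        if size == 0:
            continue
        chunks.append((first, first + size - 1))
        first += size
    return chunks


def blender_command(blend_file: str, first: int, last: int):
    # -s and -e must precede -a: Blender processes arguments in order.
    return ["blender", "-b", blend_file,
            "-s", str(first), "-e", str(last), "-a"]


# Hypothetical 250-frame animation split across 3 render boxes.
for first, last in split_frames(1, 250, 3):
    print(" ".join(blender_command("scene.blend", first, last)))
```

Each printed command would be run on a different machine, with the output directory pointing at shared storage.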

If space is a little tighter, you could consider a cluster of Skull Canyon NUCs (or Hades Canyon if you use a GPU plugin). For about $800, you can get a Skull Canyon with 16-32 GB of RAM. It has an i7-6770HQ (4 cores, 2.6 GHz). You'd get 5 of them for $4,000. It only has Iris graphics for the GPU, though. If you want a GPU, go for Hades Canyon: you're closer to $1,200 per unit, but you also go up to an i7-8809G, which is 4 cores at 3.1 GHz, plus a Vega M.

Any of those options ends up with more raw horsepower than the iMac 5k and between 1/4 and 1/2 the cost. The main use case for something like a big many-core Xeon-W (workstation/server processor) is for heavy massively-parallel tasks that can't be split among multiple machines. If your workload can run across multiple systems, it's almost always cheaper overall to go with much cheaper desktop parts.

-

Online rendering at 3 dollars a frame? Wow. Did you by any chance do the calculations with Microsoft's Azure Batch? https://github.com/Azure/azure-batch-rendering/tree/master/plugins/blender

-

Hi, would you mind running the official Blender benchmark? https://opendata.blender.org/

Your CPU should be quite good for rendering, but I use Blender professionally and, in my experience, the simplest and most cost-effective way of increasing its performance is a multi-GPU setup (as long as your scenes fit in your GPU memory). It lets you scale performance by adding more cards and, compared to a personal render farm, it increases your productivity because you can see your viewport rendered in almost real time. It also reduces complexity and probably power consumption. This setup fits Resolve's requirements quite well too.

-

@Pedro_ES --- thanks for that link -- and yes, I will have a go when the two huge renders finish!

@eatstoomuchjam --- you are correct, on both counts!

@robertGL -- thanks for that link.

-

As an update, I've managed to get Cycles to use the GPU. Renders that took 20 minutes are now down to 5 minutes, so that's a massive improvement over the older system.

Today I'm trying to get a crypto rig with 5 x 1070 Ti cards to run Blender on Ubuntu. Will report back.
