-

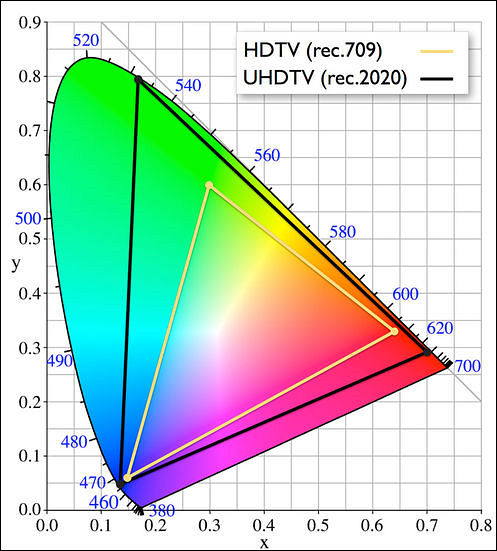

Expanding the color gamut of UHD was a hot topic. Much has already been said about the gamut known as Rec.2020, which encompasses a significantly wider range of colors than HDTV's Rec.709 and even the digital-cinema P3 gamut. (Actually, color gamut is only one part of Rec.2020, which is more formally known as ITU-R Recommendation BT.2020 and also includes parameters such as display resolution, frame rate, color bit depth, and color subsampling.)
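To put "significantly wider" in numbers, here is a rough sketch that compares the gamut triangles in CIE 1931 xy chromaticity space using the standard primaries for Rec.709, DCI-P3, and Rec.2020. Keep in mind that xy is not perceptually uniform, so triangle area is only a crude size comparison; the helper names are made up for illustration.

```python
# Rough comparison of gamut sizes as triangle areas in CIE 1931 xy space.
# Note: xy is not perceptually uniform, so this is only a crude size measure.

PRIMARIES = {
    # (x, y) chromaticities of the red, green, and blue primaries
    "Rec.709": [(0.640, 0.330), (0.300, 0.600), (0.150, 0.060)],
    "DCI-P3": [(0.680, 0.320), (0.265, 0.690), (0.150, 0.060)],
    "Rec.2020": [(0.708, 0.292), (0.170, 0.797), (0.131, 0.046)],
}


def triangle_area(points):
    """Shoelace formula for the area of a triangle given three (x, y) points."""
    (x1, y1), (x2, y2), (x3, y3) = points
    return abs(x1 * (y2 - y3) + x2 * (y3 - y1) + x3 * (y1 - y2)) / 2


def relative_areas(reference="Rec.709"):
    """Area of each gamut triangle divided by the reference gamut's area."""
    ref = triangle_area(PRIMARIES[reference])
    return {name: triangle_area(p) / ref for name, p in PRIMARIES.items()}


if __name__ == "__main__":
    for name, ratio in relative_areas().items():
        print(f"{name}: {ratio:.2f}x the Rec.709 triangle")
```

By this measure, P3 comes out at roughly 1.36x and Rec.2020 at roughly 1.89x the area of Rec.709.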

Some presenters advocated going much farther by using the XYZ color gamut, which extends well beyond the entire range of colors visible to the human eye. By using XYZ, the system would be entirely future-proof, accommodating any display technology that might be developed without having to create a new system all over again.

Frame rate was also debated. Of course, higher frame rates result in sharper motion detail, especially if the camera's shutter aperture—the fraction of the entire frame duration that the shutter is open—is low. (The longer the shutter is open during each frame, the blurrier moving objects appear.) However, a low shutter aperture also increases visible judder, a stuttering in what should be smooth motion. Also, higher frame rates look less like film and more like video, which many cinephiles object to. Still, many presenters said, in effect, "Get over it, this is the future we're talking about!"

Richard Salmon of the BBC brought some demo material comparing standard and high video frame rates—50 vs. 100 frames per second for Europe and 60 vs. 120 fps for the US. In the European clips, the 50 fps material was shot with a 50% shutter aperture, while the 100 fps footage was shot with a 33% aperture; in the American clips, both 60 and 120 fps were shot with a 50% aperture. In all but one case, the objects in motion were much sharper at the higher frame rate, and I did not see any judder. The only exception was a side shot of a woman juggling three bowling pins, and in that case, there was no improvement in the motion sharpness because, we were told, the human visual system cannot resolve rotating motion very well.
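The sharpness difference follows from simple arithmetic: the shutter is open for (aperture ÷ frame rate) seconds per frame, and a moving object smears across however far it travels in that time. The sketch below applies this to the four clip configurations described above. It assumes "33%" means a 1/3 aperture, and the object speed is a hypothetical value chosen only for illustration.

```python
# Per-frame exposure time and motion-blur length for the demo configurations.
# Assumptions: "33%" means a 1/3 shutter aperture; the object speed is hypothetical.

CLIPS = {
    "EU 50 fps": (50, 0.5),
    "EU 100 fps": (100, 1 / 3),
    "US 60 fps": (60, 0.5),
    "US 120 fps": (120, 0.5),
}

SPEED_PX_PER_S = 1920  # hypothetical: object crosses a 1920-px-wide frame in 1 s


def exposure_ms(fps, aperture):
    """Time the shutter is open during one frame, in milliseconds."""
    return 1000 * aperture / fps


def blur_px(fps, aperture, speed=SPEED_PX_PER_S):
    """Distance the object moves while the shutter is open (smear length)."""
    return speed * aperture / fps


if __name__ == "__main__":
    for name, (fps, aperture) in CLIPS.items():
        print(f"{name}: {exposure_ms(fps, aperture):.2f} ms open, "
              f"~{blur_px(fps, aperture):.1f} px of blur")
```

Under these assumptions the European 100 fps clips have one third of the blur of the 50 fps clips (about 3.33 ms vs 10 ms of exposure), while the American 120 fps clips have half the blur of the 60 fps clips, which is consistent with the higher-rate material looking sharper.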

HDMI 2.0 was mentioned by Peter Putman, a well-known industry analyst and journalist whose presentation focused on the consumer-display side of the equation. According to his calculations based on using the RGB color space, HDMI 2.0 with 18 Gbps of available bandwidth can convey UHD at 60 fps with 8-bit color but not 10-bit, while DisplayPort 1.2 with 21.6 Gbps of bandwidth can handle 2160p/60 with 10-bit color using RGB coding.
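A back-of-the-envelope version of this calculation is sketched below. It assumes both links lose 20% of their raw rate to 8b/10b coding, that HDMI carries the CTA-861 2160p60 raster (4400 x 2250 total pixels including blanking), and that DisplayPort uses a reduced-blanking raster approximated here as 4000 x 2222. These rasters are assumptions for illustration, not necessarily the ones Putman used.

```python
# Rough check of uncompressed 2160p60 RGB against HDMI 2.0 and DisplayPort 1.2.
# Assumptions: 8b/10b coding on both links (80% of raw rate is payload);
# HDMI raster = CTA-861 2160p60 (4400 x 2250 total pixels);
# DP raster approximates CVT reduced blanking (4000 x 2222 total pixels).

HDMI20_GBPS = 18.0 * 0.8   # about 14.4 Gbps of payload
DP12_GBPS = 21.6 * 0.8     # about 17.28 Gbps of payload


def rgb_rate_gbps(h_total, v_total, fps, bits_per_component):
    """Bit rate of an RGB signal (3 components per pixel) including blanking."""
    pixel_clock = h_total * v_total * fps
    return pixel_clock * 3 * bits_per_component / 1e9


if __name__ == "__main__":
    for bits in (8, 10):
        hdmi = rgb_rate_gbps(4400, 2250, 60, bits)
        dp = rgb_rate_gbps(4000, 2222, 60, bits)
        print(f"{bits}-bit: HDMI 2.0 needs {hdmi:.2f} of {HDMI20_GBPS:.2f} Gbps "
              f"({'fits' if hdmi <= HDMI20_GBPS else 'too much'}); "
              f"DP 1.2 needs {dp:.2f} of {DP12_GBPS:.2f} Gbps "
              f"({'fits' if dp <= DP12_GBPS else 'too much'})")
```

With these assumptions, 8-bit RGB needs about 14.26 Gbps and just squeezes into HDMI 2.0, while 10-bit needs about 17.82 Gbps and does not. On DisplayPort, 10-bit fits at about 16.0 Gbps only because of the reduced blanking; with the full CTA raster it would need the same 17.82 Gbps, slightly more than DP 1.2's payload.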

Most of the presenters advocated for UHD to adopt the entire Rec.2020 suite of parameters, including a resolution of 3840x2160, the specified color gamut (if not XYZ), frame rates up to 120 fps, at least 10-bit color (preferably 12-bit), and 4:2:2 subsampling (if not 4:4:4). However, HDMI 2.0 at 18 Gbps can't accommodate all these upgrades, so we face a dilemma—increase HDMI 2.0's data rate, abandon HDMI for DisplayPort, or accept lower standards for UHD.
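The dilemma is easy to quantify with a lower bound. The sketch below counts only the visible pixels (blanking would add more). If even that figure exceeds HDMI 2.0's roughly 14.4 Gbps of payload, the format cannot be carried uncompressed. Chroma subsampling enters as the average number of samples per pixel: 3 for 4:4:4, 2 for 4:2:2, and 1.5 for 4:2:0. The function names are illustrative.

```python
# Lower-bound payload for the "full Rec.2020" wish list: active pixels only.
# Blanking intervals would add more, so real link requirements are higher.

SAMPLES_PER_PIXEL = {"4:4:4": 3.0, "4:2:2": 2.0, "4:2:0": 1.5}

HDMI20_PAYLOAD_GBPS = 14.4  # 18 Gbps raw minus 8b/10b coding overhead


def active_rate_gbps(width, height, fps, bit_depth, subsampling):
    """Uncompressed bit rate of the visible picture only, in Gbps."""
    bits_per_pixel = bit_depth * SAMPLES_PER_PIXEL[subsampling]
    return width * height * fps * bits_per_pixel / 1e9


if __name__ == "__main__":
    for depth, sub in ((10, "4:2:2"), (12, "4:2:2"), (12, "4:4:4")):
        rate = active_rate_gbps(3840, 2160, 120, depth, sub)
        verdict = "fits" if rate <= HDMI20_PAYLOAD_GBPS else "exceeds"
        print(f"2160p120 {depth}-bit {sub}: {rate:.1f} Gbps ({verdict} HDMI 2.0)")
```

Even the mildest of these combinations (10-bit 4:2:2 at 120 fps) needs about 19.9 Gbps before blanking. The 12-bit options need about 23.9 Gbps (4:2:2) and 35.8 Gbps (4:4:4).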

Read the rest at http://www.avsforum.com/t/1496765/smpte-2013-uhd-symposium

-

This is what happens when you have too many cooks (a.k.a. crooks) in the kitchen.

The same thing happened with the much-delayed DVD rollout over copy-protection concerns. Then, almost instantly, people saw how good their EXISTING TV sets really looked with decent source material, and they didn't need to upgrade their sets...

It's already been a year since Sony and other manufacturers released the first 4K 2160p consumer playback devices, yet "experts" on standards committees continue to navel-gaze about 10-bit 50-60 fps when almost every "critic" hated the look of the 48 fps Hobbit!

We've already seen Japan Display announce 12" UHD screens for tablets and laptops, we have 50" 4K sets under $1000 (thanks only to Chinese manufacturers), and 3200x1800 13" laptop screens are already out.

Who really doesn't want consumers to have affordable 10-bit color and 2160p TV sets? GPU manufacturers and TV manufacturers who want to squeeze every last dime out of people by making them buy a workstation-class card or an overpriced 65-85" set, just to name a few.

Right now? 10-bit 4:2:2 at 24, 25, and 30 fps could be an ENORMOUS IMPROVEMENT for the next 6 years. It could carry legacy and current/future material over the existing infrastructure to 1080p TV sets (Rec. 2020 needs to be rewritten to accommodate 1080p) and to 2160p TV sets over HDMI 1.4 and HDMI 2.0, until 8K UHDTV (4320p) at 120 fps takes over in 2020 and obsoletes all the existing equipment anyway.
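Whether HDMI 1.4 can really carry 2160p at these frame rates comes down to the pixel clock. The sketch below assumes the CTA-861 2160p raster timings (total pixels including blanking) and HDMI 1.4's 340 MHz TMDS clock ceiling. It also assumes that YCbCr 4:2:2 at up to 12 bits per component travels in the same 24-bit-per-pixel container as 8-bit RGB, so no deep-color clock multiplier applies.

```python
# Does 2160p at 24/25/30 fps fit HDMI 1.4's TMDS clock limit?
# Assumptions: CTA-861 2160p raster timings; 4:2:2 (up to 12-bit) is carried in
# the 24-bit-per-pixel container, so the TMDS clock equals the pixel clock.

HDMI14_MAX_TMDS_MHZ = 340

# fps -> (total pixels per line, total lines), blanking included
RASTERS_2160P = {24: (5500, 2250), 25: (5280, 2250), 30: (4400, 2250)}


def pixel_clock_mhz(h_total, v_total, fps):
    """Pixel clock in MHz for a raster refreshed fps times per second."""
    return h_total * v_total * fps / 1e6


if __name__ == "__main__":
    for fps, (h, v) in RASTERS_2160P.items():
        clk = pixel_clock_mhz(h, v, fps)
        ok = clk <= HDMI14_MAX_TMDS_MHZ
        print(f"2160p{fps} 10-bit 4:2:2: {clk:.0f} MHz -> {'fits' if ok else 'too fast'}")
```

All three rasters work out to 297 MHz, under the 340 MHz ceiling. That supports the point that 10-bit 4:2:2 at cinema and broadcast frame rates does not strictly require HDMI 2.0.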

Not right now, but in the future? 60 fps or more at 10-bit, 3D and 12-bit, something better than HEVC, new connectors and wireless, etc... I'd say leave those features for manufacturers to differentiate themselves with until the next standard around 2020. PC gaming, sports, VR, and computer-based content creation are where there are likely to be many iterations of what's acceptable over the next 6 years, so why waste any breath on it now?

Compared to the horrific 8-bit 4:2:0 garbage and YouTube-compressed material, often nowhere near 1080p, that's broadcast everywhere around the globe, these "experts" need to spend more time actually creating and selling something revolutionary instead of criticizing today's readily available tech, which everyone could benefit from for the rest of the decade...

just my 2 cents, typed on a CRT computer monitor...

-

> just my 2 cents, typed on a CRT computer monitor...

LOL.

In the modern world, standards are made mostly by corporations, not governments.

Right now they know that 10- or 12-bit and 4:2:2 will cause big problems for satellite transmission.

Wide color gamuts will cause problems for both set manufacturers and camera makers. Plus, the wider the color gamut, the higher the set's power consumption (I mean LCD panels here).
