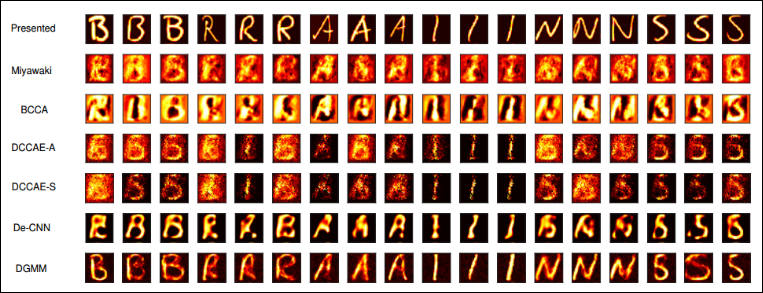

Decoding human brain activity via functional magnetic resonance imaging (fMRI) has gained increasing attention in recent years. While encouraging results have been reported in brain-state classification tasks, reconstructing the details of human visual experience remains difficult. Two main challenges hinder the development of effective models: the perplexing fMRI measurement noise, and the high dimensionality of the data combined with a limited number of instances. Existing methods generally suffer from one or both of these issues and yield unsatisfactory results. In this paper, we tackle this problem by casting the reconstruction of the visual stimulus as Bayesian inference of a missing view in a multiview latent variable model. Sharing a common latent representation, our joint generative model of external stimulus and brain response is not only "deep" in extracting nonlinear features from visual images, but also powerful in capturing correlations among voxel activities of fMRI recordings. The nonlinearity and deep structure endow our model with strong representation ability, while the correlations of voxel activities are critical for suppressing noise and improving prediction. We devise an efficient variational Bayesian method to infer the latent variables and the model parameters. To further improve reconstruction accuracy, the latent representations of test instances are constrained to be close to those of their neighbors in the training set via posterior regularization. Experiments on three fMRI recording datasets demonstrate that our approach can more accurately reconstruct visual stimuli.
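To make the "missing view" idea concrete, here is a minimal sketch of my own, not the paper's model. It swaps the deep nonlinear generative model for a plain linear-Gaussian one and leaves out the voxel-correlation structure and the posterior regularization entirely. The names `B`, `W`, `noise_var`, `infer_latent` and `reconstruct_missing_view` are made up for illustration, and the data is synthetic. The assumption is a shared latent z ~ N(0, I), an fMRI view y = B z + noise, and an image view x = W z; at test time only y is observed, so we infer z from y and decode x.

```python
import numpy as np

def infer_latent(y, B, noise_var):
    """Posterior mean of z given y, assuming z ~ N(0, I) and
    y | z ~ N(B z, noise_var * I). Linear-Gaussian stand-in only."""
    k = B.shape[1]
    # Posterior precision: prior precision (identity) plus likelihood term.
    precision = np.eye(k) + B.T @ B / noise_var
    return np.linalg.solve(precision, B.T @ y / noise_var)

def reconstruct_missing_view(y, B, W, noise_var):
    """Predict the unobserved image view from the observed fMRI view."""
    return W @ infer_latent(y, B, noise_var)

# Synthetic toy data (hypothetical sizes, not from any fMRI dataset).
rng = np.random.default_rng(0)
k, n_voxels, n_pixels = 3, 50, 20
B = rng.normal(size=(n_voxels, k))   # latent -> voxel loadings
W = rng.normal(size=(n_pixels, k))   # latent -> pixel loadings
noise_var = 0.01

z_true = rng.normal(size=k)
y = B @ z_true + rng.normal(scale=np.sqrt(noise_var), size=n_voxels)
x_true = W @ z_true

x_hat = reconstruct_missing_view(y, B, W, noise_var)
print("max abs reconstruction error:", np.max(np.abs(x_hat - x_true)))
```

In the paper the loadings would be learned with variational Bayes rather than given, and the image side would be a deep network, so treat this only as the skeleton of "infer the shared latent from one view, decode the other".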

sample132.jpg (763 x 293, 91K)
