
@willifan: I can imagine that ffmpeg makes a false assumption about the usage of 16-235 values in the input, as it seems to be a common thing for yuv420 media files.

But if you use "in_range=full:out_range=full", that should be fine with material recorded in the 0-255 value range. I don't know whether ProRes in any way expects levels not to use the full scale. You could try "in_range=full:out_range=mpeg" and see if that helps.
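To illustrate what a wrong range assumption does: if footage that really uses 0-255 is treated as 16-235 and expanded to full range, everything at or below 16 and at or above 235 gets flattened. This is a simplified model (plain rounding and clamping, not swscale's exact arithmetic):

```python
# Simplified model: full-range 8-bit luma wrongly treated as limited range
# (16-235) and expanded to 0-255, with clamping at both ends.

def limited_to_full(y: int) -> int:
    """Expand a limited-range (16-235) luma code to full range (0-255), clamped."""
    v = round((y - 16) * 255 / 219)
    return max(0, min(255, v))

full_range_input = list(range(256))  # codes a full-range camera may record

# How many distinct input codes end up as pure black or pure white.
crushed_blacks = sum(1 for y in full_range_input if limited_to_full(y) == 0)
clipped_whites = sum(1 for y in full_range_input if limited_to_full(y) == 255)
print(crushed_blacks, clipped_whites)
```

In this model 17 shadow codes (0-16) collapse to black and 21 highlight codes (235-255) collapse to white, which is why telling the scaler the correct in_range matters.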

On the timecode issue: Can you tell us what ffmpeg (or ffprobe) reports about that "data stream"? Also, I see that you left "-map 0" out of your scripts above, but it might be that ffmpeg does not map data streams into the output by default. Please try adding "-map 0" before the output file name.

If the ffmpeg output shows which stream holds those timecodes, you could also use an explicit mapping, like "-map 0:2" to map the third stream of the first input file into the output...
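Finding that stream can be scripted. A minimal sketch, assuming you feed it the output of `ffprobe -v error -show_streams -of json input.MOV`; the helper names and the sample JSON (trimmed to the fields used) are hypothetical:

```python
import json

def find_timecode_streams(ffprobe_json: str) -> list[int]:
    """Return indices of data streams tagged 'tmcd' (QuickTime timecode track)."""
    streams = json.loads(ffprobe_json).get("streams", [])
    return [
        s["index"]
        for s in streams
        if s.get("codec_type") == "data" and s.get("codec_tag_string") == "tmcd"
    ]

def map_args(input_index: int, stream_indices: list[int]) -> list[str]:
    """Build explicit '-map input:stream' arguments for an ffmpeg command line."""
    args: list[str] = []
    for idx in stream_indices:
        args += ["-map", f"{input_index}:{idx}"]
    return args

# Hypothetical ffprobe output, reduced to the fields the helpers look at.
sample = """
{"streams": [
  {"index": 0, "codec_type": "video", "codec_tag_string": "avc1"},
  {"index": 1, "codec_type": "audio", "codec_tag_string": "twos"},
  {"index": 2, "codec_type": "data", "codec_tag_string": "tmcd",
   "tags": {"timecode": "14:27:44:16"}}
]}
"""
print(map_args(0, find_timecode_streams(sample)))  # ['-map', '0:2']
```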

(And btw.: I do not have an original GH4 .MTS file to test with. Panasonic missed their chance to sell me one before my last field trip, so now I'm waiting until more than one dealer has the GH4 in stock, which is usually when prices fall.)

-

"I can imagine that ffmpeg makes a false assumption about the usage of 16-235 values in the input, as it seems to be a common thing for yuv420 media files."

Both Canon and Nikon DSLRs record video in full range (0-255). And according to After Effects, the GH3 and earlier Lumix cameras use full range as well. Do you know if ffmpeg reads the Full Range Flag in the file's metadata?

-

@LPowell: Yes, ffmpeg does read the "full range flag", at least since this change from January 2010.

But if you search the web, you'll find plenty of reports from people complaining that one input file or another conveys wrong information in this flag, especially files from digital cameras. A very comprehensive description of the issue, including experiments with NLE software, can be found here.
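You can check what the flag says before choosing scale's in_range. ffprobe reports it as color_range, with "pc" meaning full range and "tv" meaning limited range. A small sketch, assuming the JSON comes from `ffprobe -v error -select_streams v:0 -show_entries stream=color_range -of json input.MOV`; the helper name and the "auto" fallback are my own choices. Given the reports above, treat the result as a hint you may need to override:

```python
import json

# ffprobe's color_range values mapped to the names used with scale's in_range.
RANGE_TO_SCALE_OPTION = {"pc": "full", "tv": "mpeg"}

def scale_in_range(ffprobe_json: str, default: str = "auto") -> str:
    """Pick an in_range value from ffprobe's color_range, or fall back to default."""
    streams = json.loads(ffprobe_json).get("streams", [])
    if not streams:
        return default
    return RANGE_TO_SCALE_OPTION.get(streams[0].get("color_range", ""), default)

print(scale_in_range('{"streams": [{"color_range": "pc"}]}'))  # full
print(scale_in_range('{"streams": [{"color_range": "tv"}]}'))  # mpeg
print(scale_in_range('{"streams": [{}]}'))                     # auto
```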

-

@karl: about the timecode issue, I tried -map 0 and also the explicit mapping -map 0:2. This works ONLY if I don't use -filter_complex. With -filter_complex I get the error: "data stream encoding not supported yet (only streamcopy)". I think there is some bug in ffmpeg when using -filter_complex together with data stream copy (the audio stream is copied correctly).

As for full range, I will continue testing. With the script I posted a few posts ago I get perfect results (color and luminance), but I noticed that in the output file, values above 235 (with the 16-235 setting) are clipped. I still need to fix this, for both the 0-255 and the 16-235 setting.

Besides, the problem with the 0-255 setting arises only when using "-filter_complex". In normal use, ffmpeg reads the flag correctly and the conversion is perfect; I ran into all these issues only with "-filter_complex". I uploaded a short GH4 clip (16-235 setting) to my shared Google Drive folder, please use it for testing: https://drive.google.com/file/d/0B6ZaEMvcQLRxUjZ6cDcwWFhpN1k/edit?usp=sharing

-

@karl - From the linked article:

"I’d hazard a guess that even 32bit precision RGB processing would still start from what the codec provides and if the first thing the codec does after decompression is squeeze luma the damage is already done."

Sounds like the author doesn't understand the nature of 32-bit floating point RGB color space. The first thing an H.264 decoder does is decompress the file into 8-bit YUV format, whose luma scale is always 16-235. The next thing done is to convert the YUV components into RGB color space, scaled according to how the Full Range Flag is set. When this is done in 32-bit floating point mode, there is more than enough precision available to avoid perceptible image degradation, regardless of whether the data is scaled as Full Range or Studio Swing.
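Both sides of this argument can be checked with a little arithmetic on 8-bit luma. A sketch under simple assumptions (round-to-nearest, no dithering; function names are illustrative): squeezing 256 full-range codes into 8-bit 16-235 integers can only keep 220 distinct levels, while converting the 220 limited-range codes to floating point keeps every one of them distinct.

```python
# Where precision is actually lost: the integer squeeze, not the float conversion.

def squeeze_full_to_limited_8bit(y: int) -> int:
    """Integer 8-bit squeeze of a full-range code (0-255) into 16-235."""
    return 16 + round(y * 219 / 255)

def limited_to_float(y: int) -> float:
    """Normalize a limited-range 8-bit code to 0.0-1.0 in floating point."""
    return (y - 16) / 219

# 256 full-range input levels, squeezed into 8-bit limited range.
squeezed_levels = {squeeze_full_to_limited_8bit(y) for y in range(256)}

# The 220 limited-range codes, converted to float.
float_levels = {limited_to_float(y) for y in range(16, 236)}

print(len(squeezed_levels), len(float_levels))  # 220 220
```

So if a decoder squeezes full-range luma into 8-bit 16-235 integers, 36 of the 256 levels are merged before any 32-bit processing starts; if the 8-bit codes are handed over as they are and scaled in floating point, no further levels are lost.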

-

@willifan: I downloaded your sample and converted it to 2k.

The timecode data track is just copied with "-c:d copy -map 0", and when looking at the output file with "ffprobe output.mov" it is there again:

Metadata:
  major_brand : qt
  minor_version : 512
  compatible_brands: qt
  encoder : Lavf55.33.100
Duration: 00:57:20.64, start: 0.000000, bitrate: 570 kb/s
  Stream #0:0(eng): Video: prores (ap4h / 0x68347061), yuv444p10le, 1920x1080, 290448 kb/s, 25 fps, 25 tbr, 12800 tbn, 12800 tbc (default)
  Metadata:
    handler_name : DataHandler
    timecode : 14:27:44:16
  Stream #0:1(eng): Audio: pcm_s16be (twos / 0x736F7774), 48000 Hz, stereo, s16, 1536 kb/s (default)
  Metadata:
    handler_name : DataHandler
  Stream #0:2(eng): Data: none (tmcd / 0x64636D74), 0 kb/s (default)
  Metadata:
    handler_name : DataHandler
    timecode : 14:27:44:16

I did notice two things, though:

Your input file also seems to have a timecode stored in the PCM audio track, which is not carried over to the audio track of the output file. I did some experiments with "-timecode ...", but it seems that ffmpeg never writes timecodes into audio tracks, only into video and data tracks in .mov containers. Maybe you can ask on the ffmpeg-users mailing list whether somebody knows the particularities of timecodes in audio tracks better than I do.

Another thing I noticed is that the input file contains a few timestamps slightly below zero (-0.120 s), but I would not expect this to make any relevant difference.

-

@karl: you are right, using "-c:d copy -map 0" it works. ;)

Really sorry, I tried a lot of combinations but not this one... I think the timecode in the audio track is not important. The ProRes file now works in Premiere and I can see the timecode.

Now I want to fix the clipping above 235. You can see what I mean by comparing the original with the converted file on a video waveform monitor: in my example there are some "spikes" above 235 (super-white) that are clipped in the converted file. The difference is negligible anyway.
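One way to picture those spikes: in a 16-235 source, codes 236-254 are super-white headroom. A plain 8-to-10-bit shift keeps them distinct above the nominal 10-bit white level of 940 (235 x 4); clamping to nominal white anywhere in the chain flattens them. This is only an illustrative model with hypothetical sample values, not a statement about which step in ffmpeg does the clamping:

```python
# Illustrative model: super-white codes from a 16-235 source, promoted to 10 bits.

NOMINAL_WHITE_10BIT = 235 << 2  # 940

def to_10bit_limited(y8: int, clamp_nominal: bool) -> int:
    """Shift an 8-bit limited-range code to 10 bits (x4), optionally
    clamping to the nominal 10-bit white level."""
    y10 = y8 << 2
    return min(y10, NOMINAL_WHITE_10BIT) if clamp_nominal else y10

spikes = [236, 240, 250, 254]  # hypothetical super-white samples
kept = [to_10bit_limited(y, clamp_nominal=False) for y in spikes]
clipped = [to_10bit_limited(y, clamp_nominal=True) for y in spikes]
print(kept)     # [944, 960, 1000, 1016]
print(clipped)  # [940, 940, 940, 940]
```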

Many thanks

-

What about using ffmbc? I read over there that it does a better job of handling inputs/outputs correctly and of handling these conversions.

-

@heradicattor: The last ffmbc release is from March 2013, and I do not see any activity in its source repository. I would be surprised if anything really useful that was once only in ffmbc hadn't been backported into the upstream ffmpeg project.

-

I tried a different approach, an "evolution" of karl's first script. Instead of scaling the u and v color components up to 4K and then rescaling everything to 2K together, I did exactly what karl's first script does, but added a "scale" operation for u and v. In practice it does nothing, but it solves the wrong-merge problem, probably because "scale" copies the u and v planes into a 32-bit array consistent with the Y plane.
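Why a "do nothing" scale on u and v is enough here comes down to plane geometry. A sketch of the sizes involved, assuming a 3840x2160 4:2:0 source (the helper name is illustrative):

```python
# Plane sizes behind the extractplanes -> scale -> mergeplanes graph.

def yuv420_plane_sizes(width: int, height: int) -> dict[str, tuple[int, int]]:
    """Y is full size; U and V are subsampled by 2 in both directions (4:2:0)."""
    cw, ch = (width + 1) // 2, (height + 1) // 2
    return {"y": (width, height), "u": (cw, ch), "v": (cw, ch)}

planes = yuv420_plane_sizes(3840, 2160)

# scale=w=iw/2:h=1080 on the luma plane lands it on the chroma grid...
y_scaled = (planes["y"][0] // 2, 1080)

# ...so all three planes are 1920x1080 and can be merged as 4:4:4.
print(planes["u"], y_scaled)  # (1920, 1080) (1920, 1080)
assert planes["u"] == planes["v"] == y_scaled
```

The chroma planes of a UHD 4:2:0 file are already 1920x1080, so only the luma plane really needs resampling.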

The script is slightly faster, and I think this way there is less risk that the color components get modified. The script is as follows:

"C:\Program Files\ffmpeg\bin\ffmpeg.exe" -i "input.MOV" -filter_complex "extractplanes=y+u+v[y][u][v]; [y] scale=w=iw/2:h=1080:in_range=full:flags=print_info+bicubic+bitexact [ys]; [u] scale=w=iw:h=1080:in_range=full:flags=print_info+neighbor+bitexact [us]; [v] scale=w=iw:h=1080:in_range=full:flags=print_info+neighbor+bitexact [vs]; [ys][us][vs]mergeplanes=0x001020:yuv444p,format=pix_fmts=yuv444p10le" -sws_dither none -q 0 -quant_mat hq -c:v prores_ks -profile:v 4 -c:a copy -c:s copy -c:d copy -map 0 "E:\temp\output.mov"

or, as a bash script:

ffmpeg -i "$1" \
  -filter_complex 'extractplanes=y+u+v[y][u][v]; [y] scale=w=iw/2:h=1080:in_range=full:flags=print_info+bicubic+bitexact [ys]; [u] scale=w=iw:h=1080:in_range=full:flags=print_info+neighbor+bitexact [us]; [v] scale=w=iw:h=1080:in_range=full:flags=print_info+neighbor+bitexact [vs]; [ys][us][vs]mergeplanes=0x001020:yuv444p,format=pix_fmts=yuv444p10le' \
  -sws_dither none \
  -q 0 -quant_mat hq \
  -c:v prores_ks -profile:v 4 \
  -c:a copy \
  -c:s copy \
  -c:d copy \
  -map 0 \
  "$1_2k_ProRes4444.mov"

Any feedback is welcome.

-

Have you guys tried the Thomas Worth technique? http://www.eoshd.com/comments/topic/5426-mac-app-to-resample-gh4-8-bit-420-to-10-bit-444/ With it, the luma channel becomes real 10-bit. Here are 2 frames to compare; I simply cranked up the mids... look at the sky. Any chance we can get results as good with ffmpeg?
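The idea behind a "real" 10-bit luma from 8-bit 4K: each 2K pixel covers a 2x2 block of 4K pixels, and the sum of four 8-bit values spans 0-1020, i.e. 10 bits' worth of levels. A minimal sketch of that idea (a plain box sum on toy data, not Thomas Worth's actual code):

```python
# 2x2 box sum of 8-bit luma: four codes of 0..255 sum to 0..1020 (10-bit range).

def downsample_luma_2x2_to_10bit(plane: list[list[int]]) -> list[list[int]]:
    """Sum non-overlapping 2x2 blocks of an 8-bit luma plane (even dimensions)."""
    h, w = len(plane), len(plane[0])
    return [
        [plane[r][c] + plane[r][c + 1] + plane[r + 1][c] + plane[r + 1][c + 1]
         for c in range(0, w, 2)]
        for r in range(0, h, 2)
    ]

# A gentle gradient, like a sky: neighbouring 8-bit pixels differ by at most 1.
luma_4k = [
    [100, 100, 100, 101],
    [100, 101, 101, 101],
]
luma_2k_10bit = downsample_luma_2x2_to_10bit(luma_4k)

# For comparison: averaging back down to 8 bits throws the extra levels away.
luma_2k_8bit = [[round(v / 4) for v in row] for row in luma_2k_10bit]

print(luma_2k_10bit)  # [[401, 403]]
print(luma_2k_8bit)   # [[100, 101]]
```

The 10-bit values 401 and 403 correspond to 100.25 and 100.75 in 8-bit terms, intermediate steps that 8 bits cannot store; those are exactly the steps that help smooth gradients like a sky when grading.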

Attached: ffmeg.jpg (1920 x 1080, 835K), thomas.jpg (1920 x 1080, 885K)

-

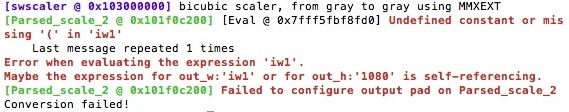

@schprox: Your error output above looks confusing... I cannot see any "iw1" in the command line that is referred to in the error message.

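Nevertheless, the script you tried above would not give you a 10-bit luma channel even if it worked, because it downscales the luma plane while it is still 8-bit and only converts to 10-bit afterwards. Try this one: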

ffmpeg -i "input.mp4" -filter_complex 'extractplanes=y+u+v[y][u][v]; [u] scale=w=3840:h=2160:flags=print_info+neighbor+bitexact [us]; [v] scale=w=3840:h=2160:flags=print_info+neighbor+bitexact [vs]; [y][us][vs]mergeplanes=0x001020:yuv444p,format=pix_fmts=yuv444p10le,scale=w=1920:h=1080:flags=print_info+bicubic+full_chroma_inp+full_chroma_int' -sws_dither none -q 0 -quant_mat hq -c:v prores_ks -profile:v 4 -c:a copy -c:s copy -c:d copy -map 0 "output.mov"
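The difference between the two scripts is the order of operations: downscale first and then convert to 10-bit, versus convert to 10-bit first and then downscale. A simplified sketch, using a plain 2x2 average as a stand-in for the bicubic filter in the real command (function names are illustrative):

```python
# Order matters: averaging in 8 bits re-quantizes before the 10-bit conversion.

def avg4_then_10bit(a: int, b: int, c: int, d: int) -> int:
    """Downscale while still 8-bit, then promote to 10-bit."""
    return round((a + b + c + d) / 4) << 2

def to_10bit_then_avg4(a: int, b: int, c: int, d: int) -> int:
    """Promote each 8-bit sample to 10-bit first, then downscale."""
    return round(((a << 2) + (b << 2) + (c << 2) + (d << 2)) / 4)

block = (100, 100, 100, 101)  # one 2x2 block of 8-bit luma
print(avg4_then_10bit(*block), to_10bit_then_avg4(*block))  # 400 401
```

Only the second order can produce 10-bit values that are not multiples of 4, i.e. levels between the original 8-bit steps.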

-

@schprox: As you seem to be using a Windows shell, try replacing the ' (single quote) characters in my script with " (double quotes). I'm not a Windows user or expert, but user willyfan mentioned this would adapt the syntax of my "bash" script for use with Windows earlier in this thread.

-

This is a topic dedicated solely to progress updates of any kind on software, code and methods for downscaling 4K with the best possible results, to help people establish a workflow for converting 4K footage.

...looks like the topic is all-inclusive... but many people will prefer off-the-shelf solutions to writing code!

-

@schprox: I think the differences you are seeing are not actually caused by ffmpeg and Thomas' software doing anything different when averaging each group of 4 adjacent luma pixels - if that were the case, we should expect to see most differences in the mid-tones, not in the highlights of the image.

I think what you see is the difference of using (logarithmic) DPX as an output format versus using ProRes.

If you want more comparable results, instruct ffmpeg to also output to individual DPX images:

ffmpeg -i "input.mp4" -filter_complex "extractplanes=y+u+v[y][u][v]; [u] scale=w=3840:h=2160:flags=print_info+neighbor+bitexact [us]; [v] scale=w=3840:h=2160:flags=print_info+neighbor+bitexact [vs]; [y][us][vs]mergeplanes=0x001020:yuv444p,format=pix_fmts=yuv444p10le,scale=w=1920:h=1080:flags=print_info+bicubic+full_chroma_inp+full_chroma_int" -sws_dither none -q 0 -map 0:v "output%05d.dpx"

(Note that the "%05d" pattern in the output name is required, as ffmpeg writes one output image file per frame.)
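The pattern follows printf-style formatting: %05d is the frame number, zero-padded to five digits, and ffmpeg's image2 muxer starts counting at 1 by default. A quick sketch of the resulting names, and of how long a clip five digits can cover at 25 fps:

```python
# printf-style frame numbering, as used in the image sequence output name.
pattern = "output%05d.dpx"
print([pattern % n for n in (1, 2, 12345)])
# ['output00001.dpx', 'output00002.dpx', 'output12345.dpx']

# Five digits stay fixed-width up to frame 99999.
max_frames = 10**5 - 1
minutes_at_25fps = max_frames / 25 / 60  # roughly 66.7 minutes
```

For longer clips a wider pattern such as %06d keeps the names sorting correctly.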
