-

Maybe it is stupid and can't play a little, like an artist would?

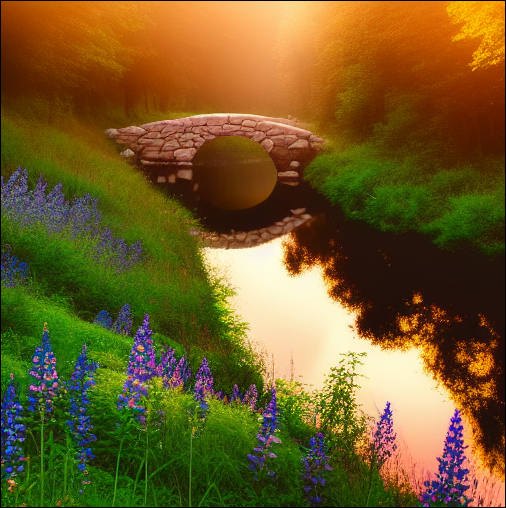

sa7258.jpg509 x 506 - 44K

sa7259.jpg507 x 504 - 49K

sa7260.jpg507 x 510 - 64K

-

How about an extremely complex scene: looking at a snowy, misty forest at sunset through a frosty window?

sa7266.jpg498 x 494 - 71K

sa7267.jpg505 x 505 - 63K

-

Beautiful

-

I've been playing with SD quite a bit, and have to say it's probably one of the best tools for mood boards. I'll explain why.

Due to the data dragnet style of training, it tends to generate imagery that is stereotypical. So it kinda forces you to be creative with prompts, and to think about what image you really want, and what constitutes that image.

"Person sitting on chair" quickly turns into: "middle aged man sitting on an old wooden chair, in a small room with wall paper, lit through an open window."

Then you can get an infinite number of permutations of this. Just brilliant for mood board generation!
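The prompt-refinement idea can also be made systematic. Below is a minimal Python sketch that is not part of SD itself: the attribute lists are hypothetical examples, and each resulting prompt would still be run with several seeds to get the image permutations.

```python
from itertools import product

# Hypothetical attribute lists for a mood board; every combination becomes one prompt.
subjects = ["middle aged man", "young woman"]
chairs = ["an old wooden chair", "a worn leather armchair"]
lighting = ["lit through an open window", "lit by a single desk lamp"]


def build_prompts(subjects, chairs, lighting):
    """Return one prompt string per combination of the attribute lists."""
    return [
        f"{s} sitting on {c}, in a small room with wall paper, {l}"
        for s, c, l in product(subjects, chairs, lighting)
    ]


prompts = build_prompts(subjects, chairs, lighting)
print(len(prompts))  # 2 * 2 * 2 = 8 prompts
print(prompts[0])    # the original "middle aged man" prompt
```

Adding one more attribute list multiplies the count again, so a handful of short lists quickly yields dozens of distinct starting points.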

Personally, I don't think it competes with the human neural network. Not because it's not good enough, but because people tend to enjoy filming. It's a tool that we can use, just like a camera is a tool.

Then again, when SD gets video, I wouldn't mind using it for b-roll. I don't really enjoy filming branches swaying all that much.

Then again, "middle aged man" turns out to be "white middle aged man with a belly".

middle_aged_man_sitting_on_an_old_wooden_chair__in_a_small_room_with_wall_paper__lit_through_an_open_Seed-88416_Steps-50_Guidance-7.5.png512 x 512 - 489K

0.jpg512 x 512 - 14K

-

1524394608_pencil_drawing_colorful_art_female_with_red_fully_transparent_underwear_and_large_straw_hat__looking_away_the_right_hand_touches_itself_in_an_intimate_place__road_going_till_horizon__yellow_sunflowers_along_the.png512 x 512 - 486K

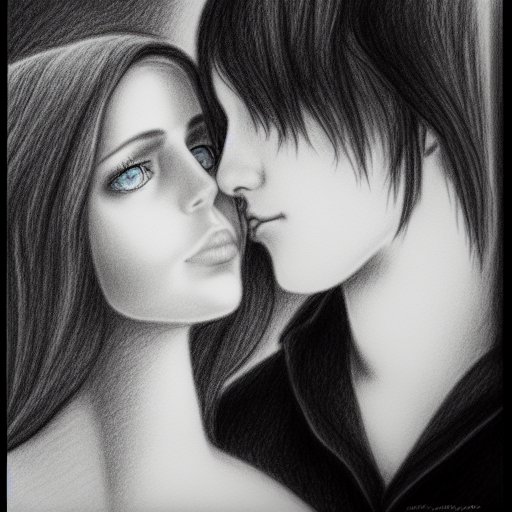

1711508014_pencil_drawing_art_black_and_white__tender_loving_female_with_very_big_blue_eyes_caressing_male_neck.png512 x 512 - 465K

2692316513_pencil_drawing_art_black_and_white__tender_loving_female_with_very_big_blue_eyes_caressing_male_neck.png512 x 512 - 472K

238565697_beautiful_female_with_red_transparent_dress_and_large_straw_hat__long_hair__looking_away__paved_road_going_till_horizon__yellow_sunflowers_along_the_road__green_fields_to_the_right_and_left_side__sunset_with_sun.png512 x 512 - 427K

1080427678_pencil_drawing_colorful_art_female_with_red_fully_transparent_dress_and_large_straw_hat__looking_away__right_hand_lifts_the_dress__road_going_till_horizon__yellow_sunflowers_along_the_road__green_fields_to_the_.png512 x 512 - 425K

-

It is not only about prompts.

I start from an initial seed image, sometimes specially modified. On each iteration you select one of 9 variants, adjust how much it is allowed to change the overall image, change the prompt, and paint over some areas so they change more. This can go on for 10, 15, or 20 iterations.

It is an art tool.
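The "how much it can change the overall image" setting is usually called strength (or denoising strength) in img2img tools. As a sketch of what it does, assuming the step arithmetic used by the Hugging Face diffusers img2img pipeline (the hosted tool used here may compute it differently), strength decides how many of the scheduled denoising steps are actually run on top of the noised init image. The function name `img2img_schedule` is hypothetical.

```python
def img2img_schedule(num_inference_steps: int, strength: float) -> tuple[int, int]:
    """Return (steps_skipped, steps_run) for one img2img pass.

    Assumption: mirrors the diffusers-style rule where the init image is noised
    to an intermediate level and only the last int(steps * strength) steps run.
    strength 0.0 leaves the init image untouched; 1.0 runs every step.
    """
    if not 0.0 <= strength <= 1.0:
        raise ValueError("strength must be between 0 and 1")
    steps_run = min(int(num_inference_steps * strength), num_inference_steps)
    steps_skipped = max(num_inference_steps - steps_run, 0)
    return steps_skipped, steps_run


for strength in (0.25, 0.5, 0.75):
    skipped, run = img2img_schedule(50, strength)
    print(f"strength={strength}: skip {skipped}, denoise {run} of 50 steps")
```

With 50 steps, strength 0.25 runs only the last 12 steps, so the chosen variant survives largely intact, while 0.75 runs 37 steps and lets the prompt repaint most of it. Lowering strength between iterations is one way to keep a picked variant while refining details.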

Note how I was able to make the dress transparent.

Or how I can add innuendo, making you feel that the girl is showing her private parts to the sunflowers. It was intentional, and the source image was the same as for both of the other red-dress girl images. Even her clothes are set not only by prompts; they are also iterated, painted over, and changed.

-

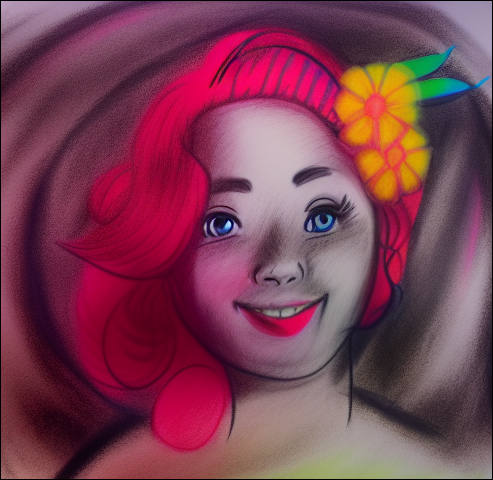

Another thing that appeared last week:

Made from this (many iterations in SD):

780164983_pen_drawing_black_and_white__art__beautiful_smiling_female_with_deep_bright_blue_large_eyes__long_eyelashes_with_mascara___red_nice_long_slightly_curly_hair__bright_red_lips.png512 x 512 - 434K

-

A few more of my art experiments made with Stable Diffusion.

These used a specially processed initial photo.

sa7284.jpg504 x 503 - 48K

sa7283.jpg481 x 505 - 40K

sa7286.jpg501 x 475 - 39K

sa7285.jpg493 x 480 - 37K

-

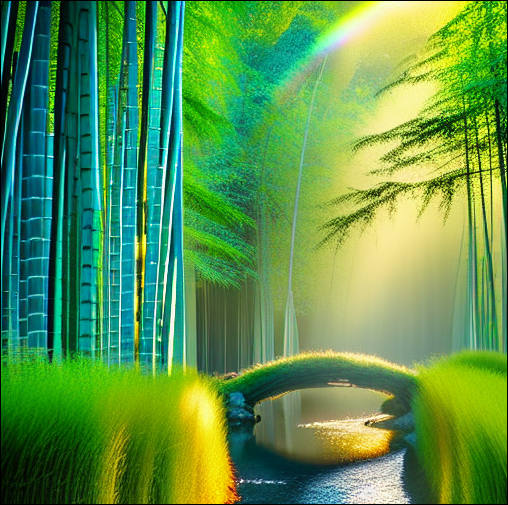

Now let's play with depth of field and precise lighting, while keeping the exact flower specs we need.

sa7287.jpg509 x 505 - 57K

sa7288.jpg505 x 506 - 42K

sa7289.jpg509 x 505 - 65K

-

@Vitaliy_Kiselev sorry, I didn't mean to say that the AIs have no value, or that they are not creative in their own right.

Actually, quite the opposite.

I see them as being an addition to photography, digital art, painting etc.

AI art is its own thing, and quite different from photography et al. Firstly, as you have shown, it takes a completely different skill set: one of description, keywords, seed imagery, etc.

I think that, rather than 'we don't need photographers anymore', it is possibly more like 'we don't need stock anymore'. Shutterstock & Adobe must be worried big time.

Regardless, it's its own thing, and AI for AI's sake is a valid art form. One that should be explored outside of "AI makes photographers redundant".

Have a look at this if you haven't already:

The big takeaway is that if it were perfect, as if shot on a camera, it would lose the important painterly, glitchy aesthetic. Sometimes, it's the imperfections that make it better.

-

It can be almost perfect, or it can be in some particular style.

It integrates different things, and you can mix them.

Your own mind functions similarly: it takes a small, focused spot image plus the previous image and makes an estimate based on your own knowledge.

-

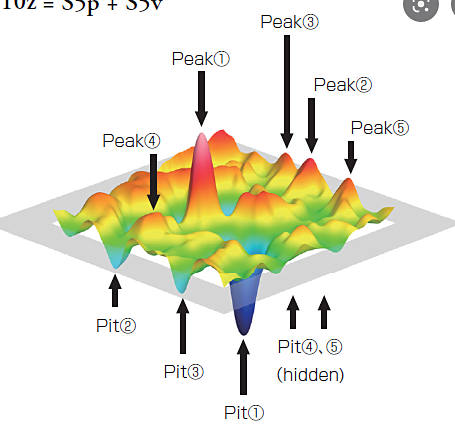

Mathematically, it looks like this:

The peaks are visually pleasing images (as the network judges them), because people trained the NN to give them what they want (most probably using backpropagation).
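One way to make the "peaks" picture concrete is a toy one-dimensional landscape. This is only an analogy, not how Stable Diffusion is implemented: the two Gaussian bumps below are made up, and the sketch does plain gradient ascent on the log density (the "score"). Real diffusion samplers use a trained network that approximates this score at many noise levels, and stochastic samplers also inject noise along the way.

```python
import math

# Toy 1-D "landscape": two made-up Gaussian bumps, centred at -2 and +3.
# The peaks stand in for "visually pleasing images"; an analogy only.
PEAKS = [(-2.0, 1.0, 0.5), (3.0, 0.8, 0.5)]  # (centre, std-dev, weight)


def density(x: float) -> float:
    """Mixture-of-Gaussians density at x."""
    return sum(
        w * math.exp(-((x - mu) ** 2) / (2 * s ** 2)) / (s * math.sqrt(2 * math.pi))
        for mu, s, w in PEAKS
    )


def score(x: float, h: float = 1e-5) -> float:
    """Central-difference gradient of log density (the 'score')."""
    return (math.log(density(x + h)) - math.log(density(x - h))) / (2 * h)


def climb(x: float, step: float = 0.1, iters: int = 500) -> float:
    """Plain gradient ascent on log density: walk uphill to the nearest peak."""
    for _ in range(iters):
        x += step * score(x)
    return x


for start in (-4.0, 0.0, 1.0, 5.0):
    print(f"start {start:+.1f} -> ends near {climb(start):+.2f}")
```

The starting point decides which peak you end up on, which loosely mirrors why the initial seed image and seed number matter so much.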

sa7292.jpg455 x 432 - 29K

-

sa7293.jpg511 x 505 - 43K

sa7294.jpg507 x 505 - 53K

sa7295.jpg506 x 502 - 42K

sa7296.jpg508 x 498 - 31K

sa7297.jpg509 x 502 - 38K

-

sa7298.jpg503 x 508 - 34K

sa7299.jpg500 x 500 - 38K

sa7300.jpg503 x 497 - 54K

-

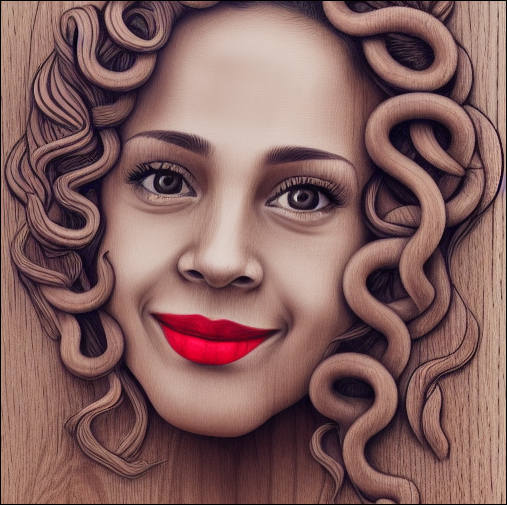

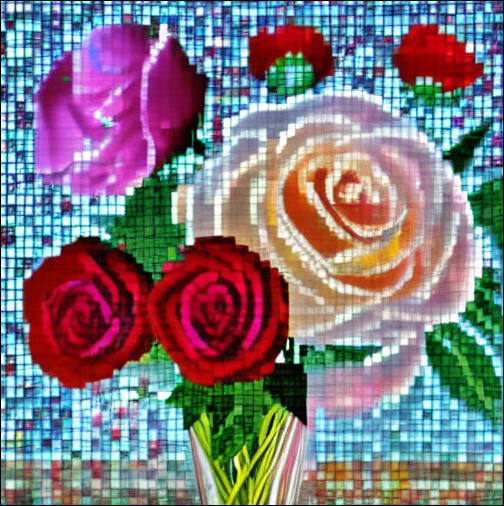

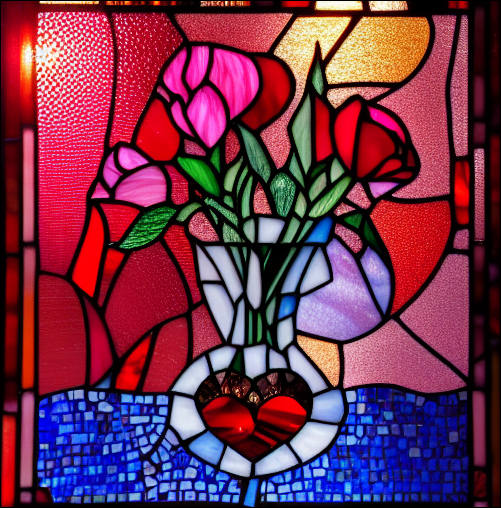

Experiments with mosaic and materials

sa7310.jpg508 x 507 - 85K

sa7309.jpg504 x 506 - 86K

sa7308.jpg501 x 508 - 89K

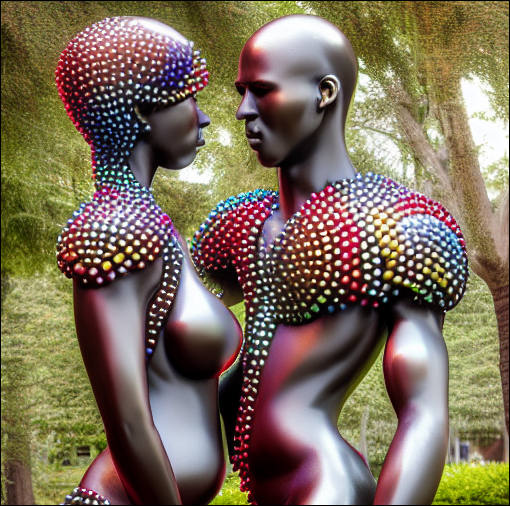

sa7307.jpg505 x 502 - 61K

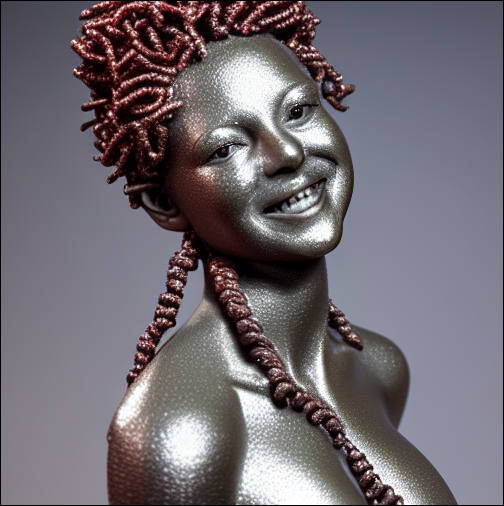

sa7306.jpg509 x 506 - 113K

sa7305.jpg510 x 505 - 80K

sa7304.jpg511 x 509 - 95K

sa7303.jpg504 x 503 - 44K

-

Metals and colored neodymium magnet balls

-

On lotus flowers

sa7326.jpg509 x 506 - 65K

sa7321.jpg507 x 503 - 51K

sa7325.jpg508 x 505 - 62K

sa7317.jpg506 x 506 - 41K

sa7319.jpg509 x 506 - 53K

sa7324.jpg507 x 507 - 70K

sa7318.jpg502 x 503 - 49K

sa7323.jpg502 x 504 - 61K

sa7320.jpg505 x 504 - 59K

-

@Vitaliy Wow! How much RAM do you need for your cool experiments?

-

I use a hosted service.

But ideally you need 11 GB or more of VRAM and a high-speed GPU with fast single-precision CUDA performance.

So: RTX 3080, 3090, or 3090 Ti.

Or the new 4090.

Note that Stable Diffusion v1.5 (which is what is used here) is still not publicly available for download and local use.
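To see where VRAM goes, the memory taken by the model weights alone is simple arithmetic. The parameter counts below are approximate, commonly quoted figures for Stable Diffusion v1.x; treat them as assumptions. Activations, attention buffers, and generating several variants per batch come on top, which is one reason a recommendation like 11 GB sits well above the weights figure.

```python
# Rough weight-memory estimate for Stable Diffusion v1.x.
# Assumption: approximate, commonly quoted parameter counts, not exact values.
PARAMS = {
    "unet": 860_000_000,
    "text_encoder": 123_000_000,
    "vae": 84_000_000,
}
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2}


def weights_gib(precision: str) -> float:
    """Memory for the weights alone, in GiB (2**30 bytes)."""
    total_params = sum(PARAMS.values())
    return total_params * BYTES_PER_PARAM[precision] / 2**30


for p in ("fp32", "fp16"):
    print(f"{p}: ~{weights_gib(p):.1f} GiB for weights alone")
```

Under these assumptions the weights take about 4 GiB in single precision and about half that in half precision; everything else during sampling has to fit in the remaining VRAM.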
