-

Samsung's custom CPU core arm of the company is reportedly shutting down. The research and development facility which housed around 290 employees will cease to work on future projects, according to a WARN letter filed with the Texas Workforce Commission. For the longest time, Samsung’s Exynos range of chipsets continued to attain the second position when pitted against Qualcomm’s Snapdragon family in performance benchmarks. For the foreseeable future, Samsung is expected to rely on ARM’s cores for performance-related tasks. According to the latest report, the layoffs will be effective as of December 31 and will be considered a permanent decision.

It’s possible Samsung didn’t want to cut ties with its custom CPU core department, having invested an estimated $17 billion in its Austin campus over the years. Unfortunately, considering the number of roadblocks the company had in its path, the best possible decision was to let it go.

This is interesting. We can see two possible reasons: the extreme cost of development for 5nm and later processes, and Samsung may also want to get out from under US control over chip development. So it is possible that they already have a secret development facility that is just waiting for the next stage of the trade wars.

-

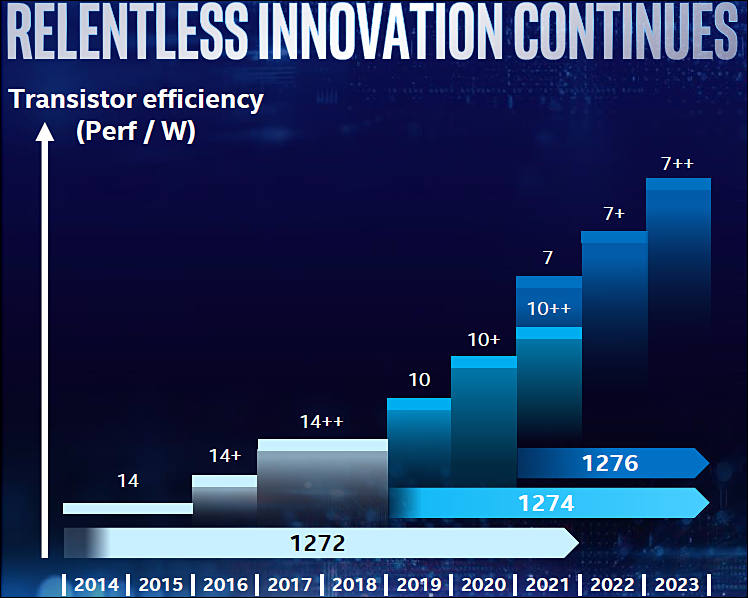

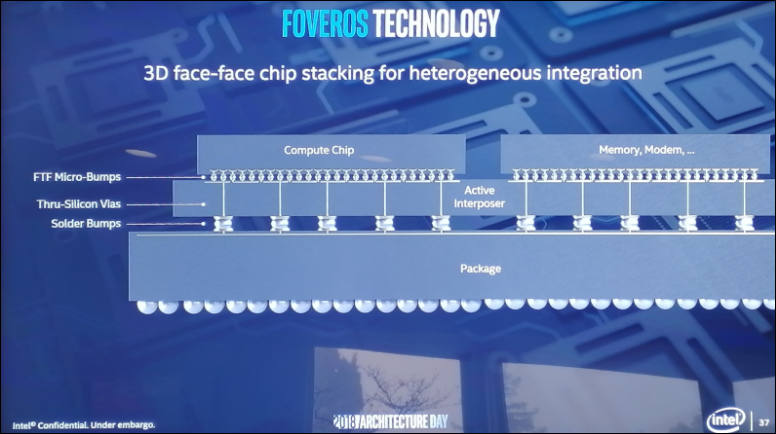

Complex Intel chip plans.

There is just one issue: such complex mounting of one chip on top of another is very fragile and can have problems even from fast temperature changes. And for notebooks it is even worse, as any significant drop can mean a motherboard replacement (such chips can also be very hard to mount or replace!).

-

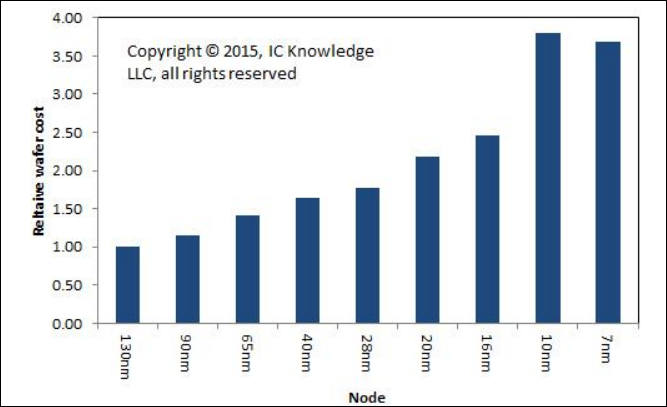

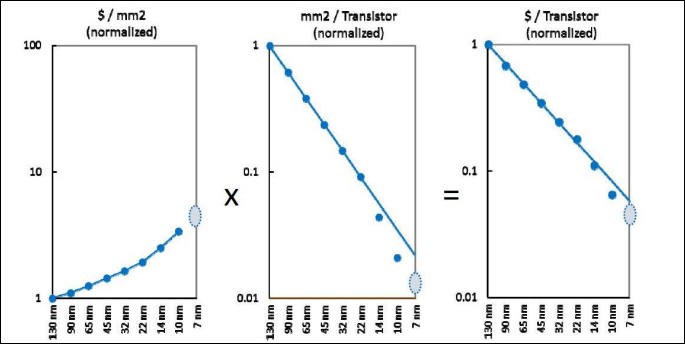

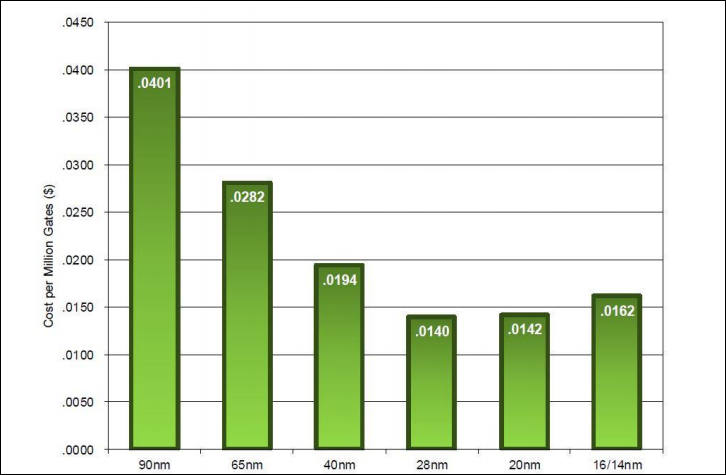

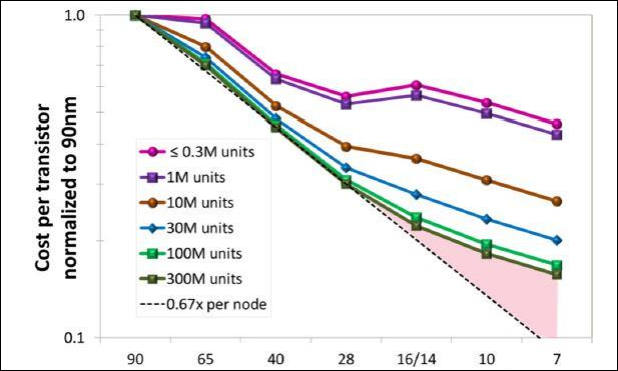

Some older cost charts

-

There is a massive layoff and reorganization coming to a Silicon Valley chip company in the near future. SemiAccurate has heard the plans from multiple sources, enough to say with confidence that this is a big one, and several senior people are already sending out resumes.

https://semiaccurate.com/2019/11/20/large-layoffs-and-reorg-to-hit-silicon-valley-soon/

Usually this guy is spot on.

-

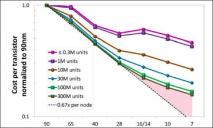

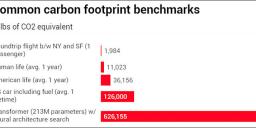

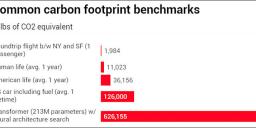

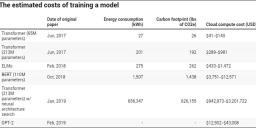

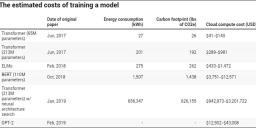

Issues with modern neural networks training

This is a big reason why all the major firms suddenly started making "AI accelerators": all other approaches no longer work well. And complexity keeps rising.

-

From an interview with UMC, one of the players that dropped out of the race

UMC, once a major rival to TSMC in pursuing advanced manufacturing nodes, decided about two years ago to shift its focus away from joining the race to 10nm and more advanced process technologies. UMC disclosed previously plans to enhance its 14nm and 12nm process offerings but to suspend sub-12nm process R&D.

UMC encountered some bottlenecks in the 0.13-micron process race. It was a vicious circle in which we lost market share in the advanced node market segment and saw impacts on our revenues, coupled with a decline in our available R&D capital. Such experience pushed UMC to rethink its strategy to avoid being trapped again in a rat race.

In 2017, UMC recognized its role could make a difference. Rather than fighting to be a technology leader in the advanced-node process segment, UMC can be more capable of being a leader in the more mature process segments.

-

TSMC continues to see its 7nm manufacturing processes run at full utilization, according to sources at fabless chipmakers who said new orders placed recently cannot be fulfilled at least until the middle of next year.

Something is up with 7nm, as AMD and other players also can't get enough chips and are getting far fewer than they could sell. And this is already after AMD deliberately delayed releases, pushed Threadripper back even further and jacked up prices.

-

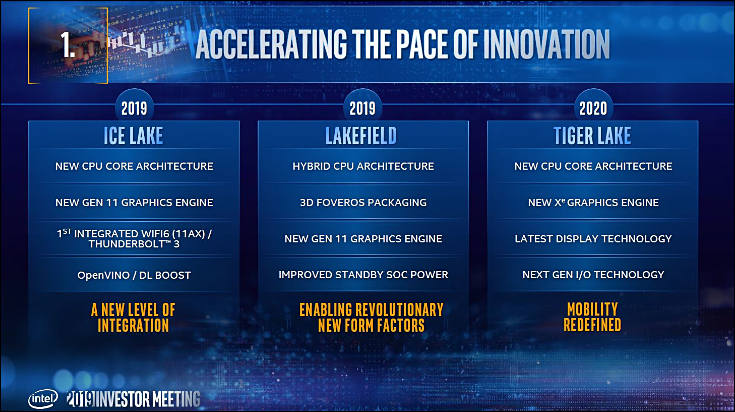

The Tiger Lake CPU family is anticipated to hit the market sometime within the 2020 - 2021 timeframe, though I would take this with a grain of salt as the 10nm process still has a way to go before full-scale production. Tiger Lake is set to be the successor to Intel's first-generation 10nm Ice Lake and will take shape as the optimization step within Intel's Process-Architecture-Optimization model as the third-generation 10nm variant built by Intel (10nm++).

These are nice fairy tales, but for now even 10nm can be used only for the slowest and simplest mobile chips.

-

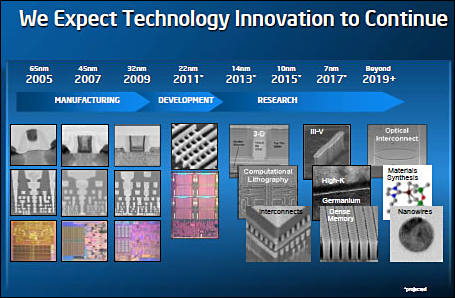

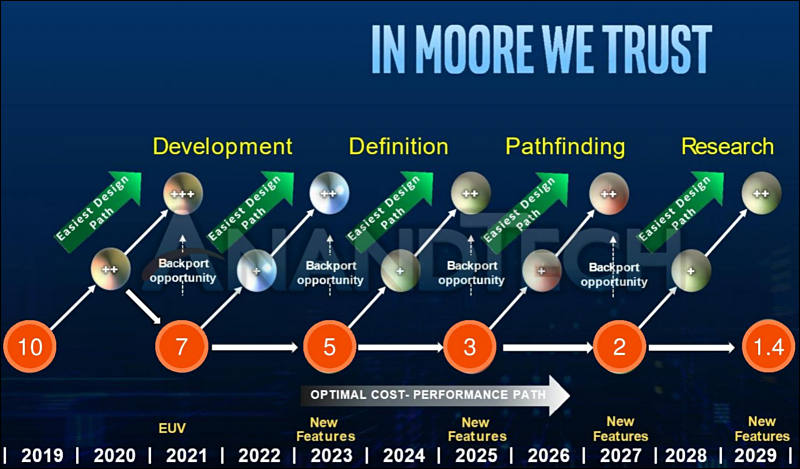

Intel again talked about their 7nm and future 5nm.

The present fairy tale is that they will be able to make maybe one product on 7nm, in extremely limited batches, in 2021-2022. But it is not certain.

5nm, in the minds of extremely optimistic Intel management, can happen in 2023-24, but maybe slightly later. Or not slightly. Or never.

Back in the day they also had nice expectations

-

We say [Moore's Law] is slowing because the frequency scaling opportunity at every node is either a very small percentage or nil going forward; it depends on the node when you look at the foundries. So there's limited opportunity, and that's where how you put the solution together matters more than ever.

We've made no announcements on SMT4 at this time. In general, you have to look at simultaneous multi-threading (SMT): There are applications that can benefit from it, and there are applications that can't. Just look at the PC space today, many people actually don’t enable SMT, many people do. SMT4, clearly there are some workloads that benefit from it, but there are many others that it wouldn’t even be deployed. It's been around in the industry for a while, so it's not a new technology concept at all. It's been deployed in servers; certain server vendors have had this for some time, really it's just a matter of when certain workloads can take advantage of it.

There is a lot of bad news ahead, as increasing core counts and doubling SMT are the last general methods the industry has for now.

In the next few years we can see an extreme gap open up between notebooks and smaller computers on one side and powerful desktops on the other.

With the present Threadripper you already need around 400W of cooling capability (to max out performance) and another 300-600W for top GPUs. These numbers will double in 2-3 years.

So, look for a case that allows mounting a pair of 420 water blocks, or maybe more.
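
A rough back-of-the-envelope sketch of the numbers above, in Python. The function name `cooling_budget` and the flat 2x growth factor are illustrative assumptions based on the "double in 2-3 years" guess, not measured figures:

```python
def cooling_budget(cpu_w, gpu_range_w, growth=1.0):
    """Return (low, high) total heat in watts that the case must remove.

    cpu_w       -- CPU cooling requirement in watts (about 400 W in the post)
    gpu_range_w -- (low, high) GPU power in watts (300-600 W in the post)
    growth      -- assumed multiplier for future hardware (hypothetical)
    """
    gpu_low, gpu_high = gpu_range_w
    return ((cpu_w + gpu_low) * growth, (cpu_w + gpu_high) * growth)


# Today, using the figures from the post
print(cooling_budget(400, (300, 600)))

# In 2-3 years, assuming everything simply doubles
print(cooling_budget(400, (300, 600), growth=2.0))
```

With these inputs the total comes to 700-1000W today and 1400-2000W after doubling, which is why the radiator space in the case matters.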

-

3nm Hutzpah

Continuing with the goal to match or even beat the famous Moore's Law, TSMC is already planning for future 3 nm node manufacturing, promised to start HVM as soon as 2022 arrives, according to JK Wang, TSMC's senior vice president of fab operations. Delivering 3 nm a whole year before originally planned in 2023, TSMC is working hard, with fab construction work doing quite well, judging by all the news that the company is releasing recently.

Lately TSMC seems to be in mad PR mode, making promises left and right that they can't keep in order to get orders from top players.

It is worth remembering that Intel behaved similarly just 3-4 years ago. And look what happened: now Intel is hurriedly talking to Samsung to survive.

Even the current 7nm process has lots and lots of issues that clients just hide because of the huge profits they make themselves.

-

According to Bob Swan Intel 7nm is equivalent to TSMC 5nm. He also said that Intel 5nm will be equivalent to TSMC’s 3nm.

Bob also talked about Intel’s transitions from 22nm to 14nm to 10nm in very simple terms. 22nm to 14nm had a 2.4x density target which as we now know was a very difficult transition. From 14nm to 10nm Intel targeted a 2.7x density target which led to even more manufacturing challenges. Intel 7nm with EUV will be back to a 2.0x scaling target.
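
To put these targets together, here is a minimal sketch that multiplies the per-node scaling factors from the quote into a cumulative density figure relative to 22nm. The names `targets` and `cumulative_density` are hypothetical, and the numbers are the stated targets, not achieved densities:

```python
# Density scaling targets as quoted above (targets, not measured results)
targets = [
    ("22nm -> 14nm", 2.4),
    ("14nm -> 10nm", 2.7),
    ("10nm -> 7nm", 2.0),
]


def cumulative_density(steps):
    """Return [(transition, density relative to the starting node), ...]."""
    total = 1.0
    result = []
    for name, factor in steps:
        total *= factor
        result.append((name, round(total, 2)))
    return result


for name, density in cumulative_density(targets):
    print(f"{name}: {density}x the 22nm density")
```

On paper that is 6.48x at 10nm and 12.96x at 7nm compared to 22nm, assuming every target is actually hit.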

There is just one tiny little problem: Intel does not even have a normally working 10nm.

-

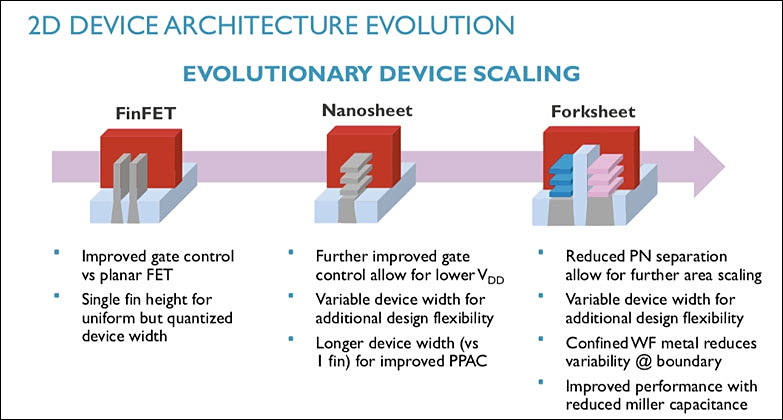

Imec proposal for a new transistor structure for 3nm

Samsung, in turn, wants to make them like this:

Structure changes always also mean price hikes.

-

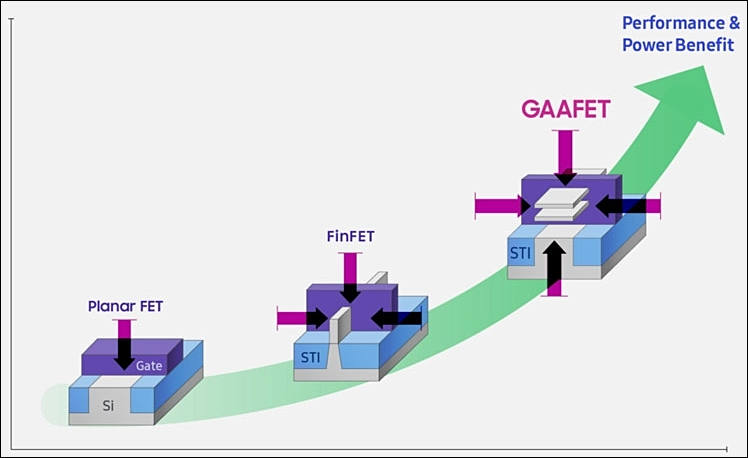

New Intel dreams

This one was definitely made under very hard drugs.

-

Yes, I was just about to post about a sub-3nm technology that used modified existing lines, so it could be done more cheaply. I can't remember the name, but it might be the one you just posted.

I vaguely remember some technology that was smaller again.

But what about leakage with these things? Wasn't leakage supposed to be the ultimate restriction before size makes it unviable?

3D vertical transistors and circuit stacking have been used for many years in the industry. The problem with 3D stacking, of parallel circuits particularly, is thermal buildup. Magnetic-based processing can produce up to a million times less heat, so it is better for stacking. You should look at doing a thread on magnetic FPGA? Magnetic Quantum Cellular Automata were an early leading research technology.

-

I couldn't find a direct link to an article on it in my bookmarks, but here are some interesting articles that I found:

https://news.ycombinator.com/item?id=14486437

https://en.wikichip.org/wiki/3_nm_lithography_process

https://newscenter.lbl.gov/2016/10/06/smallest-transistor-1-nm-gate/

-

Sorry, this looks like the one:

http://news.mit.edu/2018/smallest-3-d-transistor-1207

It just reuses the vapour deposition process. The 2.5nm FinFETs have 60% more performance with a higher on/off contrast ratio. They talk about atomic-level precision; it makes the transistors layer by layer. I wonder how it would go with new transistor structures.

The low production rates at 7nm and below can be compensated for by charging an extreme premium for the small quantities to whoever wants to pay for it. So cutting-edge applications and the military might pay big dollars to soak those up. But as a general mass product, it is difficult to justify extreme prices for an extra 20% or so of benefit. However, if you had a 1 nanometer circuit working at full speed today in even a 1000-unit successful production run, you could virtually auction them off to the highest bidder, 10k+, or better for the military, I would imagine.

-

Military orders play almost zero role in financing the latest processes, as the military uses much older ones, and for most products very old ones.

Around 80% of the financing comes from buyers of mid-range to premium smartphones, and all the rest from datacenters and supercomputers.

-

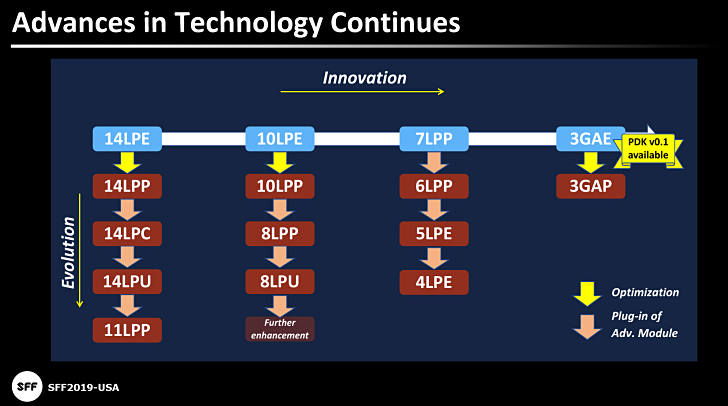

And here we have Samsung with their little fantasies

-

I had an FPGA technology that looked promising for 5GHz+ around 14 years ago disappear into military-only use. This has happened a few more times to me too. I see a technology and think, thank you very much, I can use that, only to find out that it gets taken by the military. In patenting, they have procedures where, if something has certain military uses, it is automatically diverted to government and defence companies. Metal Storm were famous for slipping past this patent procedure, which makes me wonder how they did that, as I'm pretty sure they were doing cutting-edge weapons systems before that, and one of my teachers was an ex Cornel, I think, who used to work there previously.

Being existing, stabilised, rugged and radiation hardened are all reasons to keep using standard tech, but a Z80 doesn't have much place being the brains running an aircraft-sized auto drone.

-

Well, you don't need to know secrets to understand that the military plays only a very minor role in major areas. They can be good in certain small niche things.

Btw, tales of optical processors are present even in old literature. The issue with them is that you can't make them dense and you can't get good, proper reflections along a complex long path. I am not even talking about the lack of optical alternatives for many of the elements present in any modern CPU.

This thing is similar to the present advanced scam of quantum CPUs, where huge resources are used to repeat the same thing without any real progress. But there is a lot of news and "products".

The issue is that all modern tech is a very social thing, requiring international cooperation and a large number of people, usually hundreds of thousands. The same goes for resource usage.

5GHz+ FPGA tech went the same way Intel's 7GHz claims went. People at the time had a very poor understanding of how smaller transistors work and of the many small things that make up the frequency wall.

-

Exactly my point, Vitaliy. They pick up niche things that will give them an exclusive lead. Cost effectiveness plays a major role in which processes get supported in the commercial realm, but certain players pay high amounts for performance in otherwise unviable technology. So, if I had 1nm technology that was ten times more costly to make than normal tech, they would line up to pay 100x the end price.

Anyway, that FPGA had three times the potential over 5GHz, and it got signed exclusively to the military. At the time I think Intel had sold a 5GHz CPU and someone had overclocked something way, way past that using liquid nitrogen or something. It wasn't conventional chip technology. And on GHz claims, individual circuit elements may be doing a lot more than the entire synchronised, clocked circuit. The guy I mentioned had some part of his circuit running at 5GHz or something way back, but the chip ran at less than 500MHz, or something like that. The FPGA people were looking at a 5GHz FPGA in a period of 500MHz FPGAs, but that is likely to yield a complex circuit many times lower in speed rather than a Pentium 4+. Still sad though.

There is a lot of advanced technology out there that didn't gain much traction or more funding because of cost effectiveness, or because it was niche, like sapphire chips. Litter left over from the struggle forwards. One that didn't end up that way was a form of 100QE OLED-like technology that the military funded, likely for lighting purposes to extend energy reserves. That has now been used commercially in consumer products for years. A number of things get initial development and funding from the US government before being set free. As for 'scams', OLED eventually turned out over decades, and it is still a bit underwhelming, with certain deficiencies.

I suspect that in chips certain things will turn out in ways neither we nor the industry expected. I mean, the way to beat silicon is to go non-silicon, and they know how to do that, but people don't want to abandon their expensive equipment and momentum yet. They keep pushing silicon designs uphill instead of investing that money into the alternative. Every memory stick in your computer should also have processing functions, but it is just another option not receiving the attention it should. Present and past experience is no absolute guide to what can happen in a progressive, dynamic situation. With the right funding emphasis, something better pops out.

Above I mentioned an accurate atomic-level way of making a 2.5nm circuit using existing lines. Being able to make something precisely is key. So, are such techniques going to lead to sub-nanometer circuits one day? Sure, it might even be horribly expensive to do that, but if it is the most viable game in town it can thrive. But the question is, can that be applied to make optical components too? With atomic precision, there would be interesting times ahead if they figure that part out. This becomes metamaterial optics engineering at that scale. Very exciting potential.

Optical tales? I'm telling optical fact, kept from the commercial market. In my own design proposals I can see serious deficiencies in using optical for conventional design too. So I put that aside, but there are ways and means to get desirable results in some areas. People able to make Star Trek-like free-floating holograms might have technology to solve some of the issues and do a good job at it. I've got certain proposals for things to solve certain issues, but it is a complex mess to miniaturize them down to chip level, though a solution just occurred to me. Anyway, even a larger-scale optical processor has advantages due to the speed of light and lack of leakage, if you can figure out how to do it properly. But you don't need a conventional processor to do the animations they were doing.

-

The question is, why don't we have 10GHz+ silicon chips? What are the constraints these days? They have been saying for a long time that silicon is going to tap out in efficiency due to leakage, but then 7nm and then 5nm came up, and the group above is getting gains at 2.5nm. I know in science they deal with absolutes, but in engineering they work around these absolutes, which is why it's dangerous to take average scientists and engineers as absolutely right. I immediately thought of ways to deal with superposition producing leakage way back, but not in that field. So, what tricks are they using now?

Now, the highest silicon speed in research is hundreds of GHz maybe, I can't remember. Surely they should be able to do at least 10GHz with that? One thing I know from past associates is that silicon has a natural speed, and overclocking it produces a lot more leakage. At 180nm I know people who were doing close to 0.06-0.07mW for 600-700MHz with program memory. What the big CPU companies have done is keep the clocking low in order to deliver more in a certain power envelope. Because their chips are horribly complex circuits, they use a lot more energy per unit of speed. I'm hoping that the technology settles down to a natural speed of 5GHz+ for a simple complex circuit. 5GHz would be a useful speed for many things. It would be enough for many realtime graphics and video manipulation applications to produce good realtime realism in a mass processor array. While parallelism gets you so far, a certain minimum speed is needed to do it properly. I am not confident it is enough, and I previously did related calculations for my own work. However, there is a certain technology where much slower works.

So, I'm interested in how they are dealing with the silicon efficiency constraints, as they are interested in doing optical, Quantum Automata, probably even magnetic, if such a thing exists for silicon, on silicon processes. So what they can do on silicon sets the size limits for these next-gen technologies on silicon. I suppose looking up the speeds at which they clock low-power memory might be a good indication of where things are at.

-

Again Intel 10nm Issues

It looks like Intel is not just delaying a single server project, their entire roadmap has just slid significantly.

https://www.semiaccurate.com/2019/12/12/intel-significantly-delays-its-entire-server-roadmap/
