-

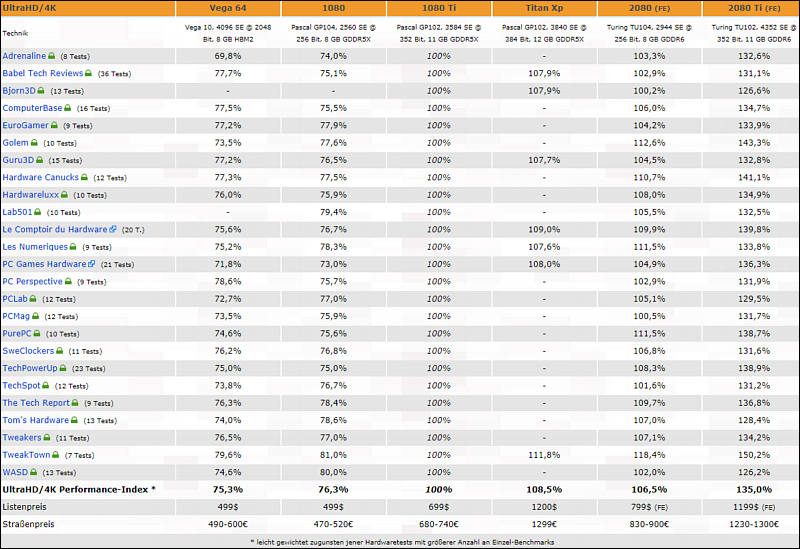

Does this mean it is a good time to buy used 1080 Ti graphics cards on eBay? https://www.ebay.com/itm/MSI-GeForce-GTX-1080-Ti-DirectX-12-GTX-1080-Ti-SEA-HAWK-EK-X-11GB-352-Bit-GDDR5X/173705847028?epid=3012716392&hash=item2871ace0f4:g:Z74AAOSwlINcIGSl

So they are running around $550.00. Is that the move to make?

-

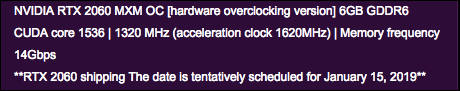

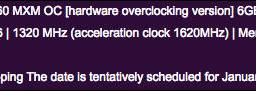

Back to GeForce RTX 2060

- The card will have 3GB, 4GB and 6GB versions

- It will also have older GDDR5X and new GDDR6 versions

Total mess.

-

The cheaper card will be called GeForce GTX 1660 Ti.

-

The price of the RTX 2060 is rumored to be $400.

In short: go and buy an ex-mining RX 580 8GB.

-

The RTX 2060 will be announced during the second week of January.

-

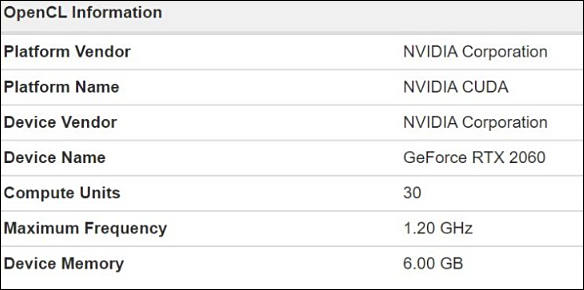

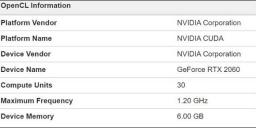

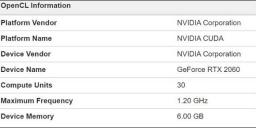

RTX 2060 news

- TU106 chip

- 30 compute units (SMs)

- 1920 CUDA cores, the same as the GTX 1070 (the GTX 1060 6GB had 1280)

Performance is expected to be around GTX 1070 Ti level or slightly below.
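The core count follows directly from the SM count. A minimal sketch of the arithmetic, assuming Turing's 64 FP32 CUDA cores per SM (the per-SM figure is my assumption, not part of the rumor):

```python
# Rumored RTX 2060 configuration from the post above.
RTX_2060_SMS = 30
# Assumption: each Turing SM contains 64 FP32 CUDA cores.
CORES_PER_SM = 64

GTX_1060_6GB_CORES = 1280
GTX_1070_CORES = 1920


def total_cores(sms: int, cores_per_sm: int) -> int:
    """Total CUDA cores = number of SMs x cores per SM."""
    return sms * cores_per_sm


rtx_2060_cores = total_cores(RTX_2060_SMS, CORES_PER_SM)
gain_vs_1060 = rtx_2060_cores / GTX_1060_6GB_CORES - 1.0

print(f"RTX 2060 cores: {rtx_2060_cores}")                      # 1920
print(f"Same as GTX 1070: {rtx_2060_cores == GTX_1070_CORES}")  # True
print(f"More cores than GTX 1060 6GB: {gain_vs_1060:.0%}")      # 50%
```

Core count alone does not set performance (clocks and memory bandwidth matter too), which is why the estimate lands near the 1070 Ti rather than being derived from cores alone.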

-

DaVinci, by design (node-based, and it keeps adding features with slow code), is the worst possible candidate for such tests.

True. However, node-based compositors can (and some do) use GPU acceleration. Fusion, however, does not: it runs mainly on CPU code (or OpenCL, which mostly falls back to the CPU).

Most image transforms should be calculated with shaders. Shaders can handle high-bit-depth color and are super simple to implement.
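To illustrate the idea, here is a CPU-side Python sketch of what a grading shader does: a pure function runs independently on every pixel, in floating point, so intermediate values are neither clipped nor quantized until the final output. The lift/gamma/gain formula and all names are hypothetical illustrations, not any particular application's implementation.

```python
from typing import List, Tuple

# Hypothetical pixel type: linear RGB as floats, nominally 0.0-1.0 but allowed to exceed it.
Pixel = Tuple[float, float, float]


def grade_pixel(p: Pixel, lift: float, gamma: float, gain: float) -> Pixel:
    """A 'shader': reads only its own pixel, so a GPU could run it for every pixel in parallel."""
    out = []
    for c in p:
        v = c * gain + lift * (1.0 - c)   # one common lift/gain formulation
        v = max(v, 0.0) ** (1.0 / gamma)  # gamma; negatives clamped so the power is defined
        out.append(v)                     # stays float: no 8-bit rounding between steps
    return (out[0], out[1], out[2])


def run_shader(image: List[Pixel], lift: float, gamma: float, gain: float) -> List[Pixel]:
    # On a GPU each call would be an independent thread; on the CPU we simply loop.
    return [grade_pixel(p, lift, gamma, gain) for p in image]


def quantize(v: float, bits: int) -> int:
    """Convert to integer code values only at output time, at the requested bit depth."""
    max_code = (1 << bits) - 1
    return round(min(max(v, 0.0), 1.0) * max_code)


image = [(0.0, 0.5, 1.0), (0.25, 0.25, 0.25)]
graded = run_shader(image, lift=0.0, gamma=1.0, gain=2.0)
print(graded)                                # [(0.0, 1.0, 2.0), (0.5, 0.5, 0.5)]
print([quantize(c, 10) for c in graded[0]])  # [0, 1023, 1023]
```

Note that the over-range value 2.0 survives inside the pipeline and is only clipped when quantized for output, which is the high-bit-depth advantage in miniature.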

Until now, the main issue with shaders was that the raw shader code was shipped uncompiled, so any user could easily read it. Vulkan allows precompiled shaders (SPIR-V), so I suspect that more proprietary software will embrace this technology now.

-

Well, these guys focus on 4K with artificial setups, like adding three slow plugins and four tracking areas.

And they have a direct interest in certain test outcomes (with different outcomes they would go bust), hence all the careful choice of parameters.

DaVinci, by design (node-based, and it keeps adding features with slow code), is the worst possible candidate for such tests.

In reality, if you write an NLE properly, a 1050 Ti works perfectly with 4K at 60 fps with one tracking area, color grading plus sharpening, and other stuff.
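A rough back-of-envelope check of the 4K/60 claim: count the pixels per second that must be processed, then divide an assumed GPU throughput by that rate. The ~2 TFLOPS figure for a GTX 1050 Ti is an approximate assumption, and real filters are often limited by memory bandwidth as much as by arithmetic.

```python
UHD_WIDTH, UHD_HEIGHT = 3840, 2160  # consumer 4K (UHD) frame size
FPS = 60

# Assumption: roughly 2 TFLOPS FP32 peak for a GTX 1050 Ti (approximate, not measured).
ASSUMED_PEAK_FLOPS = 2.0e12

pixels_per_frame = UHD_WIDTH * UHD_HEIGHT   # 8,294,400
pixels_per_second = pixels_per_frame * FPS  # 497,664,000
flops_per_pixel_budget = ASSUMED_PEAK_FLOPS / pixels_per_second

print(f"{pixels_per_second:,} pixels/s")
print(f"~{flops_per_pixel_budget:,.0f} FLOPs available per pixel at peak")
```

Even at a fraction of peak, that leaves on the order of a thousand operations per pixel, likely more than a grade plus a sharpening pass needs.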

-

The RTX 2080 Ti gives performance like the Titan V. I'm going to buy it.

-

On the DLSS thing

-

Nvidia promised another 10% price hike, blaming it on new US fees.

Nice news.

-

A 35% boost, not bad. And you also get amazing ray tracing performance and DLSS AI-powered anti-aliasing. In the future, when games and applications support these, the performance boost will be much bigger.

-

Well, bad

-

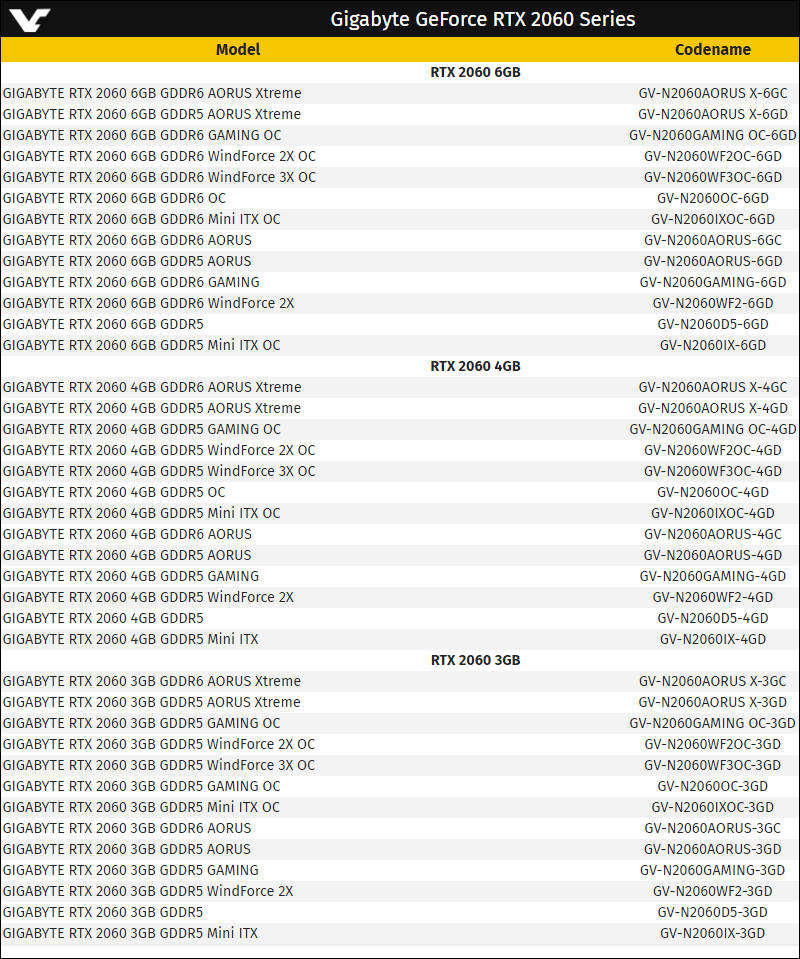

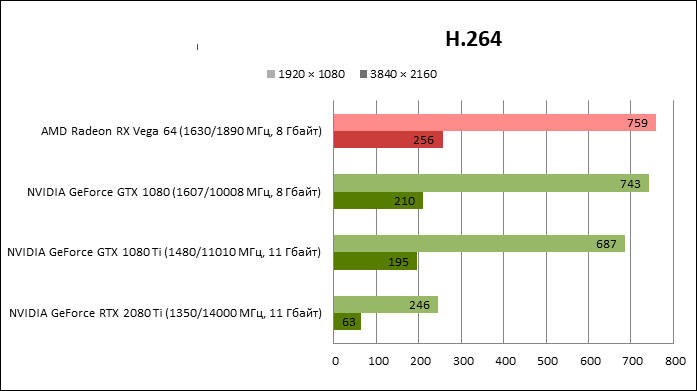

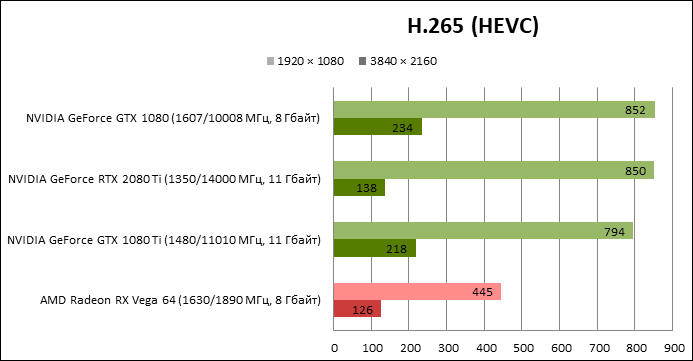

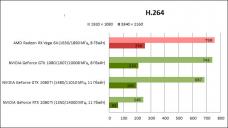

The most interesting thing is what happened to the H.264 decoder: current drivers limit the decoding block's clock frequency.

-

Ray tracing is amazing.

-

Actually, the whole point of Turing is that it can do some of both in parallel. So, for mixed workloads, the new cards are much better.
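An idealized model of why separate INT32 and FP32 pipes help mixed code: if both kinds of instruction share one pipe, issue time is their sum; with concurrent pipes it approaches the larger of the two. The mix of 36 integer per 100 floating-point instructions is the figure Nvidia has cited for games in its Turing material; treat it, and the one-instruction-per-clock-per-pipe model, as assumptions (dependencies, scheduling and memory stalls are ignored).

```python
def serial_cycles(fp_ops: int, int_ops: int) -> int:
    """Shared pipe (simplified pre-Turing model): FP and INT instructions issue one after another."""
    return fp_ops + int_ops


def concurrent_cycles(fp_ops: int, int_ops: int) -> int:
    """Separate pipes (Turing-style): both streams issue together, so the longer one dominates."""
    return max(fp_ops, int_ops)


FP_OPS, INT_OPS = 100, 36  # assumed instruction mix

speedup = serial_cycles(FP_OPS, INT_OPS) / concurrent_cycles(FP_OPS, INT_OPS)
pure_fp_speedup = serial_cycles(FP_OPS, 0) / concurrent_cycles(FP_OPS, 0)

print(f"Idealized speedup for mixed code: {speedup:.2f}x")  # 1.36x
print(f"Idealized speedup for pure FP32: {pure_fp_speedup:.2f}x")  # 1.00x
```

For pure FP32 or pure INT32 code the model gives no gain at all, which is why the advantage shows up only for mixed workloads.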

The only real advantage may be that some video filters can be implemented with an FP16 option.
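Whether FP16 is enough for a filter depends on the bit depth. Half precision has an 11-bit significand, so every integer up to 2048 is stored exactly: all 10-bit code values survive, but some 12-bit ones do not. A quick check using Python's `struct` half-float format (`'e'`); working on raw integer code values rather than normalized 0-1 values is a simplifying assumption.

```python
import struct
from typing import List


def to_fp16_and_back(value: float) -> float:
    """Round-trip a value through IEEE 754 half precision (binary16)."""
    return struct.unpack("<e", struct.pack("<e", value))[0]


def inexact_codes(bits: int) -> List[int]:
    """Integer code values of the given bit depth that FP16 cannot store exactly."""
    return [v for v in range(1 << bits) if to_fp16_and_back(float(v)) != v]


lost_10bit = inexact_codes(10)
lost_12bit = inexact_codes(12)
print(len(lost_10bit))  # 0
print(len(lost_12bit))  # 1024 (every odd value from 2049 to 4095)
```

Filters normally work on normalized values, where the rounding error is relative (at most about 0.05% of the value for normal numbers) rather than absolute, so treat this only as a rough guide to where FP16 starts to bite.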

-

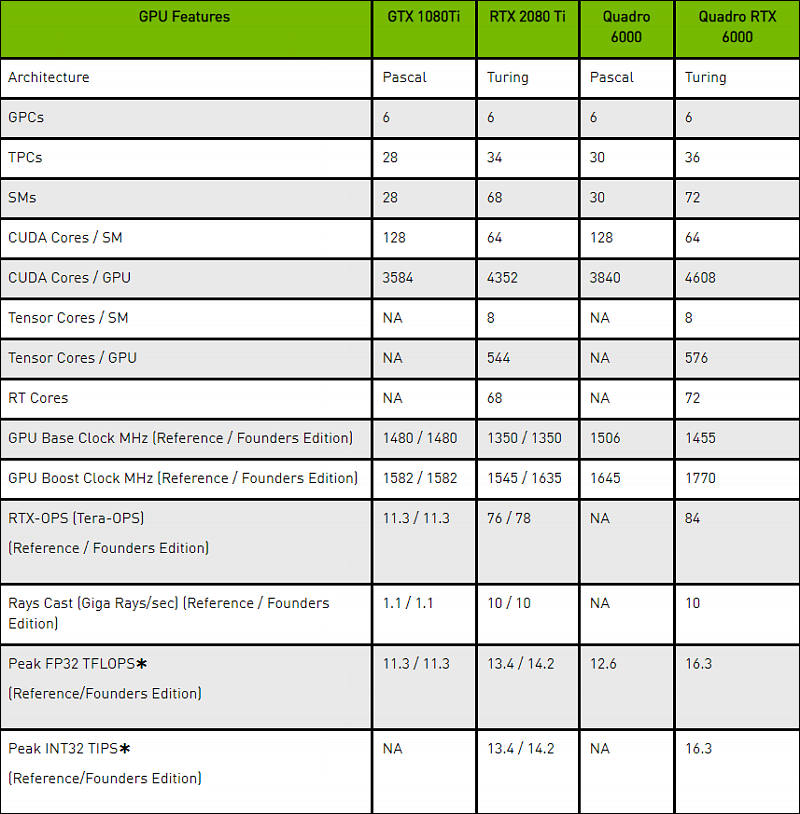

Funny numbers: Those INT32 numbers are total BS! (*)

Looks like they just feed 32-bit integers into the 32-bit floating-point unit, which is no problem (FP is more complicated), just to get a new entry into this "feature list" and "show" that the new cards are sooo much better. I bet the old ones can do that as well.

(* If the new cards can do INT32 and FP32 in parallel, each running at 13-16 TFLOPS at the same time, then I'm sorry and my post is BS... but I highly doubt it.)
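For context on where such TFLOPS figures come from: peak FP32 throughput is usually quoted as CUDA cores × 2 (a fused multiply-add counts as two operations) × boost clock. A sketch using 4352 cores and a 1545 MHz boost clock for the RTX 2080 Ti; both values are my assumptions from public reference specs, not taken from the chart above.

```python
def peak_fp32_flops(cuda_cores: int, boost_clock_mhz: int) -> int:
    """Peak FP32 FLOP/s, assuming each core retires one FMA (= 2 FLOPs) per clock."""
    flops_per_fma = 2
    return cuda_cores * flops_per_fma * boost_clock_mhz * 10**6


# Assumed RTX 2080 Ti reference specs.
peak = peak_fp32_flops(cuda_cores=4352, boost_clock_mhz=1545)
print(f"{peak / 1e12:.2f} TFLOPS")  # 13.45 TFLOPS
```

A separate INT32 figure of similar size only adds real throughput if the integer units run concurrently with the FP32 units, which is exactly the point disputed here.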

-

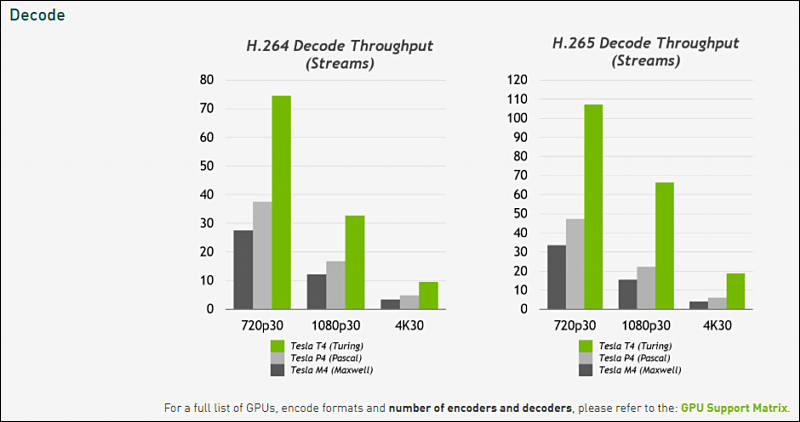

Architecture in depth

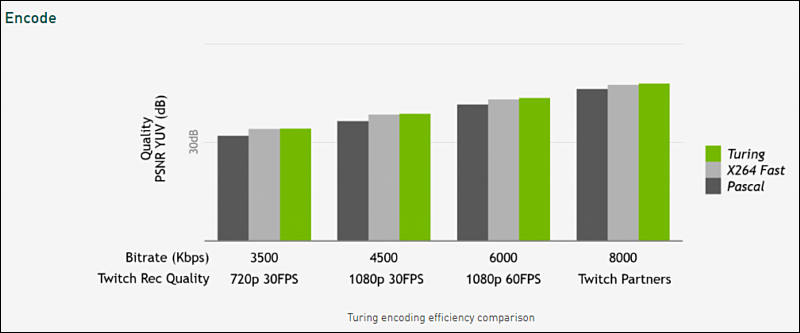

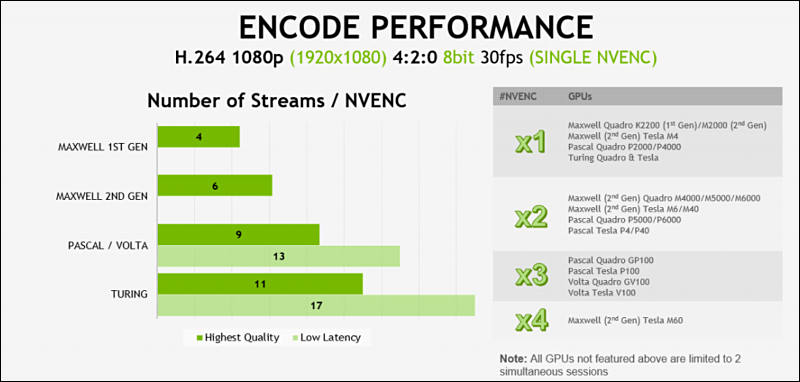

For video applications, the advantages seem to be minimal, apart from better encoders and HDR.

https://devblogs.nvidia.com/nvidia-turing-architecture-in-depth/
