-

AMD is expected to unveil its upcoming Vega-based graphics cards at this year’s Computex on 31st May.

The first card will be called the RX Vega Core and will start at $399. It will deliver performance on par with or better than Nvidia’s GeForce GTX 1070.

The RX Vega Eclipse will be priced at $499 and will compete head to head with the GTX 1080.

The RX Vega Nova will be the Big Vega, retailing at $599 and rivaling the GTX 1080 Ti.

http://digiworthy.com/2017/05/14/amd-rx-vega-lineup-fastest-vega-nova/

-

Decoding speed results added to:

- https://www.personal-view.com/talks/discussion/16925/h.264-decoding-and-nles#Item_4

- https://www.personal-view.com/talks/discussion/16921/hevc-decoding-and-nles#Item_15

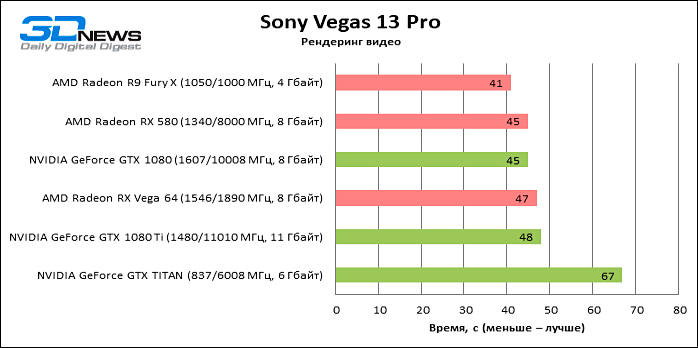

Vegas 13 shows the behavior of old NLEs, without any HW encoding and with little GPU support.

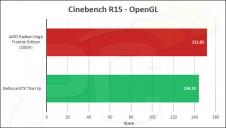

-

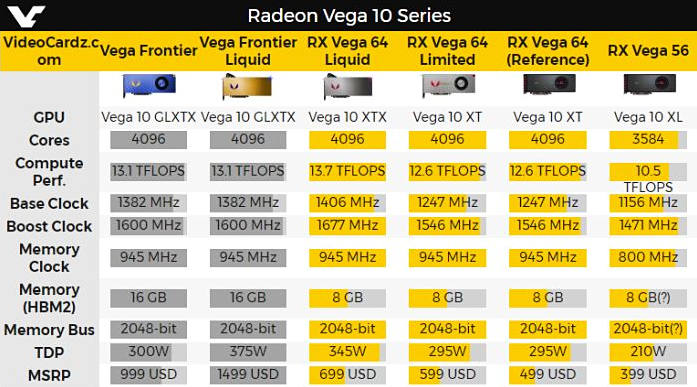

Finally some official info

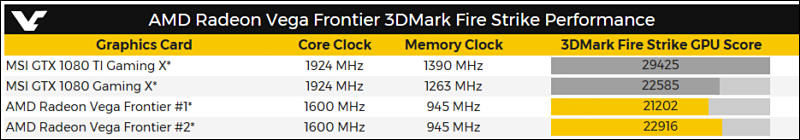

Performance will be slightly below the GTX 1080 for the Vega 64 and around the GTX 1070 for the Vega 56.

-

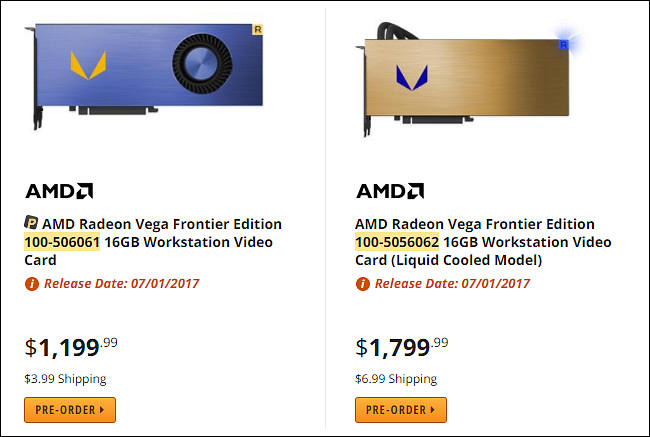

Preliminary info

- AMD Radeon RX Vega 64 1247/1546MHz -> $499;

- AMD Radeon RX Vega 64 Limited Edition 1247/1546MHz -> $549;

- AMD Radeon RX Vega 64 (Liquid cooler) 1406/1677MHz -> $599;

- AMD Radeon RX Vega 64 Limited Edition (Liquid cooler) 1406/1677MHz -> $649.

-

It may not be as bad as some initial reviews seem to show. It seems AMD is running these with a lot of voltage; users have managed to undervolt and maintain 1600 MHz clocks with relative ease.

https://www.reddit.com/r/Amd/comments/6n7etd/it_took_me_all_of_5_minutes_to_get_this_with_my/

With the more efficient cooler designs and better RX drivers we could end up seeing some decent performance at under 300W power draw. I'd be happy with a small improvement over Polaris architecture in terms of efficiency.

-

Would be nice to put the Vega in a custom loop. Then one could cool multiple Vegas at the same time, and also get a single-slot form factor.

-

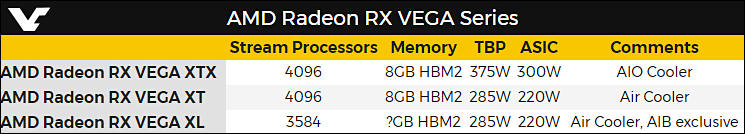

More news before announcement

AMD will be forced to drop clock rates, and the main cards will not be the top XTX.

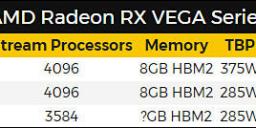

-

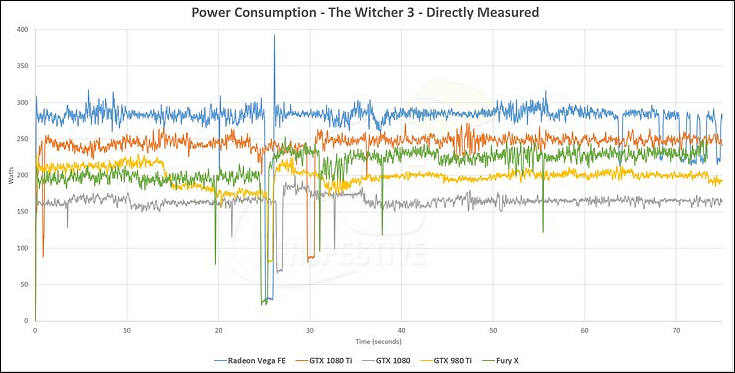

Power consumption

Games

-

Seems exactly like a scaled-up 580; performance per ACE is the same.

-

Yeah, it's definitely gaining notice from both managers and investors alike. I'm a little worried we could end up with a sharp divergence between gaming and mining cards in the future that results in the best silicon going toward mining.

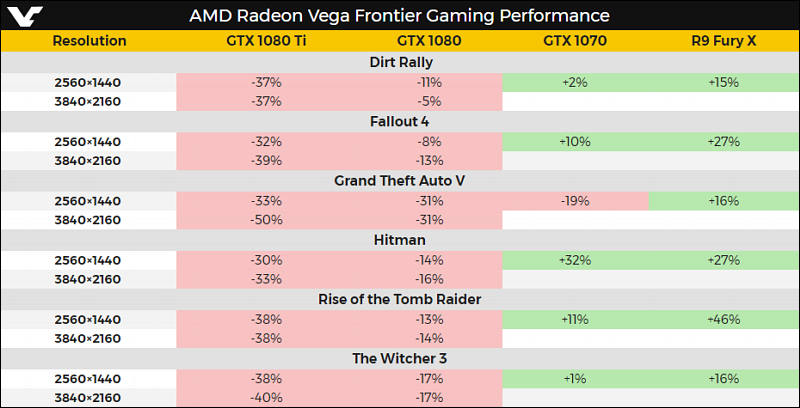

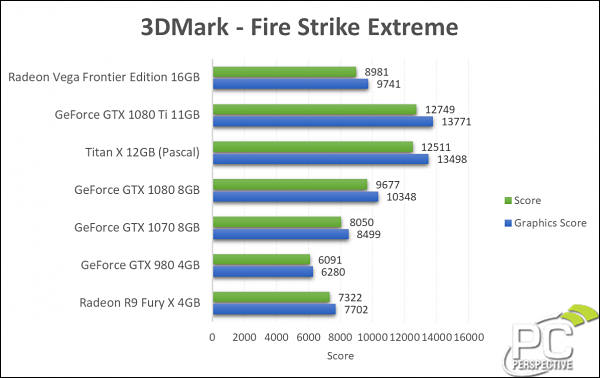

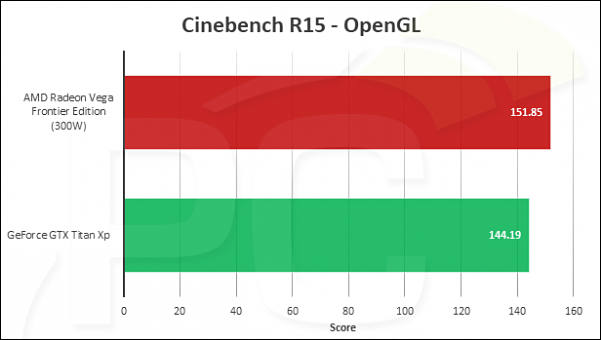

Here's the PC Perspective review of the Frontier Edition, with benchmarks: https://www.pcper.com/reviews/Graphics-Cards/Radeon-Vega-Frontier-Edition-16GB-Air-Cooled-Review

-

If you ask me, looking at GPU sales charts, it is quite clear that both companies have been very interested in the whole craze.

And I am sure they gave clear instructions to sponsored sites and channels (around 80% of all the big ones) to cover the crypto topic in a specific way.

-

I watched the PC Perspective Vega benchmark livestream and it was interesting to see how closely FE scales with last gen Fury X... practically clock for clock. There don't seem to be any noticeable architectural gains in the gaming benchmarks - unless AMD is holding back some amazing set of gaming drivers just for the RX Vega reveal.

The clocks hang at around 1440 MHz due to throttling on an open test bench in an air-conditioned room. Power draw is just under 300 watts. By comparison, the GTX 1080 has near identical FPS numbers while only using 180W of power.

Vega also doesn't appear to be particularly good at mining... barely faster than Polaris 10/12 but with exactly twice the power requirement.

A "4K card for $400" sales pitch seems like the best way to salvage this thing from a marketing standpoint. Otherwise folks without FreeSync displays may opt to pay the $499 for a GTX 1080 knowing they'll make up the difference in power savings in the long run.
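To put a rough number on "make up the difference in power savings", here is a back-of-the-envelope sketch. The $400 and $499 prices and the 300W and 180W draws are the figures quoted in this post (300W rounds up "just under 300 watts"); the electricity rate of $0.12 per kWh is purely my assumption, so plug in your own.

```python
def break_even_hours(price_gap_usd, power_gap_watts, usd_per_kwh):
    """Hours under load needed for the power savings to cover the price gap."""
    saving_per_hour = (power_gap_watts / 1000.0) * usd_per_kwh
    return price_gap_usd / saving_per_hour

price_gap = 499 - 400   # GTX 1080 price minus the hypothetical $400 Vega price
power_gap = 300 - 180   # watts under load, from the figures quoted above
rate = 0.12             # USD per kWh, an assumed electricity price

hours = break_even_hours(price_gap, power_gap, rate)
print(f"break-even after about {hours:.0f} hours under load")
```

With these assumed inputs the break-even point is about 6875 hours under load, or roughly 4.7 years at 4 hours of gaming a day. A higher electricity price or heavier use shortens that proportionally.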

AMD is lucky from the standpoint that many gamers are now in a wedge because essentially no capable new or used cards are available for less than $400. They will essentially be forced to buy an inefficient GPU if it is all that is available on the market. You couldn't engineer a better set of circumstances to help bail them out of this blunder.

-

@Vitaliy_Kiselev Ha... that does seem to be the new standard in Resolve benchmarks - Give us more blur FPS or that card is total shit. More blur and more grain!

-

Who knows.

One thing that I know is that guys will need to add another 20 nodes of extreme blur and such to DaVinci benchmarks :-) I mean to simulate "real" projects.

-

Sources say that the heat produced can exceed that of the famous 290X.

Gaming cards will consume 300-370W at stock, and a small overclock pushes that above 400W.

-

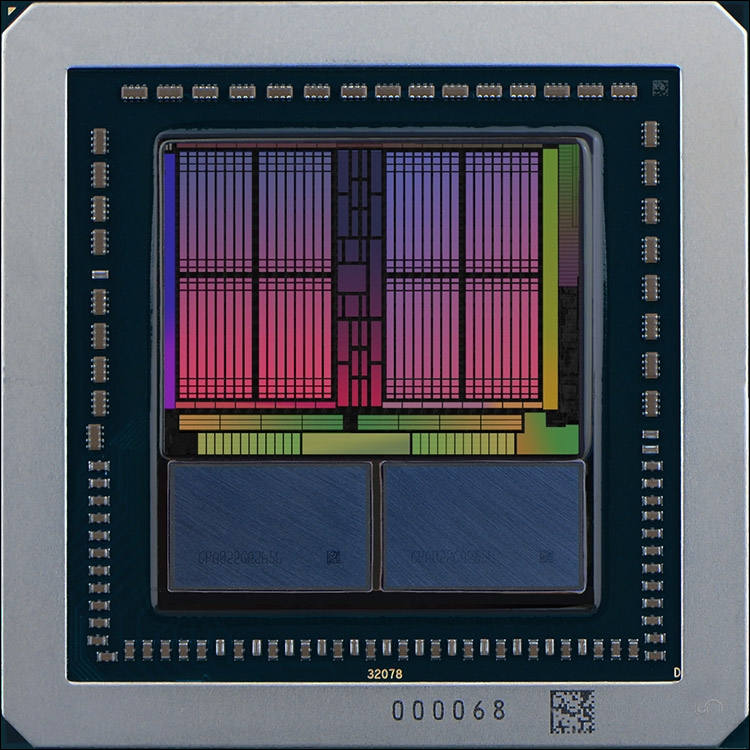

For many years GPUs have been optimized to process 32-bit floating point and integer data types, since these were best suited for standard 3D graphics rendering tasks. However as GPU workloads and rendering techniques have become more diverse, this one-size-fits-all approach is no longer always the best one. The processing units at the core of the “Vega” GPU architecture have been updated to address this new reality.

Next-Gen Compute Units (NCUs) provide super-charged pathways for doubling processing throughput when using 16-bit data types. In cases where a full 32 bits of precision is not necessary to obtain the desired result, they can pack twice as much data into each register and use it to execute two parallel operations. This is ideal for a wide range of computationally intensive applications including image/video processing, ray tracing, artificial intelligence, and game rendering.
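The packing idea can be illustrated in plain software. This is only a sketch of the concept, not how the Vega hardware or any AMD API works: the helper names `pack_half2`, `unpack_half2` and `packed_add` are made up for the example, and it uses Python's standard `struct` module with the half-precision `e` format.

```python
import struct

def pack_half2(a, b):
    """Pack two half-precision floats into one 32-bit word (little-endian)."""
    return struct.unpack("<I", struct.pack("<2e", a, b))[0]

def unpack_half2(word):
    """Recover the two half-precision values stored in a 32-bit word."""
    return struct.unpack("<2e", struct.pack("<I", word))

def packed_add(x, y):
    """Add two packed pairs lane by lane, emulating one two-wide operation."""
    x0, x1 = unpack_half2(x)
    y0, y1 = unpack_half2(y)
    return pack_half2(x0 + y0, x1 + y1)

# Two 16-bit values share one 32-bit "register"; one call handles both lanes.
r = packed_add(pack_half2(1.5, 2.0), pack_half2(0.25, 4.0))
print(unpack_half2(r))  # (1.75, 6.0)
```

The point is only that one 32-bit slot carries two values, so a single operation over the slot does two additions. The price is precision: a 16-bit float keeps about three significant decimal digits.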

New intelligent power management technologies adjust to your workload, providing thermal headroom to optimize peak performance and system acoustics. Advanced power agility features enable optimized performance during bursty workloads that are common in professional applications.
