-

Hi there,

Any recommendations on a workflow for broadcast? The sound source is a mono microphone.

At the moment I start by converting to stereo and then normalising; I am struggling with which effect to use for compression.
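The workflow described above (convert to stereo, then normalise) can be sketched in Python on float samples, where 1.0 is full scale. This is a minimal sketch and not what Audition does internally. The function names and the -1 dBFS target are assumptions chosen for illustration. Note that duplicating a mono signal only creates "dual mono": both channels carry identical samples.

```python
def mono_to_stereo(mono):
    """Duplicate a mono signal into two identical channels (dual mono).

    No new information is created; only the channel count changes.
    """
    return [(s, s) for s in mono]


def peak_normalize(frames, target_dbfs=-1.0):
    """Scale stereo frames so the loudest sample peaks at target_dbfs."""
    peak = max(max(abs(l), abs(r)) for l, r in frames)
    if peak == 0.0:
        return list(frames)  # digital silence: nothing to scale
    target_linear = 10 ** (target_dbfs / 20)  # dBFS -> linear amplitude
    gain = target_linear / peak
    return [(l * gain, r * gain) for l, r in frames]


if __name__ == "__main__":
    mono = [0.1, -0.25, 0.2, 0.05]  # hypothetical recording, peak 0.25
    stereo = peak_normalize(mono_to_stereo(mono), target_dbfs=-1.0)
    print(stereo)
```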

What I record is usually interviews in public places, which sometimes have background noise. On some occasions I also record live street music.

I'd love to hear your views. (I am a newbie with Audition and sound engineering.)

Thanks, Hakura

-

Compression - now that's a kettle of fish :)

First, a good read to understand what a compressor is and what it is used for: http://www.barryrudolph.com/mix/comp.html. Not all audio needs compressing. Depending on the end result required, riding the volume on the dialogue track (essentially what a compressor does for you) yields far fewer artifacts (pumping, clipped fronts of words, raised background noise) and more pleasant results. It should be the first port of call before you roll out the track slammer :)
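To see what a compressor is "riding" for you, here is a minimal Python sketch of a static hard-knee gain curve (the "gain computer" part of a compressor). The threshold and ratio values are arbitrary assumptions, and this illustrates the general technique, not any particular plug-in. A real compressor also has attack, release, knee and make-up gain, which this sketch leaves out.

```python
def compressor_gain_db(level_db, threshold_db=-20.0, ratio=3.0):
    """Gain change (in dB) a simple hard-knee compressor applies.

    Below the threshold the signal is untouched. Above it, every
    `ratio` dB of input rise produces only 1 dB of output rise.
    """
    if level_db <= threshold_db:
        return 0.0
    over = level_db - threshold_db
    return over / ratio - over  # negative value = gain reduction


if __name__ == "__main__":
    for level in (-30.0, -20.0, -11.0, -2.0):
        out = level + compressor_gain_db(level)
        print(f"{level} dBFS in -> {out} dBFS out")
```

With the assumed -20 dB threshold and 3:1 ratio, a peak at -2 dBFS comes out at -14 dBFS, while anything below -20 dBFS passes unchanged. Push the ratio up and lower the threshold, and loud and quiet parts converge: that is how dialogue ends up "flatter than a flat thing".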

Compressors used correctly can help, but they can also butcher dialogue if thrown on without a bit of planning. The best thing to do is experiment with your DAW's compressor(s) and A/B the dialogue regularly to make sure it's not becoming flatter than a flat thing. Leaving a bit of dynamics in the voice and squeezing gently can add density without pumping.

Lots of videos on YouTube, e.g.

Multiband compression is another story!

I'd skip the normalising, as that will instantly rob you of headroom, and save it (if using it at all!) until the end, when you have levelled out the elements in the mix.
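Why early normalising costs headroom can be shown with simple arithmetic. If two elements are each peak-normalised to 0 dBFS (a linear peak of 1.0) and their peaks happen to line up, their sum exceeds full scale and clips. A minimal Python sketch, assuming float samples where 1.0 is full scale and the worst case where the peaks coincide; the track values are hypothetical:

```python
import math


def headroom_db(samples):
    """Distance in dB between the highest peak and full scale (1.0).

    A negative result means the signal is over full scale and would clip.
    """
    peak = max(abs(s) for s in samples)
    return 20 * math.log10(1.0 / peak) if peak > 0 else math.inf


def mix(a, b):
    """Sum two tracks sample by sample, as a mix bus does."""
    return [x + y for x, y in zip(a, b)]


if __name__ == "__main__":
    voice = [0.0, 1.0, -0.5]  # hypothetical track normalised to 0 dBFS
    music = [0.0, 1.0, 0.2]   # hypothetical track normalised to 0 dBFS
    print(headroom_db(voice), headroom_db(music))
    print(headroom_db(mix(voice, music)))  # about -6.02 dB: clipping
```

Two tracks that each had 0 dB of headroom sum to a peak of 2.0, about 6 dB over full scale. Levelling the elements first and normalising (if at all) at the end avoids that.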

-

Thanks a lot soundgh2, this is indeed a good starting lesson for beginners. I am going to play with the settings now and will update here. (I actually started playing with multiband compression before posting here, so I will put that on hold.)

-

@soundgh2 This is a really nice explanation from someone who knows his stuff, both your text and the video. In the video you linked to, it's great to see someone using visuals and speaking in an engaging and concise way. Rare on YouTube!! It would have been lovely to hear the instruments and the effects on them, but that's a tiny niggle. I must go off and see what else he's uploaded.

-

Compression is the untameable beast, but used correctly it can help a lot. It's a hard art to learn, and you never stop learning new tricks with it: parallel compression, sidechain ducking, sidechain multiband ducking, sidetone triggering, etc. Endless! Everyone has their favourite hardware and software for the job. For me, I love the Chandler Abbey Road box; it's amazing and just makes stuff sound... better, lol. In software I use the C4/C6, Sonnox and Digi ones 90% of the time, after messing with all of 'em. There are too many, and most do sound close to the originals, but usually the people sat next to you are bored and want you to get on with it, mix and lay-off, lol.

Hardware boxes have their own life too: I have two 1176s that sound so different they could be completely different brands, and they're only three serial numbers apart. In the end, lend an ear to it; if you like it, result!

-

I actually rarely use compression, isn't that awful? I just split up the sound into objects and process each one individually. But I sometimes use "parallel" compression, mentioned above. Its attribution to "New York" engineers is extremely dubious, but whatever its origin, it involves setting up another set of tracks. I also often add really dense, really short reverb.
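Parallel compression blends the untouched (dry) signal with a heavily compressed copy of itself, which is what the extra set of tracks is for. A minimal Python sketch, assuming a per-sample static compressor with no attack or release (real compressors smooth the gain with an envelope follower) and arbitrary threshold, ratio and blend values:

```python
import math


def compress(samples, threshold_db=-30.0, ratio=8.0):
    """Very simplified per-sample hard-knee compressor (no attack/release)."""
    out = []
    for s in samples:
        level_db = 20 * math.log10(max(abs(s), 1e-12))
        if level_db > threshold_db:
            over = level_db - threshold_db
            gain_db = over / ratio - over  # gain reduction above threshold
            s *= 10 ** (gain_db / 20)      # positive factor keeps the sign
        out.append(s)
    return out


def parallel_compress(dry, wet_mix=0.5, **comp_settings):
    """Sum the dry signal with a compressed copy at `wet_mix` level."""
    wet = compress(dry, **comp_settings)
    return [d + wet_mix * w for d, w in zip(dry, wet)]


if __name__ == "__main__":
    print(parallel_compress([0.01, 1.0]))
```

With these assumed settings a quiet sample at 0.01 comes out 50% louder (about +3.5 dB), while a full-scale sample rises by only about 2.4%: quiet detail is lifted and the peaks are left mostly intact.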

-

Audition CC as a Loudness Correction Appliance

Hey guys, this is maybe a stupid question: would there be much of a difference between

A) pre-correcting and mixing to -23 LUFS, and

B) just normalizing (RMS) after the mix is done?
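Option B can be sketched as plain RMS normalisation: measure the RMS level in dBFS and apply one fixed gain so it hits a target. A minimal Python sketch; the -23 dBFS target is an assumption chosen to mirror the question. This is not the same measurement as -23 LUFS: EBU R128 loudness (per ITU-R BS.1770) applies a K-weighting filter and gating before averaging, so an RMS target of -23 dBFS will generally not land exactly on -23 LUFS.

```python
import math


def rms_dbfs(samples):
    """RMS level in dB relative to full scale (1.0); no weighting, no gating."""
    mean_square = sum(s * s for s in samples) / len(samples)
    if mean_square == 0.0:
        return -math.inf  # digital silence
    return 10 * math.log10(mean_square)


def rms_normalize(samples, target_dbfs=-23.0):
    """Apply one fixed gain so the overall RMS level equals target_dbfs."""
    level = rms_dbfs(samples)
    if level == -math.inf:
        return list(samples)  # nothing to scale
    gain = 10 ** ((target_dbfs - level) / 20)
    return [s * gain for s in samples]


if __name__ == "__main__":
    signal = [0.5, -0.5, 0.5, -0.5]  # RMS 0.5, about -6.02 dBFS
    out = rms_normalize(signal, target_dbfs=-23.0)
    print(round(rms_dbfs(out), 2))   # -23.0
```

To check the actual LUFS figure after either approach, measure with an R128 meter such as the r128x tool mentioned in this thread.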

Also, if I have to tame the waveform peaks, would a limiter be the tool to use? For context, I want to record system audio (e.g. internet radio), which already comes very compressed and "hot" (?)
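On the limiter question: a limiter is essentially a compressor with a very high ratio and a fast attack, used to hold peaks under a ceiling. A minimal Python sketch of a peak limiter with instant attack and smoothed release; the ceiling (0.89, about -1 dBFS) and release coefficient are assumptions. Real limiters also use look-ahead and oversampled true-peak detection, which this sketch leaves out.

```python
def peak_limit(samples, ceiling=0.89, release=0.999):
    """Keep every output sample at or below `ceiling` (linear, 1.0 = 0 dBFS).

    Attack is instant: the gain drops immediately on a peak. Release is
    smoothed: the gain recovers toward 1.0 a little on each sample.
    """
    gain = 1.0
    out = []
    for x in samples:
        # Largest gain that keeps this sample under the ceiling.
        allowed = ceiling / abs(x) if abs(x) > ceiling else 1.0
        if allowed < gain:
            gain = allowed  # instant attack
        else:
            # Smoothed release toward unity, never above what is allowed.
            gain = min(allowed, release * gain + (1.0 - release))
        out.append(x * gain)
    return out


if __name__ == "__main__":
    print(peak_limit([0.1, 1.5, -1.2, 0.5, 0.95]))
```

On material that is already heavily compressed and hot, a limiter like this mostly shaves off the few remaining peaks; it cannot restore dynamics that were squashed upstream.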

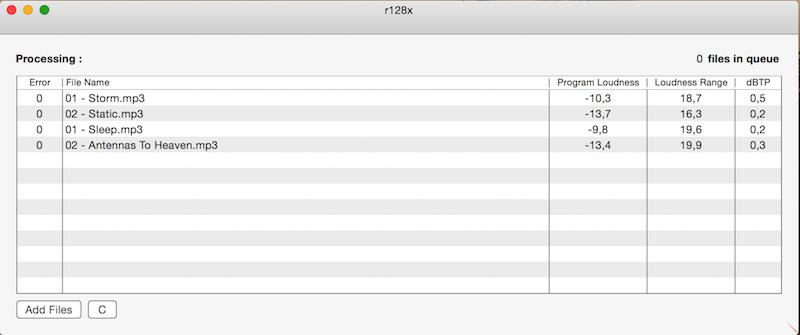

BTW, I found this open-source tool handy for batch-analyzing the loudness of files *-)

r128x, a tool for loudness measurement of files on Mac OSX Intel
