-

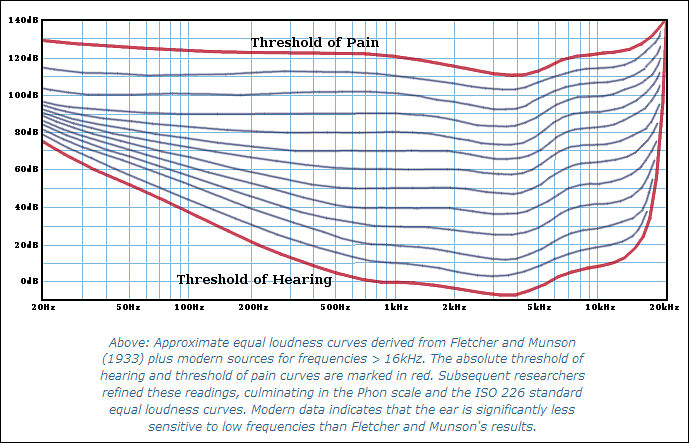

The upper limit of the human audio range is defined to be where the absolute threshold of hearing curve crosses the threshold of pain. To even faintly perceive the audio at that point (or beyond), it must simultaneously be unbearably loud.

At low frequencies, the cochlea works like a bass reflex cabinet. The helicotrema is an opening at the apex of the basilar membrane that acts as a port tuned to somewhere between 40 Hz and 65 Hz, depending on the individual. Response rolls off steeply below this frequency.

Thus, 20Hz - 20kHz is a generous range. It thoroughly covers the audible spectrum, an assertion backed by nearly a century of experimental data.

192kHz digital music files offer no benefits. They're not quite neutral either; practical fidelity is slightly worse. The ultrasonics are a liability during playback.

Neither audio transducers nor power amplifiers are free of distortion, and distortion tends to increase rapidly at the lowest and highest frequencies. If the same transducer reproduces ultrasonics along with audible content, any nonlinearity will shift some of the ultrasonic content down into the audible range as an uncontrolled spray of intermodulation distortion products covering the entire audible spectrum. Nonlinearity in a power amplifier will produce the same effect. The effect is very slight, but listening tests have confirmed that both effects can be audible.
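To make the intermodulation point concrete, here is a minimal sketch. It assumes a hypothetical weak second-order nonlinearity (y = x + 0.01·x²) standing in for an amplifier or driver, and two purely ultrasonic test tones at 30 kHz and 33 kHz sampled at 192 kHz. The coefficient and tones are illustrative choices, not measurements of real equipment, and `tone_amplitude` is a hypothetical single-bin DFT helper.

```python
import cmath
import math

FS = 192_000  # sample rate of a hypothetical 192 kHz playback chain
N = 1_920     # 10 ms window; bin spacing FS/N = 100 Hz, so every tone lands exactly on a bin

def tone_amplitude(x, freq):
    """Amplitude of the component at `freq` Hz, via a single-bin DFT."""
    k = round(freq * len(x) / FS)
    acc = sum(v * cmath.exp(-2j * math.pi * k * n / len(x)) for n, v in enumerate(x))
    return 2 * abs(acc) / len(x)

# Two purely ultrasonic tones (30 kHz and 33 kHz), each at half full scale.
t = [n / FS for n in range(N)]
clean = [0.5 * math.sin(2 * math.pi * 30_000 * ti) + 0.5 * math.sin(2 * math.pi * 33_000 * ti)
         for ti in t]

# Hypothetical weak second-order nonlinearity standing in for an amp or driver.
distorted = [v + 0.01 * v * v for v in clean]

print(f"3 kHz in clean signal:    {tone_amplitude(clean, 3_000):.2e}")
print(f"3 kHz after nonlinearity: {tone_amplitude(distorted, 3_000):.2e}")
```

Neither input tone is audible, yet the distorted output carries a 3 kHz difference tone (33 kHz minus 30 kHz) with amplitude 0.01 × 0.5 × 0.5 = 0.0025, roughly 46 dB below each input tone.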

Read more at http://people.xiph.org/~xiphmont/demo/neil-young.html

-

There are indeed surprising double-blind studies showing that people cannot tell the difference between dithered 44.1/16 and higher rates. The 192 kHz rate does have value when playing back both 44.1 kHz and 48 kHz lossless material on the same system. If the driver is run at 192 kHz, there is an audible transparency and smoothness in the breathy high frequencies of 44.1 kHz material (as compared with a 96 kHz driver). As for storage, there are good post filters, such as iZotope, which allow high-quality transfer between 48 and 44.1 kHz. The main reason to store in higher rates is to slow down audio in post, or to lessen the amount of 'work' required of the filter to go between 48 and 44.1. Otherwise, the higher-frequency data can literally cause problems.

-

If the driver is run at 192 kHz, there is an audible transparency and smoothness in the breathy high frequencies of 44.1 kHz material (as compared with a 96 kHz driver).

What do you mean? In principle, a good external USB DAC accepts a set of sample rates (as is true for S/PDIF), so everything will be fine at both 44.1 kHz and 48 kHz.

-

Indeed, all frequencies can be played back on the system; to preserve the full higher frequency detail, the finer peaks of the wave must be in phase with the playback speed, which depends on the quality of the filtering ('the magic'). On-the-fly syncing falls short of the best:

http://thenoisegroup.com/audio-tech-blog/incredible-tool-comparing-sample-rate-converters/

-

Indeed, all frequencies can be played back on the system;

Huh? Most speakers can't play "all frequencies". And most ears can't hear them either.

to preserve the full higher frequency detail, the finer peaks of the wave must be in phase with the playback speed,

What does this mean? It sounds like mumbo jumbo.

-

"Huh? Most speakers can't play "all frequencies". And most ears can't hear them either."

I meant the DACs you referred to (not the speakers). No ears can hear above 22 kHz, except for audible distortion caused by higher harmonics interacting.

"What does this mean? It sounds like mumbo jumbo."

When the playback rate is out of sync, the wave peak points are out of sync, as the playback sampling regularly falls between the peaks at the highest frequencies.

-

When the playback rate is out of sync, the wave peak points are out of sync, as the playback sampling regularly falls between the peaks at the highest frequencies.

Again, explain it more simply. Playback is out of sync with what? Wave peak points are out of sync with what? And what does "playback sampling regularly falls between the peaks at the highest frequencies" mean?

-

To fully capture a wave, two points per cycle must be recorded: the crest (peak) and the trough (low point): http://9-4fordham.wikispaces.com/file/view/wave_crest.gif/243891203/wave_crest.gif

Thus, the sampling frequency, or how often a recording 'checks in' to take a sample, must be more than twice the highest frequency of the wave that is to be captured. [On another loosely related note, the bit depth describes the resolution of the height dimension of that sample, or amplitude.]
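The "more than twice" rule can be checked numerically. This is a minimal sketch (the function name `alias_frequency` is hypothetical) showing that a tone above half the sample rate does not simply vanish: it folds back into the band below fs/2.

```python
import math

def alias_frequency(f, fs):
    """Frequency (Hz) at which a pure tone `f` appears after sampling at `fs`.
    Tones at or below fs/2 are unchanged; higher ones fold back into 0..fs/2."""
    return abs(f - fs * round(f / fs))

FS = 44_100
print(alias_frequency(20_000, FS))  # below Nyquist (22,050 Hz): stays at 20000
print(alias_frequency(30_000, FS))  # above Nyquist: folds to 14100

# Sampled at 44.1 kHz, a 30 kHz cosine and a 14.1 kHz cosine give identical samples.
a = [math.cos(2 * math.pi * 30_000 * n / FS) for n in range(100)]
b = [math.cos(2 * math.pi * 14_100 * n / FS) for n in range(100)]
print(max(abs(x - y) for x, y in zip(a, b)) < 1e-9)  # True
```

Once sampled, the two tones cannot be told apart, which is why content above fs/2 has to be filtered out before sampling rather than after.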

But what happens if the rate of playback is fractionally different from the rate that the recording was sampled at? That is, not a clean division like a half (.5), but something like .918? The audio will alias. And thus a filter is required to get clean results; as with a bad OLPF (optical low-pass filter), if the filter is not good, the higher frequencies (like high optical detail) will suffer: http://en.wikipedia.org/wiki/Anti-aliasing_filter
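The ".918" figure matches 44.1/48 = 0.91875. A common way to do such a conversion is rational (polyphase) resampling: upsample by L, low-pass filter, downsample by M. The sketch below, with a hypothetical helper `src_plan`, only works out L, M and the ideal cutoff; a real converter also needs a transition band below that cutoff, which is where filter quality comes in.

```python
from fractions import Fraction

def src_plan(fs_in, fs_out):
    """Rational resampling plan: upsample by L, low-pass, downsample by M."""
    ratio = Fraction(fs_out, fs_in)       # automatically reduced to lowest terms
    cutoff = min(fs_in, fs_out) / 2       # ideal anti-aliasing cutoff for the output
    return ratio.numerator, ratio.denominator, cutoff

L, M, cutoff = src_plan(48_000, 44_100)
print(L, M, cutoff)           # 147 160 22050.0
print(float(Fraction(L, M)))  # 0.91875

# A 23 kHz component is legal at 48 kHz (Nyquist 24 kHz) but not at 44.1 kHz.
# If the filter fails to remove it, it folds back to 44,100 - 23,000 = 21,100 Hz.
f, fs = 23_000, 44_100
print(abs(f - fs * round(f / fs)))  # 21100
```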

-

But what happens if the rate of playback is fractionally different from the rate that the recording was sampled at? That is, not a clean division like a half (.5), but something like .918? The audio will alias. And thus a filter is required to get clean results; as with a bad OLPF (optical low-pass filter), if the filter is not good, the higher frequencies (like high optical detail) will suffer

Huh. The problem is that you are going back to the recording and mastering side of things. For the finished music it makes little sense. In recording it is very common to use 96 kHz and up at 24 bits, and for good reasons. The whole article in the link is about different things.

-

Yes, I agree.

"The main reason to *store (record) in higher rates is to slow down audio in post, or to lessen the amount of 'work' required of the filter to go between 48 and 44.1. Otherwise, the higher-frequency data can literally cause problems."

A good note in the anti-aliasing article: "The purpose of oversampling is to relax the requirements on the anti-aliasing filter, or to further reduce the aliasing. Since the initial anti-aliasing filter is analog, oversampling allows for the filter to be cheaper because the requirements are not as stringent, and also allows the anti-aliasing filter to have a smoother frequency response, and thus a less complex phase response."
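A rough worked example of why oversampling relaxes the analog filter. Assumptions (not from the article): the passband must stay flat to 20 kHz, the filter must reach 96 dB of attenuation by fs/2, and each analog pole contributes about 6 dB/octave. `required_slope` is a hypothetical helper.

```python
import math

PASSBAND_EDGE = 20_000  # Hz, top of the audio band we want to keep flat
ATTENUATION_DB = 96     # assumed target: roughly 16-bit dynamic range

def required_slope(fs):
    """Average analog filter slope (dB/octave) needed to fall from the passband
    edge to ATTENUATION_DB by the Nyquist frequency fs/2 (a conservative target)."""
    octaves = math.log2((fs / 2) / PASSBAND_EDGE)
    return ATTENUATION_DB / octaves

for fs in (44_100, 4 * 44_100):
    slope = required_slope(fs)
    # Each analog pole contributes about 6 dB/octave well past its corner.
    print(f"fs = {fs:>6} Hz: {slope:6.1f} dB/octave, roughly {math.ceil(slope / 6)} poles")
```

At 44.1 kHz the filter has only about 0.14 octave to work with (roughly 680 dB/octave, well over a hundred poles); at 4× oversampling it has about 2.1 octaves (roughly 45 dB/octave, around eight poles), which is the "cheaper, smoother" filter the quote describes.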

For film dialogue, though, the recording standard is 24-bit/48 kHz, since slowdown and export to the Red Book standard are not expected.

-

The purpose of oversampling is to relax the requirements on the anti-aliasing filter, or to further reduce the aliasing.

The article I linked to also explains that all modern DACs use oversampling. Many receivers oversample as well (on some it can be switched on or off; on others it is always on, as the DSP requires it).

-

Yes, the article is excellent.

-

This only matters when performing math operations on the audio files: higher precision means lower loss during filtering, etc.
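A minimal sketch of "higher precision for lower loss". It assumes a hypothetical edit chain (cut the gain by 0.7, then restore it, ten times) with the result requantized to a fixed word length after every operation, and compares a 16-bit with a 24-bit working word. `quantize` and `process` are illustrative helpers, not any DAW's actual signal path.

```python
import math

def quantize(x, bits):
    """Round x (full scale = +/-1.0) to the nearest step of a signed `bits`-bit word."""
    scale = 2 ** (bits - 1)
    return round(x * scale) / scale

def process(signal, bits, passes=10, gain=0.7):
    """Hypothetical edit chain: cut then restore gain `passes` times,
    requantizing to `bits` after every operation (as a fixed-width bus would)."""
    out = [quantize(s, bits) for s in signal]
    for _ in range(passes):
        out = [quantize(s * gain, bits) for s in out]
        out = [quantize(s / gain, bits) for s in out]
    return out

signal = [0.5 * math.sin(2 * math.pi * n / 97) for n in range(1000)]

for bits in (16, 24):
    result = process(signal, bits)
    err = max(abs(a - b) for a, b in zip(result, signal))
    print(f"{bits}-bit chain: worst error {err:.2e} ({err * 2 ** (bits - 1):.1f} LSB)")
```

Both chains accumulate a few LSBs of rounding error, but an LSB at 24 bits is 256 times smaller, so the damage stays far below the 16-bit delivery format.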

It's also best to use either 24/44.1k or 24/88.2k, so that your operations don't take extra steps only to truncate the words to fit the buses within the computer system.

-

You have to realise that when playing back a CD, the amplifier is usually set so that the quietest sounds on the CD can just be heard above the noise floor of the listening environment (sitting room or cans). So if the average noise floor for a sitting room is say 50dB (or 30dB for cans) then the dynamic range of the CD starts at this point and is capable of 96dB (at least) above the room noise floor. If the full dynamic range of a CD were actually used (on top of the noise floor), the home listener (if they had the equipment) would almost certainly cause themselves severe pain and permanent hearing damage. If this is the case with CD, what about 24-bit hi-res? If we were to use the full dynamic range of 24-bit and a listener had the equipment to reproduce it all, there is a fair chance, depending on age and general health, that the listener would die instantly. The most fit would probably just go into a coma for a few weeks and wake up totally deaf. I'm not joking or exaggerating here; think about it: 144 dB plus, say, 50 dB for the room's noise floor. Yet 180 dB is the figure often quoted for sound pressure levels powerful enough to kill, and some people have been killed by 160 dB.

:-)
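The 96 dB and 144 dB figures in the quote follow from the ratio of full scale to one quantization step, 20·log10(2^bits). A minimal sketch of that arithmetic, reusing the quote's assumed 50 dB room noise floor; `dynamic_range_db` is a hypothetical helper.

```python
import math

def dynamic_range_db(bits):
    """Ratio of full scale to one quantization step, in dB: 20*log10(2**bits)."""
    return 20 * math.log10(2 ** bits)

ROOM_NOISE_DB = 50  # the quote's "say 50dB" sitting-room noise floor

for bits in (16, 24):
    dr = dynamic_range_db(bits)
    print(f"{bits}-bit: {dr:.1f} dB range, peaks at ~{ROOM_NOISE_DB + dr:.0f} dB SPL")
```

This gives about 96.3 dB for 16 bits and 144.5 dB for 24 bits, so peaks would land near 146 dB and 194 dB SPL under the quote's setup.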

-

Great article. However, I don't know anybody who sets their amplifier the way suggested in the last quote you posted:

You have to realise that when playing back a CD, the amplifier is usually set so that the quietest sounds on the CD can just be heard above the noise floor of the listening environment (sitting room or cans). So if the average noise floor for a sitting room is say 50dB (or 30dB for cans) then the dynamic range of the CD starts at this point and is capable of 96dB (at least) above the room noise floor.

Most people seem to set their amplifier so that the loudest sounds are below a determined threshold which varies according to the situation. For example, night club and rock concert operators seem to set things as close to the physical pain threshold as possible (and often above the threshold for hearing damage). Apartment dwellers throwing a party crank things to just below the threshold where their neighbors will call the police. "Background" music will be amplified to a point where the loudest sounds still won't overpower conversation. Etc.

The quietest sounds on the CD will therefore inevitably fall well beneath the noise floor of the listening environment, even assuming the full dynamic range of a CD were being utilized. However, most mastering these days (see "loudness wars") seems to aim for reduced dynamic range such that even the quietest portions of the music are only slightly quieter than the loudest.

Anybody who actually set their amplifier as suggested here and played back any commercially produced CD would destroy their equipment and/or hearing within seconds.

-

However, most mastering these days (see "loudness wars") seems to aim for reduced dynamic range such that even the quietest portions of the music are only slightly quieter than the loudest. Anybody who actually set their amplifier as suggested here and played back any commercially produced CD would destroy their equipment and/or hearing within seconds.

I think you have a logical flaw here. Most modern CDs have reduced dynamic range due to compression (we have a post about it here; use search). So such a setting won't cause any problem.

The statement in the quote is mostly correct, as the whole loudness war started (on a mass scale) as an answer to high background noise levels.

-

I have to disagree that the loudness war started as an answer to background noise levels. It started out of continuing competition for outstanding sound compared to other companies, and the fact that human hearing perceives louder sounds as being "better", or at least more sonically full (we hear more frequencies more evenly at louder volumes).

With digital, we have quieter noise floors than ever, and the loudness war has been getting worse.

-

It started out of continuing competition for outstanding sound compared to other companies, and the fact that human hearing perceives louder sounds as being "better", or at least more sonically full (we hear more frequencies more evenly at louder volumes)

Please read it carefully. On a mass scale it started with people going mobile, into high background noise. In such listening conditions you MUST use compression, as keeping the dynamic range either kills the music even more or kills someone's hearing.

While it is perfectly true that we like louder sound more, and that compressed sound was already widely used before mobile players (though not on a mass scale), another main reason here is radio stations that wanted to sound better... in cars.

-

I would say more compressed sound was already pretty popular before mobile devices like iPods and phones became commonplace. Load a commercial track from the 80s, then load a commercial track from the 90s... that's probably the most drastic difference you'll see, and it came a bit before mobile really took off.
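The difference you see when loading tracks side by side is often summarized as crest factor, the peak-to-RMS ratio. A minimal sketch, assuming a crude boost-then-clip stage (the hypothetical `hard_limit`) as a stand-in for loud mastering and a plain sine as the test signal; real mastering chains and real music behave more subtly.

```python
import math

def crest_factor_db(x):
    """Peak-to-RMS ratio in dB; heavy compression/limiting pushes this down."""
    peak = max(abs(v) for v in x)
    rms = math.sqrt(sum(v * v for v in x) / len(x))
    return 20 * math.log10(peak / rms)

def hard_limit(x, drive=4.0):
    """Crude stand-in for loudness-war mastering: boost, then clip at full scale."""
    return [max(-1.0, min(1.0, v * drive)) for v in x]

tone = [math.sin(2 * math.pi * n / 100) for n in range(10_000)]
print(f"clean sine:   {crest_factor_db(tone):.2f} dB")
print(f"limited sine: {crest_factor_db(hard_limit(tone)):.2f} dB")
```

The clean sine sits at about 3.01 dB (a factor of √2); the clipped version approaches a square wave and its crest factor drops toward 0 dB, the "sausage" waveform look of heavily limited releases.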

I misunderstood when you said noise floor; I thought you meant the noise floor of the track/recording itself. I agree that you do need more compression for different environments. It's like watching a movie on an airplane vs in a theatre: you definitely want a lot more dynamic range in one and not the other.

-

Mobile listening started with the Walkman (http://en.wikipedia.org/wiki/Walkman) and car/mobile radios, not the iPod :-)

-

@vitaliy The very high sampling rates make most sense to male hearing.

As a very simple experiment, if you have someone stand 2 metres behind you and click their fingers, most men can detect when the clicking has moved about 30 cm. If you do a little trig and math, you find that the time difference between the click arriving at the left and right ears is very small; double it for Nyquist and 2 MHz sampling rates start to make sense.

The time difference is 0.0000657 seconds.
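The "little trig and math" can be reproduced under simple assumptions that are not stated in the post: ears modelled as two points 15 cm apart, speed of sound 343 m/s, no head shadowing or diffraction. `itd` is a hypothetical helper. The sketch also prints sample periods at two common rates for comparison.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s, air at about 20 degrees C
EAR_SPACING = 0.15      # m, assumed distance between the ears (point-ear model)

def itd(source_x, source_y):
    """Interaural time difference (s) for a source at (x, y) metres, with the
    ears at (+/-EAR_SPACING/2, 0). Ignores head shadowing and diffraction."""
    left = math.hypot(source_x + EAR_SPACING / 2, source_y)
    right = math.hypot(source_x - EAR_SPACING / 2, source_y)
    return (left - right) / SPEED_OF_SOUND

before = itd(0.0, -2.0)  # 2 m directly behind: equal paths, ITD = 0
after = itd(0.3, -2.0)   # moved 30 cm sideways
print(f"ITD change: {abs(after - before) * 1e6:.1f} microseconds")

for fs in (44_100, 192_000):
    print(f"sample period at {fs} Hz: {1e6 / fs:.1f} microseconds")
```

With these assumptions the change comes out near 65 microseconds, in the same range as the 0.0000657 s quoted; the sample periods are about 22.7 and 5.2 microseconds.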

It is believed that one of the reasons that good vinyl can sound better than CD is that the timing differences between the left and right channels are better preserved.

-

As a very simple experiment, if you have someone stand 2 metres behind you and click their fingers, most men can detect when the clicking has moved about 30 cm. If you do a little trig and math, you find that the time difference between the click arriving at the left and right ears is very small; double it for Nyquist and 2 MHz sampling rates start to make sense.

LOL.

I hope you are joking. It is not true. Your statements include very bad logic and math defects, and a misunderstanding of how localization works.

First, click on the article and read how our ears work; you can check the medical literature after that.

Second, put "HRTF" into Google and spend some time reading what it is. In short, time difference is not the only cue used for localization.

Third, regarding time difference: the huge flaw is that you mixed everything together without properly understanding what it means, and drew a "shocking" conclusion. I hope that after reading, the flaw in mixing the wrong things will become clear.

It is believed that one of the reasons that good vinyl can sound better than CD is that the timing differences between the left and right channels are better preserved

You are joking here again :-)

Try reading how a vinyl player works; it will become obvious why it always sounds inferior to any good CD player (by "good" I do not mean "high cost", just properly designed).

-

Where I wasn't clear: my point is that 2 Mb/s DSD starts to make sense, whereas 192/24 is about 9.2 Mb/s (stereo).
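The bit-rate figures are straightforward arithmetic: sample rate × bits per sample × channels. A minimal sketch (the helper `pcm_bitrate` is hypothetical) applying it to CD, 192/24 and DSD64, whose 1-bit stream runs at 64 × 44.1 kHz = 2.8224 MHz.

```python
def pcm_bitrate(fs, bits, channels=2):
    """Raw (uncompressed) bit rate in bits per second."""
    return fs * bits * channels

DSD64_RATE = 64 * 44_100  # 2,822,400 samples/s, 1 bit per sample per channel

formats = {
    "CD 44.1/16": pcm_bitrate(44_100, 16),
    "192/24": pcm_bitrate(192_000, 24),
    "DSD64": pcm_bitrate(DSD64_RATE, 1),
}
for name, bps in formats.items():
    print(f"{name:>10}: {bps / 1e6:.4f} Mb/s stereo, {bps / 2e6:.4f} Mb/s per channel")
```

That gives 1.4112 Mb/s for CD, 9.216 Mb/s for 192/24 and 5.6448 Mb/s for DSD64 (2.8224 Mb/s per channel).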

Your link in a step leads me to: http://en.wikipedia.org/wiki/Interaural_time_difference

Which suggests that either time or phase is being detected: time for impulses and phase for tones, with level differences taking over above about 1500 Hz (ITD and ILD). A click is an impulse.

I'm of the belief that the high sample rates help to preserve the time and phase differences that we can detect. The previous assertions have been about volume and hearing frequency range.

The whole clicking experiment was in 'Audio World' several years ago and out of interest I tried it for myself.

Your link also leads to https://www.meridian-audio.com/ara/coding2.pdf, in which Bob Stuart says:

"Other listening tests witnessed by the author have made it quite clear that the sound quality of a chain is generally regarded as better when it runs at 96kHz than when it runs at 48kHz, and that the difference observed is ‘in the bass’. "

This ties in with a lecture by Richard Lord that I attended a good few years ago. Bob Stuart's conclusions are on page 19; the rough synopsis is that 58K/20bit

I have vinyl, CDs, DSDs, SACDs and DVD-As at home. I agree that vinyl struggles against CD in many aspects. I have a 1960 recording of 'Take-5' which was transcribed from 30 ips tape to DSD, which shows me that great audio was available back then. I also have an SACD of a Police recording (Sting, Summers, Copeland) which I find very unpleasant to listen to.

From my diving medical I know that I have a hearing notch at 12 kHz and tinnitus, which by rights should give me pretty poor hearing on a 'golden ear' basis. Therefore I can't argue that much!

-

HRTF - http://en.wikipedia.org/wiki/Head-related_transfer_function

I'm of the belief that the high sample rates help to preserve the time and phase differences that we can detect. The previous assertions have been about volume and hearing frequency range.

Again, the nature of our ears does not allow this :-)

The whole clicking experiment was in 'Audio World' several years ago and out of interest I tried it for myself.

OK. In that case, lay out the math and logic here step by step.

Other listening tests witnessed by the author have made it quite clear that the sound quality of a chain is generally regarded as better when it runs at 96kHz than when it runs at 48kHz, and that the difference observed is ‘in the bass’.

Double-blind tests unfortunately show that these guys can't detect 96 kHz recordings :-)

-

@sammy Order of importance: talent/performance, acoustics/mic placement, microphone, preamp, converters.

Of course, sometimes talent, performance, and acoustics cannot be under the engineer's complete control, so... yeah, I guess I'd start out with a good mic.

-

@Vitaliy I'm not sure what you mean by the whole Walkman thing. Didn't the earlier Walkmans and portable CD players play at higher resolution than the MP3 movement?

I wasn't saying it started with the iPod... I was just giving that as a popular example.
