-

I've been using the LA7200 Anamorphic Adapter for several months now and I really like it. Initially, I did some reading and posting around and ended up with an un-stretch workflow consisting of lowering the vertical scale to 76 in FCPX, which looks right and incidentally matches non-anamorphic footage cropped to 2.39:1 using the 'widescreen' or '2.35:1' checkbox in plugins like CineLook and ClassyLook.

There isn't a lot of NLE-specific information out there on how to properly un-squeeze, and Voldemort's ebook was a starting point for the math that led to the above solution. There are some assumptions about sharpness and the way one un-stretches that seem a bit quirky or hard to follow, however.

All of my grabs and little Vimeo tests and things up to this point have been done that way and I am happy with the results. However, I'm soon going to be starting the edit on a little feature, and due to my geographic location I have a pretty solid chance of some manner of theatrical projection, so I want to start from the ground up with that in mind in the edit.

A couple of days ago I tried what I thought might be a more 'proper' workflow, which was to run GH2 files through 5D2RGB and then through Compressor, changing the pixel aspect ratio to 1.333. The result was a clip that looked correct, but when I put it in FCPX, I noticed it was slightly taller than my previous efforts. It matched other clips only if I changed their vertical scale to 80 instead of 76. At this height, however, neither matches the 'widescreen' button from the plugins, which I take to be a guide for 2.39:1.

I'm wondering which is 'correct' and/or what am I doing wrong, in every case. Obviously, slight variations in squeeze may pass essentially unnoticed, but there must be a 'proper' mathematically correct process for this.

I also at some point of course need to come up to 2k resolution to meet the DCP standard. It's not a huge jump but I'm wondering if I should incorporate that into my initial workflow as well. There were some posts on the BMC forum recently about uprezzing to 4k that were quite illuminating; it seems that the method and amount of color information can play a big role in how well this is accomplished. I've uprezzed GH2 frames to 4k in Photoshop with pretty good results, so honestly I'm not too worried about the jump from 1920 to 2048. Still, I don't know if FCPX is enough, or if Compressor might be better, and/or if both the un-squeeze and up-res can be accomplished at once?

Right now I'm looking at:

1.) High quality intra hacked .mts files into 5D2RGB, outputting ProRes 422HQ (unless we think for my later purposes 444 would be better?)

2.) Where to go from here, for un-squeeze and up-res?

Any help would be greatly appreciated. I believe the anamorphic part of the equation should have a more definitive answer than the net seems to provide.

-

The pixel aspect ratio change likely was showing you the correct aspect ratio for the first time. References to this adapter creating 2.35:1 or 2.39:1 frames once un-squeezing the 16:9 frame are incorrect assumptions. Truthfully, I don't know how they ever got started when the math is right there:

1920*1.33 = 2553.6 ...so... 2554 / 1080 = 2.3648

At this point, depending on where you choose to round and how far you carry the decimals, you're looking at either 2.36:1 or 2.37:1 which means a frame height of either 810 or 814 if you reduce to fit on a standard HD frame. For a reduction to a standard 2K frame you're looking at something more like 2048 x 868 for the scope area. Make no mistake though, you're reducing, not up-rezzing.
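The arithmetic above can be checked with a few lines of Python. This is only a sketch: the function names are made up for illustration, and the 1.33 squeeze factor is taken at face value from the post rather than measured from the adapter.

```python
def unsqueezed_geometry(width=1920, height=1080, squeeze=1.33):
    """Return (unsqueezed_width, aspect_ratio) for a squeezed frame."""
    full_width = round(width * squeeze)  # 1920 * 1.33 = 2553.6, rounded to 2554
    return full_width, full_width / height


def fitted_height(target_width, aspect_ratio):
    """Height of the picture area when the image is scaled to target_width."""
    return round(target_width / aspect_ratio)


full_width, aspect = unsqueezed_geometry()
print(full_width, round(aspect, 4))   # 2554 2.3648

# Rounding the ratio first gives the heights quoted in the post.
print(fitted_height(1920, 2.37))      # 810
print(fitted_height(1920, 2.36))      # 814
print(fitted_height(2048, 2.36))      # 868

# Carrying the unrounded ratio through lands in between.
print(fitted_height(1920, aspect))    # 812
print(fitted_height(2048, aspect))    # 866
```

The spread between 810 and 814 (or 866 and 868) comes purely from where the rounding happens, not from any difference in the footage.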

I wouldn't suggest doing any of this prior to editing though. You'd just be making the whole process less efficient. I would assume FCP could interpret the footage on the fly like you can in CS6 products. There's really no reason to hard-code or bake in the un-squeeze at any point during the process. Let the software work for you. With 1.33x footage, though, there's really no hardship in viewing squeezed editorial, even if for some reason FCP had such an oversight as to not allow you to re-interpret footage on the fly.

I've shifted my own post pipeline for anamorphic so that I'm always working with squeezed files, which is consistent with how digital post is conducted on anamorphic film projects. Maintaining the footage as "squeezed" for as long as possible in the workflow keeps the data more manageable and keeps the quality intact. I only generate a non-anamorphic version when creating a version for upload to the web. In your case this would be in the final render to the DCP JPEG 2K files.

edit: TLDR version, there's no reason to have a 2K workflow, stick with 1920x1080 squeezed files clear through to final grade renders. Then you'll have a version that you can conform at the highest quality to whatever you need and you don't unnecessarily bog down editing or finishing and you never degrade your image quality.

-

Thank you for the informative reply as usual, @BurnetRhoades. I somehow just clicked away from several paragraphs of response after thinking I hit 'post reply' so I'm going to try again, haha.

Leaving aside for the moment the order in which things are done, I want to clarify a few points.

We're saying that we're really getting closer to the old 2.35:1 scope than the modern 2.40:1, right, which explains why the plugins that have a 'widescreen' button for non-anamorphic footage to be cropped are slightly wider than my 'native' pixel aspect ratio change footage with the adapter. This is 'ok', though; with a feature shot 80% with a 1.33x adapter, there is no reason to use anything other than its 2.36:1 or 2.37:1 ratio, right? Just crop the non-anamorphic footage properly? As long as my horizontal dimensions are correct, any projector will take the footage?

Now, when it comes to the down-res...when I run my 1920x1080 squeezed footage through a pixel aspect ratio change, I get widescreen footage that looks correct, but the dimensions are still 1920x1080. It just changes the quality of the pixels, not the numbers. If I really am getting 2554 somehow natively I would think there is a distinct advantage to unpacking it in that manner and down-rezing. Would simply changing the scale properly in FCPX from a 2k timeline accomplish that result? Or is there another way to properly 'unpack' the footage, whether at the beginning or end of the process? Where do I view this 2554x1080 widescreen footage? How do I get it? You can explain to me in Premiere terms as well.

As far as the order of all of this, I'll be in a suite on Maui with a lot of power, and I am not skilled in relinking or proxy editing. It seems like a lot to put on the NLE to come up to 2k and unsqueeze and encode all in one step at the very end. If we don't bat an eye at the storage requirements and it doesn't slow us down, are you still suggesting a quality loss? I've been viewing dailies as they are squeezed and am used to it, but we'll be projecting during the edit over there and it won't just be me, I think viewing things as close to the final format as possible will help.

Sorry for the length and repetition, but this is very helpful and there isn't that much central information on all of this. Perhaps because in the film world one never 'un-squeezed'...it was just a lens in front of the projector if I understand correctly?

-

We're saying that we're really getting closer to the old 2.35:1 scope than the modern 2.40:1, right, which explains why the plugins that have a 'widescreen' button for non-anamorphic footage to be cropped are slightly wider than my 'native' pixel aspect ratio change footage with the adapter. This is 'ok', though; with a feature shot 80% with a 1.33x adapter, there is no reason to use anything other than its 2.36:1 or 2.37:1 ratio, right? Just crop the non-anamorphic footage properly? As long as my horizontal dimensions are correct, any projector will take the footage?

Absolutely. I think there are actually two different paths you could take for the DCP though. You could just crop your non-ana footage to 2.36:1 and mix it in with the anamorphic and have this centered on what appears to be the standard "full container" 2K DCP frame size of 2048x1080, and you'll be projecting a letterboxed image. There are also "scope" versions of the spec, it seems, where you could incur a very slight top and bottom crop on the un-squeezed anamorphic footage to 2048x858, match this crop on your non-ana footage, and write this 2.39:1 version to the DCP spec filestream.
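To put numbers on the two DCP paths, here is a rough sketch. The 2048x1080 and 2048x858 frame sizes are the ones quoted in the post; the helper names and the 2554x1080 un-squeezed source size are illustrative assumptions.

```python
def picture_height(container_w=2048, full_w=2554, full_h=1080):
    """Height of the un-squeezed picture once scaled to the container width."""
    return round(container_w * full_h / full_w)


def letterbox_bar(container_h, picture_h):
    """Rows of black above (and below) a vertically centered picture."""
    return (container_h - picture_h) // 2


pic_h = picture_height()              # picture area inside a 2048-wide frame
bar = letterbox_bar(1080, pic_h)      # path 1: full container, letterboxed
scope_crop = pic_h - 858              # path 2: rows lost cropping to 2048x858

print(pic_h, bar, scope_crop)         # 866 107 8
```

Under these assumptions the scope path costs about 8 rows in total (roughly 4 top and 4 bottom), which fits the "very slight" crop described above.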

Most of the generic, pre-built anamorphic options in most software are likely just assuming a 2X ratio, since that's the most common being shot (Panavision, et al). I'm sure Century Optics and Panasonic, in their wildest dreams, never thought their original 16:9 adapters were going to be used this far in the future, on cameras so different from the 4:3 DV cameras for which they were originally intended. It's just by happenstance that we now have native 16:9 cameras that turn them into a more pleasingly cinematic aspect ratio and it's just luck that this approaches the most common format.

I'd expect later on (hopefully) we'll see built-in anamorphic handling where the software gives you a choice of everything from 1.25x/1.33x/1.5x/1.75x/2x to cover most of the bases, since it should be no more of a burden to handle one than another.

Anyway, it didn't click that you were also conforming non-anamorphic. That does create a slightly different wrinkle but if the ratio of footage is as you're saying, I'd "blow up" the non-anamorphic stuff and sharpen and then deal with an anamorphic workflow as if it were all shot that way rather than conform down to the lesser spec footage. That way your scope footage stays 100% quality and your workflow stays as low impact as possible.

...

Yes, when you work with the correct pixel aspect ratio the frame size doesn't actually change; it's just a flag that tells the software how to display the footage. Similarly, back in the DV and other 601 days folks were working with 720x480 frames that had a 0.89 pixel aspect ratio, which took the 1.5:1 file and created a 1.33:1 frame. You never actually conformed the image to 640x480 unless you intended for it to be viewed without distortion on a computer or in print. I couldn't tell you why they decided to standardize on a non-square pixel but those who chose to ignore this fact would invariably, at some time, wonder why their circles were no longer circles.
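The "it's just a flag" idea boils down to one multiplication: display aspect = stored width x pixel aspect ratio / stored height. A minimal sketch (the function name is hypothetical; the PAR values are the ones mentioned in this thread):

```python
def display_aspect(stored_w, stored_h, pixel_aspect):
    """Aspect ratio the viewer sees; the stored frame size never changes."""
    return stored_w * pixel_aspect / stored_h


# DV: 720x480 stored (1.5:1), shown at roughly 4:3 with a 0.89 PAR.
dv = display_aspect(720, 480, 0.89)

# 1.33x anamorphic: 1920x1080 stored (16:9), shown at roughly 2.36:1.
ana = display_aspect(1920, 1080, 1.33)

print(f"{dv:.3f} {ana:.3f}")
```

In both cases the file on disk keeps its original dimensions; only the interpretation changes.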

When you get to the step where you down-rez to the final 2048-wide DCP files, handling the pixel aspect ratio is more or less incidental because you're ultimately squeezing the vertical 1080 portion of the frame and only slightly expanding the 1920 portion. The net result is sharper, because it is filtered only once, than if you had first expanded out to 2554 and then proportionally scaled down to a 2048 width.

Not to confuse you, just trying to be thorough: there is software out there that concatenates all the transformations the user applies as a series of steps into one, which saves users from themselves and gives the same quality as the one-step process to get to "flat" 2048 DCP frames (they do this with color operations applied as serial steps too). I just don't know which apps, besides Nuke, are this smart under the hood.
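Here is a small sketch of what "concatenating" the two scaling steps means numerically. It only multiplies scale factors; it is not how any particular application implements this, and the names are illustrative.

```python
def compose(*scales):
    """Collapse a series of (x, y) scale steps into one (x, y) scale."""
    sx, sy = 1.0, 1.0
    for x, y in scales:
        sx *= x
        sy *= y
    return sx, sy


unsqueeze = (2554 / 1920, 1.0)           # step 1: stretch width only
fit_2k = (2048 / 2554, 2048 / 2554)      # step 2: proportional reduction

sx, sy = compose(unsqueeze, fit_2k)
print(round(sx, 4), round(sy, 4))        # 1.0667 0.8019
print(round(1920 * sx), round(1080 * sy))  # 2048 866
```

Applied as a single resample, the width grows by under 7% while the height shrinks to about 80%, so the image is only filtered once on its way to the 2K frame.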

...

With disk at no shortage and horsepower to spare, I dunno. I'm just thinking that keeping it anamorphic through and through, and then making various specifically targeted non-scope versions keeps you flexible. Once you arrive at your graded, 1920x1080 anamorphic master, ideally as something like ProRes 444, then it's just a matter of re-running that through anything that can use one of the DCP plug-ins and scale at the same time to arrive at your 2K. Later you'd be able to do the same to generate a BD version or DVD version, all of which have different colorspaces and frame sizes to conform to and getting to any one of them your lovely anamorphic footage is only ever transformed and filtered one time, ever, for each targeted version.

That said, if you're paying for suite time and have an operator that just isn't familiar with anamorphic and so they're just going to be distracted the whole time by their feelings of, "am I breaking it? am I doing this right?" then maybe a conform of everything prior to editorial to un-squeezed 2K wouldn't be so bad. Their not being comfortable costing you more money doesn't help anyone.

If they really have horsepower to spare it might be worth seeing if they could handle 2554x1080 frames and you master all the way through with that. There are slight advantages to doing it this way (*) but the footage becomes really heavy.

Anyway, that's just my thoughts. I could be missing something somewhere.

-

(*) Working to such a large, unsqueezed frame means you could apply non-anamorphic grain at this resolution which could lend the "feeling" of additional detail, where otherwise you'd have the standard phenomenon, in projection, of horizontally stretched grain. You could also, though I haven't tried myself, get a slightly different, maybe better, maybe no worse, result from doing your sharpening and/or chroma filtering at the un-squeezed frame size versus the squeezed frame size, plus the addition of the non-squeezed grain. We're talking really subtle differences though.

-


@BurnetRhoades, thank you again, lots of really good information.

The one bit I'm not following is how you get to 2554x1080 considering the below? What's the workflow to unsqueeze and change the frame size at the same time? My inclination is to go the final route you mention but I haven't followed how to create those sized frames without a kind of weird two step up-res.

"Yes, when you work with the correct pixel aspect ratio the frame size doesn't actually change, it's just a flag that tells the software how to display the footage. Similarly, back in the DV and other 601 days folks were working with 720x480 frames that had a 0.89 pixel aspect ratio which took the 1.42:1 file and created a 1.33:1 frame. You never actually conformed the image to 640x480 unless you intended for it to be viewed without distortion on a computer or in print. I couldn't tell you why they decided to standardize on a non-square pixel but those who chose to ignore this fact would invariably, at some time, wonder why their circles were no longer circles."

-

The one bit I'm not following is how you get to 2554x1080 considering the below? What's the workflow to unsqueeze and change the frame size at the same time? My inclination is to go the final route you mention but I haven't followed how to create those sized frames without a kind of weird two step up-res.

Two methods I'd consider:

1) After Effects

- load the footage and drop it on the "Create New Composition" button
- ensure your composite is in the appropriate color space and 32-bit
- open the composition properties dialog and change its resolution from 1920x1080 to 2554x1080
- select your clip (which now should appear "pillar boxed"), go to the Transformation options and you'll see the option to scale it to the composition size. I'd select the option to make it fit the composition horizontally just to be anal
- now your footage is scaled so you output it to at least ProRes 422HQ

2) MPEG Streamclip

- load the footage
- export to QuickTime
- select at least ProRes 422HQ and an output size of 2554x1080

...the scaling to its un-squeezed, full-resolution size isn't tricky at all and only requires one transformation step. Load>Scale>Save. Badda-Bing.
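For many clips, the same Load>Scale>Save step could in principle be scripted. The sketch below builds an ffmpeg command line; ffmpeg is not one of the tools discussed in this thread, so treat it as a swapped-in assumption, and the `_unsqueezed` naming is purely illustrative. It only constructs the argument list and does not run anything.

```python
from pathlib import Path


def unsqueeze_command(src, out_dir, width=2554, height=1080):
    """Build an ffmpeg argument list that scales a squeezed clip to
    width x height with square pixels and writes ProRes 422 HQ."""
    src = Path(src)
    dst = Path(out_dir) / (src.stem + "_unsqueezed.mov")
    return [
        "ffmpeg", "-i", str(src),
        # Single resample to the full un-squeezed size, then flag square pixels.
        "-vf", f"scale={width}:{height}:flags=lanczos,setsar=1",
        # prores_ks profile 3 corresponds to ProRes 422 HQ.
        "-c:v", "prores_ks", "-profile:v", "3",
        "-c:a", "pcm_s16le",
        str(dst),
    ]


cmd = unsqueeze_command("clip01.MTS", "out")
print(" ".join(cmd))
```

The list can be handed to `subprocess.run` once the settings have been checked on a single clip by eye.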

-

@BurnetRhoades, I think I begin to understand. I didn't follow the uprez to 2554 if 2554 was supposed to be some kind of 'native resolution'...but 1920 x 1.33 = 2554 so it's like we're building the frame and filling it in with the right shaped pixels, or something? Interesting stuff, at any rate, will give it a whirl.

-

Yeah, that's the un-squeezed frame size keeping the full height of the native frame. That's why, for the same aspect ratio, there's more information in an anamorphic image than shooting flat and cropping.

When you do a reduction you're still getting a better quality widescreen image compared to cropping but the difference isn't huge. Then you're retaining the aesthetic quality of the glass and how different it looks compared to flat photography cropped to the same aspect ratio.
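A quick pixel count illustrates the "more information" point. This is a sketch assuming a 1920x1080 sensor readout in both cases and a flat image cropped to the same 2554:1080 shape.

```python
# Anamorphic: the whole 1920x1080 frame carries picture.
anamorphic_pixels = 1920 * 1080

# Flat and cropped: only a 1920-wide strip of matching aspect ratio is used.
crop_height = round(1920 * 1080 / 2554)
flat_crop_pixels = 1920 * crop_height

ratio = anamorphic_pixels / flat_crop_pixels
print(anamorphic_pixels, flat_crop_pixels, round(ratio, 2))
# 2073600 1559040 1.33
```

The advantage works out to the squeeze factor itself, about a third more recorded pixels for the same final frame shape.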

-

Thank you for everything @BurnetRhoades, as soon as Creative Cloud would cough up After Effects last night I gave it a whirl as per your instructions and things became a lot more clear when I was able to watch the process: no vertical upres, then simply hit a button and it un-stretches into the 2554. Makes sense. Export the clip and drop it into a 2k timeline in FCPX and we're suddenly playing ball. (Weird that unless I install Premiere I only seem to be able to render out from AE in the Animation codec?!)

Of course I'm probably not going to be able to do it this way for 18 hours of footage, one clip, one comp at a time. I feel like compressor should be able to accomplish this, and if not perhaps motion, and if Motion perhaps it could be rigged to work as an effect in FCPX. Whether that will maintain as much quality as a 32bit After Effects comp remains to be seen.

-

One interesting little note...above when I wrote about running through compressor at 1.33 pixels and getting something taller than my changing vertical scale to 76%, or using the Cinelook 'widescreen' plugin and we thought that might make some sense, I think I was wrong. This 2554x1080 file is almost identical to the plugin, it translates to a 75% scale in FCPX (which explains why all the things I've been putting out at 76 have looked ok), which means the plugin guys going for an old film look are probably putting out 2.35:1 and I am getting 2.36:1 out of After Effects. This is good news, and comprehensible to me. Don't tell me I'm missing something, haha!
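The different vertical-scale values discussed in this thread map to aspect ratios as follows. This is a sketch assuming a 1920x1080 timeline where the clip keeps its full width and only the height is scaled.

```python
def aspect_from_vertical_scale(scale_percent, width=1920, height=1080):
    """Aspect ratio that results from shrinking only the height."""
    return width / (height * scale_percent / 100)


for pct in (75, 76, 80):
    print(pct, round(aspect_from_vertical_scale(pct), 2))
# 75 2.37
# 76 2.34
# 80 2.22

# Vertical scale that exactly undoes a 1.33x squeeze.
exact = 100 / 1.33
print(round(exact, 1))  # 75.2
```

So 75% and 76% both land within a couple of hundredths of 2.36:1, while 80% on the same timeline would be noticeably less wide.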

-

Not a problem, I just hope it ultimately helps y'out.

Yeah, the 18hrs of footage thing is why I'm leaning towards editing with the MTS as usual, then process (whether this means transcoding, un-squeezing, etc.) only the clips actually being used. That'll still be a lot of work but it'll still reduce wasted effort.

Here's where I kinda wish I hadn't abandoned programming. An EDL export from whatever editor you use gives a precise list of every clip actually used, plus in-n-out. Turning that into a batch list to handle all of prep for final grading, at this point, should be pretty easy to do. I was able to swap all references to proxy versions of our feature for full-rez clips going into the grading process with simple search-and-replace tools in TextEdit. This would require being a little more clever than that but not terribly.
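As a rough idea of how little code the EDL-to-batch-list step might take, here is a sketch. It assumes a CMX3600-style EDL with `* FROM CLIP NAME:` comment lines, handles plain cuts only (no dissolves), and the sample text is invented for illustration.

```python
import re

TC = r"(\d{2}:\d{2}:\d{2}[:;]\d{2})"
# Event line: number, reel, track, "C" for cut, then four timecodes.
EVENT = re.compile(rf"^(\d+)\s+(\S+)\s+(\S+)\s+(\S+)\s+{TC}\s+{TC}\s+{TC}\s+{TC}")
CLIP = re.compile(r"^\*\s*FROM CLIP NAME:\s*(.+)$")


def clips_from_edl(text):
    """Return one dict per event with source in/out and clip name."""
    clips, current = [], None
    for line in text.splitlines():
        line = line.strip()
        m = EVENT.match(line)
        if m:
            current = {"event": m.group(1), "src_in": m.group(5),
                       "src_out": m.group(6), "name": None}
            clips.append(current)
            continue
        m = CLIP.match(line)
        if m and current is not None:
            current["name"] = m.group(1).strip()
    return clips


SAMPLE = """TITLE: REEL 1
FCM: NON-DROP FRAME
001  AX       V     C        00:00:10:00 00:00:15:00 01:00:00:00 01:00:05:00
* FROM CLIP NAME: 00012.MTS
002  AX       V     C        00:01:02:10 00:01:04:00 01:00:05:00 01:00:06:14
* FROM CLIP NAME: 00031.MTS
"""

clips = clips_from_edl(SAMPLE)
for c in clips:
    print(c["name"], c["src_in"], c["src_out"])
```

The resulting list of names (de-duplicated) is what would be fed to whatever does the transcode and un-squeeze, so only footage actually in the cut gets processed.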

-

It does help, it does!

And there appears to be a path to batch processing with Compressor. By changing the geometry to 2554x1080 with a pixel aspect ratio of 1.33x, I can duplicate the AE results, and the quality actually looks a touch higher in Compressor. (That may be just because of this computer, where I only rendered out from AE to Animation.)

That means I can make a droplet and just drop all stuff on it.

In FCPX, the 2554x1080 files can be dropped into a 2k timeline for editing (just hit 'fill' on spatial conform), or one can use the 'open in timeline' workaround to get a 2554x1080 compound clip and edit entire sequences that way.

In fact, one can create the 2554x1080 compound clip, drop a 1920x1080 still-squeezed file into that timeline, change the horizontal scale to 80% and approximate the same thing and exports to 2554x1080. Not clear if there is a slight quality loss doing it this way vs doing it in compressor, yet, however.

Exciting stuff! Does present some challenges...noticed my gorilla grain doesn't work properly on a 2K timeline, for instance, I'm sure little things like that will pop up...

-

Might just be my numbers addled brain but I think this process looks better, too, as far as the LA7200, even some of the stuff on the edges I guess I was squishing it a bit, before.

-

Look into doing the resample in linear space; this gives better results, but AE's default interpolation is bilinear :S Maybe some Avisynth genie here can do it in linear gamma?
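To show why the working space matters when resampling, here is a toy sketch that averages two neighboring pixel values (the simplest possible downscale) both ways. It uses the sRGB transfer curve as a stand-in; the actual footage would use a video gamma, so the exact numbers are an assumption.

```python
def srgb_to_linear(v):
    """Decode a 0..1 sRGB-encoded value to linear light."""
    return v / 12.92 if v <= 0.04045 else ((v + 0.055) / 1.055) ** 2.4


def linear_to_srgb(v):
    """Encode a 0..1 linear-light value back to sRGB."""
    return v * 12.92 if v <= 0.0031308 else 1.055 * v ** (1 / 2.4) - 0.055


def average_gamma(a, b):
    """Blend directly on the encoded values (what a naive resize does)."""
    return (a + b) / 2


def average_linear(a, b):
    """Decode, blend in linear light, then re-encode."""
    return linear_to_srgb((srgb_to_linear(a) + srgb_to_linear(b)) / 2)


black, white = 0.0, 1.0
naive = average_gamma(black, white)
linear = average_linear(black, white)
print(round(naive, 3), round(linear, 3))
```

Blending a black and a white pixel in linear light gives a visibly brighter encoded value than the naive average, which is why fine high-contrast detail tends to darken when scaled in a gamma-encoded space.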

-

@kellar42 no, no, no...you may have already figured it out by now but at the 2554x1080 frame size the pixel aspect ratio is 1.0 and it's only when it's in squeezed form, on a 1920x1080 frame, that it's a 1.33 pixel aspect ratio.

I can't say 100% for sure but doing transformations inside FCP is ultimately lower quality than Compressor (or Color for that matter). You'd want to export your timeline to one of the other two to actually render and not go straight out of FCP. That's just going on hearsay though, I don't run any of these, I just hear stuff. It's a similar situation with Premiere's engine versus After Effects. It's fine, but the honest answer is the editor isn't the best place to actually render if you're looking to be really anal with the pixels.

-

@BurnetRhoades I know, I know, this ran through my head while doing it as well, but must be a compressor setting quirk, when I change the geometry to 2554x1080 but leave the pixel aspect ratio nothing happens...doesn't un-squeeze, but when I change both it gives me a perfect match of the After Effects footage :/.

Whatever is happening in compressor, once I understand why or get it right, I agree it'll be better than FCPX in the long run.

I almost prefaced my last post with 'I don't understand why this works, but this is what I'm finding...' haha...

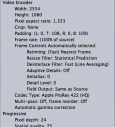

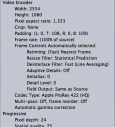

@heradicattor in Compressor linear is listed as 'better' for resizing quality and 'statistical prediction' as best. I've been using the latter. Should I not be? I like the Compressor results better than AE so far but I thought that might have been the way I exported from AE.

-

For reference, the Compressor settings, and a screenshot of an LA7200 file run through them (off-topic but this was before the Moon hack). Screenshot dimensions are not 2540x1080 because I captured them off a retina screen but the point is the aspect ratio is correct, exactly as it would be from AE. Weird, but it's working.

Screen Shot 2013-02-19 at 11.15.34 PM.png (534 x 592, 91K)

Screen Shot 2013-02-19 at 11.16.22 PM.png (2880 x 1306, 5M)

-

@Kellar42 Try 2580x1080 (the 2.40:1, or 2.39:1, standard) in ProRes 4444.
