It allows us to keep PV going, with more focus on AI, while remaining one of the few truly independent places.

-

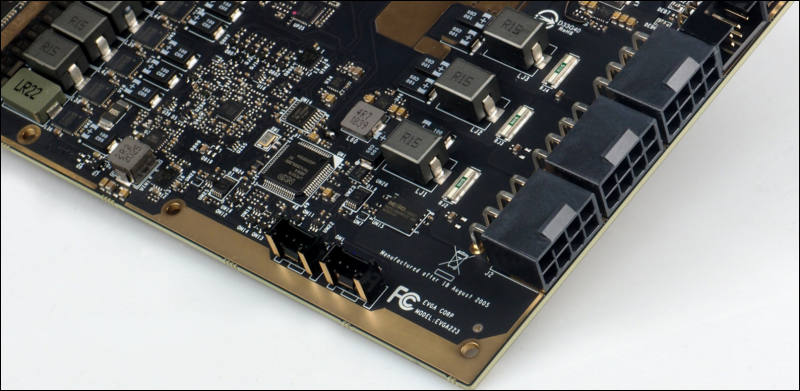

-

There is one big problem with the new RTX cards.

Ethereum mining rates are:

- RTX 2080 - 40 MH/s

- RTX 3080 - 115 MH/s

In Asia, around 90% of all cards go to miners now.

-

As mentioned above, it will be in very short supply.

-

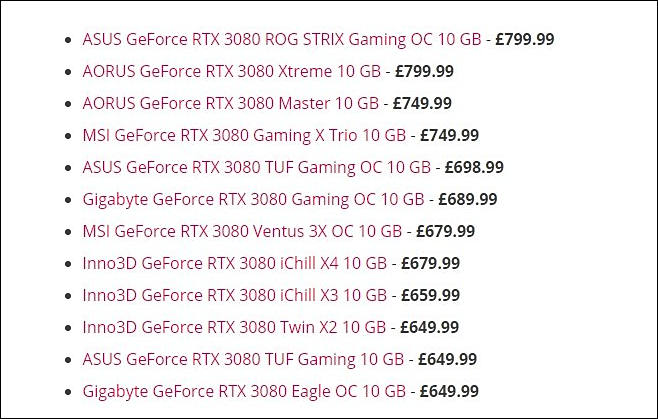

HDMI support

The cards support HDMI 2.1 at 48 Gbit/s, with DSC compression. The maximum supported resolution is 8K at 60 Hz with HDR active.
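A rough check of why DSC matters at this resolution. This is a minimal sketch, assuming 10 bits per color channel for HDR with full RGB (no chroma subsampling) and counting only active pixels; blanking intervals and link encoding overhead are ignored, so the real requirement is higher. The function name is illustrative and not taken from any specification.

```python
def uncompressed_gbit_per_s(width, height, refresh_hz, bits_per_channel=10, channels=3):
    """Raw data rate in Gbit/s for active pixels only (no blanking, no link overhead)."""
    bits_per_frame = width * height * bits_per_channel * channels
    return bits_per_frame * refresh_hz / 1e9

HDMI_2_1_LINK_GBIT = 48  # link rate quoted in the post

# Assumption: 8K means 7680 x 4320 pixels.
rate_8k60 = uncompressed_gbit_per_s(7680, 4320, 60)
needs_compression = rate_8k60 > HDMI_2_1_LINK_GBIT

print(f"8K60 10-bit RGB: {rate_8k60:.1f} Gbit/s, exceeds 48 Gbit/s link: {needs_compression}")
```

Under these assumptions the raw stream alone is already larger than the link rate, which is consistent with 8K 60 Hz HDR relying on DSC.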

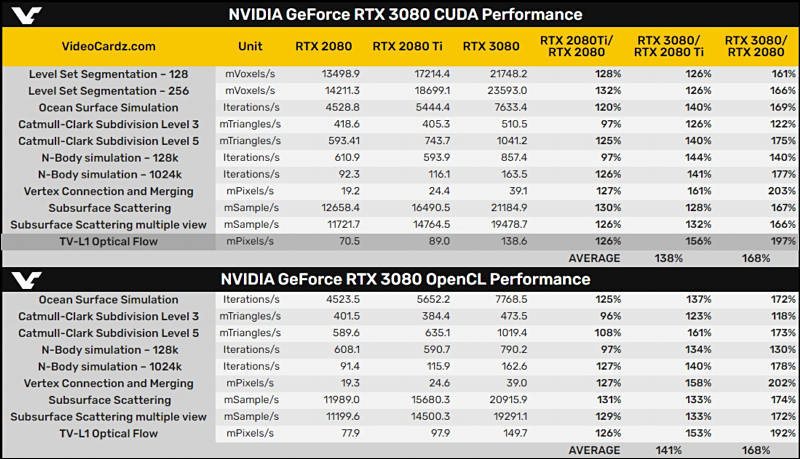

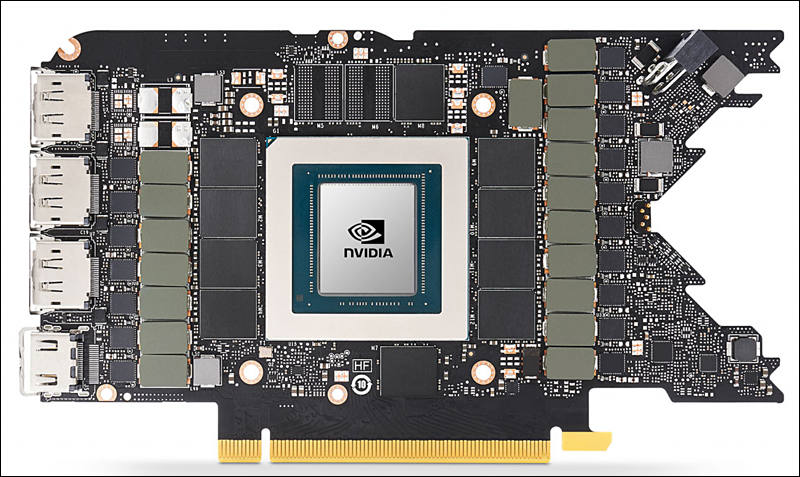

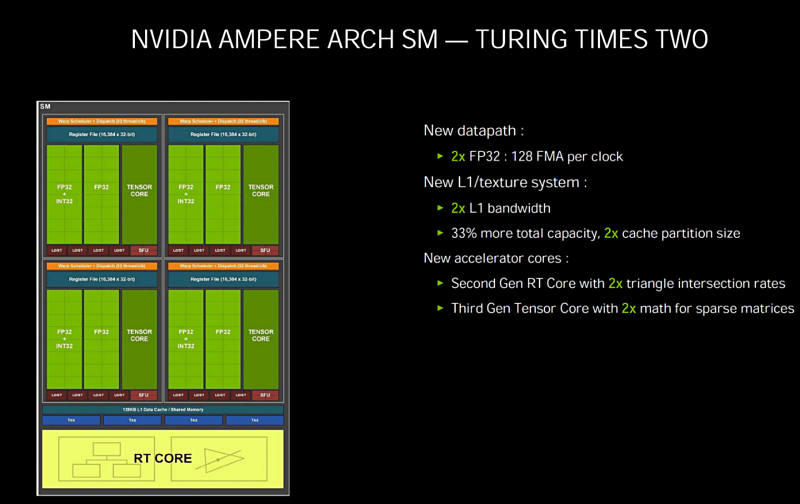

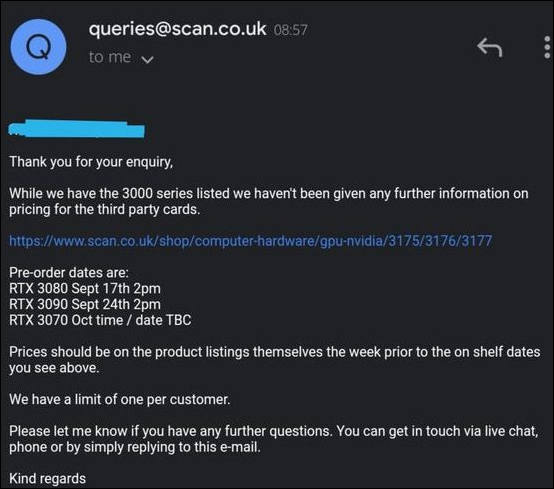

CUDA cores

One of the key design goals for the Ampere 30-series SM was to achieve twice the throughput for FP32 operations compared to the Turing SM. To accomplish this goal, the Ampere SM includes new datapath designs for FP32 and INT32 operations. One datapath in each partition consists of 16 FP32 CUDA Cores capable of executing 16 FP32 operations per clock. Another datapath consists of both 16 FP32 CUDA Cores and 16 INT32 Cores. As a result of this new design, each Ampere SM partition is capable of executing either 32 FP32 operations per clock, or 16 FP32 and 16 INT32 operations per clock. All four SM partitions combined can execute 128 FP32 operations per clock, which is double the FP32 rate of the Turing SM, or 64 FP32 and 64 INT32 operations per clock.
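The per-clock numbers in the quote above can be reproduced with simple counting. A minimal sketch using only the figures from the quoted text (4 partitions per SM, one 16-lane FP32 datapath plus one 16-lane datapath that runs either FP32 or INT32); the names are illustrative.

```python
PARTITIONS_PER_SM = 4
FP32_ONLY_LANES = 16  # datapath with 16 FP32 CUDA cores
SHARED_LANES = 16     # datapath that runs either 16 FP32 or 16 INT32 ops per clock

def sm_ops_per_clock(shared_path_runs_int):
    """Return (fp32_ops, int32_ops) per clock for one Ampere SM."""
    if shared_path_runs_int:
        fp32, int32 = FP32_ONLY_LANES, SHARED_LANES
    else:
        fp32, int32 = FP32_ONLY_LANES + SHARED_LANES, 0
    return PARTITIONS_PER_SM * fp32, PARTITIONS_PER_SM * int32

print(sm_ops_per_clock(False))  # (128, 0)  pure FP32
print(sm_ops_per_clock(True))   # (64, 64)  FP32 and INT32 together
```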

Doubling the processing speed for FP32 improves performance for a number of common graphics and compute operations and algorithms. Modern shader workloads typically have a mixture of FP32 arithmetic instructions such as FFMA, floating point additions (FADD), or floating point multiplications (FMUL), combined with simpler instructions such as integer adds for addressing and fetching data, floating point compare, or min/max for processing results, etc. Performance gains will vary at the shader and application level depending on the mix of instructions. Ray tracing denoising shaders are good examples that might benefit greatly from doubling FP32 throughput.
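How much the gain varies with the instruction mix can be illustrated with a very idealized model. This is only a sketch under strong assumptions: one SM partition, perfect scheduling, no memory or issue limits, Turing modeled as 16 FP32 lanes plus 16 separate INT32 lanes, and Ampere as 16 FP32 lanes plus 16 lanes that take either type. Real shaders will behave differently.

```python
def turing_clocks(fp_ops, int_ops, lanes=16):
    # FP32 and INT32 run on separate datapaths, so the longer queue decides.
    return max(fp_ops, int_ops) / lanes

def ampere_clocks(fp_ops, int_ops, lanes=16):
    # INT32 can only use the shared datapath; FP32 can use both datapaths.
    return max(int_ops / lanes, (fp_ops + int_ops) / (2 * lanes))

def speedup(int_fraction, total_ops=1600):
    """Idealized Ampere-over-Turing speedup for a given share of INT32 ops."""
    int_ops = total_ops * int_fraction
    fp_ops = total_ops - int_ops
    return turing_clocks(fp_ops, int_ops) / ampere_clocks(fp_ops, int_ops)

for share in (0.0, 0.25, 0.5):
    print(f"INT32 share {share:.0%}: {speedup(share):.2f}x")
```

In this model the full 2x only appears for pure FP32 code, and the advantage disappears once half of the instructions are integer.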

Doubling math throughput required doubling the data paths supporting it, which is why the Ampere SM also doubled the shared memory and L1 cache performance for the SM (128 bytes/clock per Ampere SM versus 64 bytes/clock in Turing). Total L1 bandwidth for GeForce RTX 3080 is 219 GB/sec versus 116 GB/sec for GeForce RTX 2080 Super.
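The two bandwidth figures can be cross-checked against the bytes/clock numbers. A small sketch, assuming boost clocks of 1.71 GHz for the RTX 3080 and 1.815 GHz for the RTX 2080 Super; these clocks come from public spec sheets, not from the quoted text.

```python
def l1_bandwidth_gb_per_s(bytes_per_clock, clock_ghz):
    """Bandwidth of one SM in GB/s: bytes per clock times clocks per nanosecond."""
    return bytes_per_clock * clock_ghz

# Assumed boost clocks (not stated in the quote).
ampere_sm = l1_bandwidth_gb_per_s(128, 1.71)
turing_sm = l1_bandwidth_gb_per_s(64, 1.815)

print(f"RTX 3080: {ampere_sm:.0f} GB/s, RTX 2080 Super: {turing_sm:.0f} GB/s")
```

With these assumed clocks the results match 219 and 116 GB/sec, which suggests the quoted figures describe a single SM at boost clock rather than the whole chip.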

Like prior NVIDIA GPUs, Ampere is composed of Graphics Processing Clusters (GPCs), Texture Processing Clusters (TPCs), Streaming Multiprocessors (SMs), Raster Operators (ROPS), and memory controllers.

The GPC is the dominant high-level hardware block with all of the key graphics processing units residing inside the GPC. Each GPC includes a dedicated Raster Engine, and now also includes two ROP partitions (each partition containing eight ROP units), which is a new feature for NVIDIA Ampere Architecture GA10x GPUs. More details on the NVIDIA Ampere architecture can be found in NVIDIA’s Ampere Architecture White Paper, which will be published in the coming days.
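Since ROPs are now tied to GPCs, the ROP count follows directly from the GPC count. A minimal sketch using the two numbers from the quote; the GPC count passed in below is only an example value, not something stated in the text.

```python
ROP_PARTITIONS_PER_GPC = 2  # from the quoted description of GA10x
ROPS_PER_PARTITION = 8

def total_rops(gpc_count):
    """Number of ROP units for a GA10x GPU with the given number of active GPCs."""
    return gpc_count * ROP_PARTITIONS_PER_GPC * ROPS_PER_PARTITION

# Hypothetical example: a chip with 6 active GPCs.
print(total_rops(6))  # 96
```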

Lack of progress with encoders

For RTX 30 Series, we decided to focus improvements on the video decode side of things and added AV1 decode support. On the encode side, RTX 30 Series has the same great encoder as our RTX 20 Series GPU. We have also recently updated our NVIDIA Encoder SDK. In the coming months, livestream applications will be updating to this new version of the SDK, unlocking new performance options for streamers.

In theory, GPU processing performance in NLEs should rise by 70-80% compared to the 2080 Ti (for RTX 2080).

-

No one knows. It all depends heavily on what you do.

Resolve has an extremely inefficient architecture, especially the GPU part, which is aimed at complex, unusual workflows. For typical simple grading it runs at around 1/4-1/5 of the speed that would be possible if they did it all properly with optimized code.

I once talked to a developer. Industry chit-chat has it that BM has an agreement with some other NLE manufacturers about where they won't go. If they wanted to, aimed much more at beginners, and focused on real optimization at the FCP X level, they could wipe out everyone but Adobe.

-

So is upgrading from a 1080 Ti to an RTX 3080 going to make a significant improvement for Resolve Studio?

-

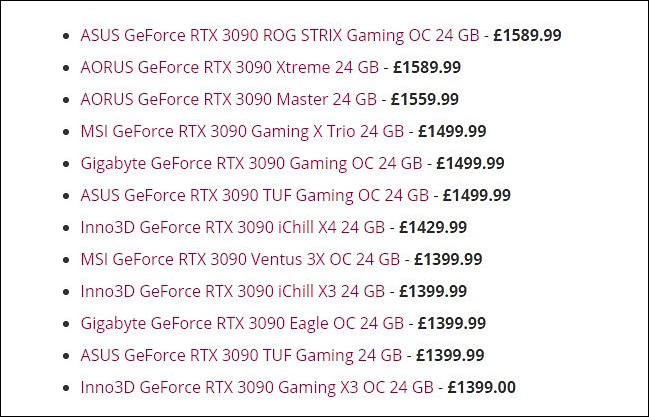

Some UK prices

-

An industry source said that post-launch "no stock will be available till the end of the year". The first wave of cards is said to be small, very, very small, possibly the smallest launch in many years.

Stock will be extremely low for the next couple of months. Why? Samsung 8nm yields are bad at this point, and NVIDIA might not want to make too many before the yields improve.

-

No, they won't. They doubled some ALU parts, but not all; the buses and everything else are the same, as perhaps are some parts of the shader cores.

Rumor is that the actual performance gain is from 10% up to 25% per CUDA core in real tasks.

If you check, it was a last-minute marketing decision, as all marketing materials even last week referenced the proper number of CUDA cores.

-

@Vitaliy_Kiselev Those FP32 numbers are confusing... does it mean those cards will instantly double in performance when used for DaVinci Resolve (+NeatVideo), which rely heavily on FP32 calculations?

-

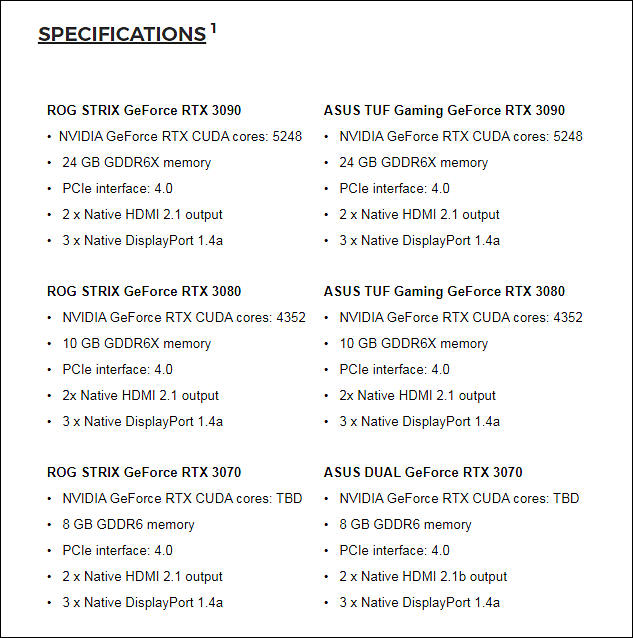

We have a real mess with CUDA core counting, as in the official ASUS PR the count is 1/2 of Nvidia's marketing number.

And here is the explanation (yes, it is an Nvidia PR stunt!)

I have seen some people confused by Ampere’s CUDA core count. Seemingly out of nowhere, NVIDIA has doubled the core count per SM. Naturally, this raises the question of whether shenanigans are afoot. To understand exactly what NVIDIA means, we must take a look at Turing.

In Turing (and Volta), each SM subcore can execute 1 32-wide bundle of instructions per clock. However, it can only complete 16 FP32 operations per clock. One might wonder why NVIDIA would hobble their throughput like this. The answer is the ability to also execute 16 INT32 operations per clock, which was likely expected to prevent the rest of the SM (with 32-wide datapaths) from sitting idle. Evidently, this was not borne out.

Therefore, Ampere has moved back to executing 32 FP32 operations per SM subcore per clock. What NVIDIA has done is more accurately described as “undoing the 1/2 FP32 rate”.

As for performance, each Ampere “CUDA core” will be substantially less performant than in Turing because the other structures in the SM have not been doubled.

On Pascal, each SM contained 64 CUDA cores, and each CUDA core was an FP32 core. On Turing, each SM contained 64 CUDA cores, and each CUDA core was considered 1 FP32 core + 1 INT32 core. Now they doubled the FP32 cores per CUDA core but didn't mention anything about INT32 cores, so we suppose that each CUDA core is now 2 FP32 + 1 INT32, or, if they doubled INT32 as well, 2 FP32 + 2 INT32. The TFLOPS calculations were always based on FP32 anyway, so using the doubled cores is correct for the TFLOPS measurement, since rasterization was always done in FP32. They also didn't release any info about register sizes, cache, ROPs, etc., so I believe the only doubled thing is the FP32 and the rest is a smaller upgrade; otherwise it would be basically 2x Turing lol.

They probably only doubled the FP32 logic because it's the most used in games.
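The TFLOPS point can be made concrete. A rough sketch of the usual peak FP32 formula (lanes x 2 operations per fused multiply-add x clock), assuming the RTX 3080 figures from public spec sheets: 8704 CUDA cores by Nvidia's counting and a 1.71 GHz boost clock. Neither number appears in the posts above, and the function name is illustrative.

```python
def peak_fp32_tflops(fp32_lanes, clock_ghz):
    """Theoretical peak: each lane does one FMA (2 floating point ops) per clock."""
    return fp32_lanes * 2 * clock_ghz / 1000

# Assumed RTX 3080 figures (not from the thread).
NVIDIA_CORE_COUNT = 8704
HALVED_CORE_COUNT = NVIDIA_CORE_COUNT // 2  # counting the Turing way
BOOST_GHZ = 1.71

doubled = peak_fp32_tflops(NVIDIA_CORE_COUNT, BOOST_GHZ)
halved = peak_fp32_tflops(HALVED_CORE_COUNT, BOOST_GHZ)
print(f"doubled count: {doubled:.1f} TFLOPS, halved count: {halved:.1f} TFLOPS")
```

The peak number only tracks the FP32 lane count, so it says nothing about whether registers, caches, or buses were scaled to match.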
