
I think I'm misunderstanding something about the virtues of v-log and flat profiles. If someone could explain this to me, I'd be grateful.

This is what I think is going on. V-log delivers a very flat image, where the darkest elements are greyish, and the lightest elements are bright, but not completely blown out. This enables color graders to "stretch" those values, and thus, have some play with the midrange gamma. But doesn't this mean that the color palette leaves out a lot of value?

I don't mean that it leaves out the highs and lows. It seems to me that if you take a narrow range of luminance and scale it to a larger range, you don't get pixels with in-between values. Say I have a photo with luminances ranging from 0 to 100, and I scale that to 0-200. Each luminance value would be doubled (1 becomes 2, 3 becomes 6, 42 becomes 84, etc.), so there'd be no real increase in the number of distinct luminances. And in some circumstances, you'd get banding if the jump is too big.
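To make the arithmetic in this example concrete, here is a minimal Python sketch. It assumes integer luminance values and a plain multiply-by-two stretch, which is only the simplified model used in the question, not what a real grading tool does.

```python
# Scale every integer luminance in 0..100 by 2, as in the example above.
source_levels = range(0, 101)
stretched = [2 * v for v in source_levels]

# The number of distinct values does not grow when the range is stretched.
distinct_before = len(set(source_levels))
distinct_after = len(set(stretched))

# Output values in 0..200 that never occur after stretching (the "gaps").
missing = sorted(set(range(0, 201)) - set(stretched))

print(distinct_before, distinct_after)
print(missing[:5])
```

Every odd output value is missing, which is the "no in-between values" effect described above.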

And if this is the case, then why compress the luminances into such a narrow range? Why not scale them to the camera's full luminance range, and preserve as much of the "in-between" luminances as possible? If your camera has a range of, say, 1-1000, then why reduce the image to 300-700?

As I said, I don't think I'm understanding this correctly. Anyone got a good FAQ?

-

This is from their PDF, and they again use the term "reflection" instead of simply "sensor raw value".

Because it's not the raw sensor value. It's been debayered, scaled, white balanced, and transformed into V-Gamut. Usually it's just called 'scene linear'.

-

What some people are calling IRE is really just the percentage on the RGB scale, where (0, 0, 0) is the black point and (100, 100, 100) is the white point. We use percentages because there are different ways of encoding RGB values, and we want to specify the RGB values without regard to any particular encoding. The code values of black and white will be different depending on what RGB encoding you are using. E.g., Rec.709 specifies a couple of ways of encoding RGB values (8-bit and 10-bit Y'CbCr). H.264 adds another (video_full_range_flag). HDMI has a few different encodings. There are multiple common RGB encodings, too, like floating point or integer with different ranges. But every one of those RGB encodings defines the code value for black and the code value for white and the ranges used, so that code values can be translated to and from an RGB percentage.
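As a rough illustration of translating between code values and RGB percentages, here is a small Python sketch. The black and white code values in the table are the commonly cited ones for 8-bit and 10-bit studio range and 8-bit full range; the helper names are hypothetical and chosen only for this example.

```python
# (black code, white code) for a few common encodings (assumed values).
ENCODINGS = {
    "8-bit studio range": (16, 235),
    "10-bit studio range": (64, 940),
    "8-bit full range": (0, 255),
}


def code_to_percent(code, black, white):
    """Map a code value to a percentage where black is 0% and white is 100%."""
    return 100.0 * (code - black) / (white - black)


def percent_to_code(percent, black, white):
    """Map a percentage back to the nearest integer code value."""
    return round(black + percent / 100.0 * (white - black))


for name, (black, white) in ENCODINGS.items():
    print(name, "black =", percent_to_code(0, black, white),
          "white =", percent_to_code(100, black, white))
```

The same percentage lands on different code values in each encoding, which is why the percentage is the more portable way to state a level.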

For cameras that record internally to a log color space, the RGB encoding is almost always Rec.709. Sony S-Log, Canon Log, Arri Log C, and Panasonic V-Log use Rec.709. GoPro Protune is the one exception I know of.

IRE is a scale for voltage in analog video systems. It is absolutely well defined, in IEEE standard 205-1958. IRE is defined in terms of the blanking level and the white level. It can be related to percentages on the RGB scale, if you also say which analog standard you are using. For analog HD video standards, IRE is equal to the RGB percentage. For analog SD video, they are not equal, depending on which country's analog standard you use. But these days everything's digital, so it's rather pointless to talk about IRE. The different manufacturers have not been consistent about dropping the outdated term.

-

Such limitation has absolutely nothing to do with DR of the sensor or footage. It affects only tonal properties.

Exactly, but the hype for DR has become so one-dimensional that many have never even heard of tonal range, much less appreciate the tradeoffs involved in maximizing DR at its expense. Panasonic is marketing the GH4 upgrade both as a consumer product for those who desire Vlog bragging rights (e.g. versus Sony fanboys), as well as a tool for those who will actually use it as intended in a 10-bit LUT-based post production workflow.

-

V-log will be great for people using external 10 bit recording. 8 bit video has been a problem since the early 1990's when 10 bit became the accepted minimum for doing video effects work to avoid visual artefacts appearing during compositing many passes on digital tape. This whole issue has more to do with bit depth than where the log recorded black and white levels appear on an IRE scale.

BTW, IRE is a simple 0-100% scale over code values 16-235 (8-bit) or 64-940 (10-bit), as defined by the Rec. 601 and Rec. 709 broadcast standards.

Most manufacturers are trying to move forward with new standards but must also remain compatible with existing broadcast standards.

I have done extensive tests with my GH4 using the 16-235, 16-255 & 0-255 settings to see how these settings were interpreted by Avid Media Composer, FCP7, FCPX, Adobe Premiere & Autodesk Smoke, all on Mac OSX. The only setting which mapped levels correctly on import to all those applications was 16-235. Theory is fine for discussion but nothing beats real-world practical testing with technical knowledge and accurate measuring instruments.

I will be VERY interested to see how 8 bit V-log holds up when pushed hard in colour grading software. Until I test it, it's just talk.

-

With V-Log-L on an 8-bit codec, the decoded output data will be limited to the range of 32-182, only about 7-bit precision. Regardless of how the YUV data is internally encoded within the H.264 macroblocks, the decoder will not produce any more image detail than that.

It can only be explained by utter incompetence of the people who made it, or by a direct order from managers who wanted to degrade it somehow.

I seriously doubt the GH4 can squeeze 12-stops of dynamic range without visible degradation into something closer to 7-bit precision, regardless of the camera's increased bitrates.

Such limitation has absolutely nothing to do with DR of the sensor or footage. It affects only tonal properties.

The same goes for bitrate increases: the main advantage lies in the high frequencies of the color components.

-

One of the explanations of this option that I found is that it is a flag that tells the H.264 decoder to scale the actual data, which is in the 16-235 range, into the 0-255 range.

Yes, and in the case of V-Log-L encoding, it's the limits it imposes on the scaled 8-bit output of the decoder that degrade the quality of the image. With V-Log-L on an 8-bit codec, the decoded output data will be limited to the range of 32-182, only about 7-bit precision. Regardless of how the YUV data is internally encoded within the H.264 macroblocks, the decoder will not produce any more image detail than that.
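The "about 7-bit" figure can be checked with a quick calculation. This is a minimal sketch that takes the 32-182 range quoted above at face value; `effective_bits` is a hypothetical helper name used only for this illustration.

```python
import math


def effective_bits(lowest_code, highest_code):
    """Bits needed to represent every integer code in an inclusive range."""
    levels = highest_code - lowest_code + 1
    return math.log2(levels)


# Range quoted above for V-Log-L in an 8-bit codec.
print(round(effective_bits(32, 182), 2))
# Full 8-bit range, for comparison.
print(effective_bits(0, 255))
```

A range of 151 levels works out to a little over 7 bits, compared with 8 bits for the full 0-255 range.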

In my experience, 8-bit H.264 was just barely detailed enough to handle the GH2's 10-stop dynamic range with high image quality, but that was after the camera was hacked to record at 100Mbps. I seriously doubt the GH4 can squeeze 12-stops of dynamic range without visible degradation into something closer to 7-bit precision, regardless of the camera's increased bitrates.

-

The GH4 supports this feature with a user-selectable option. When decoded, a full-swing H.264 stream will produce 8-bit data ranging from 0-255.

Did you test this by looking inside the decoder? One of the explanations of this option that I found is that it is a flag that tells the H.264 decoder to scale the actual data, which is in the 16-235 range, into the 0-255 range.

Btw, the range restriction came from the analog era (as did stupid IRE). In digital it makes exactly zero sense, but the people who developed the standards are mostly old-fashioned guys.

-

Yes, 16-235 studio-swing is the default standard for 8-bit Rec 709, but the standard also supports optional bitstream metadata flags that can be used to indicate 0-255 full-swing data. The GH4 supports this feature with a user-selectable option. When decoded, a full-swing H.264 stream will produce 8-bit data ranging from 0-255.
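For reference, the conventional luma mapping from studio-swing to full-swing codes can be sketched as below. This is only the textbook scaling, with a hypothetical function name; whether a given decoder or editing application applies it, and at which stage, is exactly the point under discussion here and is not settled by this sketch.

```python
def studio_to_full(code):
    """Map an 8-bit studio-swing luma code (16-235) to full swing (0-255).

    Codes outside 16-235 (footroom and headroom) are clamped after scaling.
    """
    scaled = (code - 16) * 255 / 219
    return max(0, min(255, round(scaled)))


for code in (16, 126, 235):
    print(code, "->", studio_to_full(code))
```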

-

Btw

Rec. 709 coding uses SMPTE reference levels (a.k.a. "studio-swing", legal-range, or narrow-range levels), where reference black is defined as 8-bit interface code 16 and reference white is defined as 8-bit interface code 235. Interface codes 0 and 255 are used for synchronization, and are prohibited from video data. Eight-bit codes from 1 through 15 provide footroom, and can be used to accommodate transient signal content such as filter undershoots. Eight-bit interface codes 236 through 254 provide headroom and can be used to accommodate transient signal content such as filter overshoots.

Note that the latest standard for UltraHD still uses the same approach (the full range is not usable).
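The code ranges in the quoted passage can be summarized in a few lines of Python. This is only a restatement of the numbers quoted above; the function name and the label strings are made up for illustration.

```python
def classify_8bit_code(code):
    """Label an 8-bit interface code according to the ranges quoted above."""
    if code in (0, 255):
        return "sync (prohibited for video data)"
    if 1 <= code <= 15:
        return "footroom"
    if 16 <= code <= 235:
        return "reference range (black to white)"
    if 236 <= code <= 254:
        return "headroom"
    raise ValueError("not an 8-bit code: %r" % (code,))


for code in (0, 8, 16, 235, 240, 255):
    print(code, classify_8bit_code(code))
```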

-

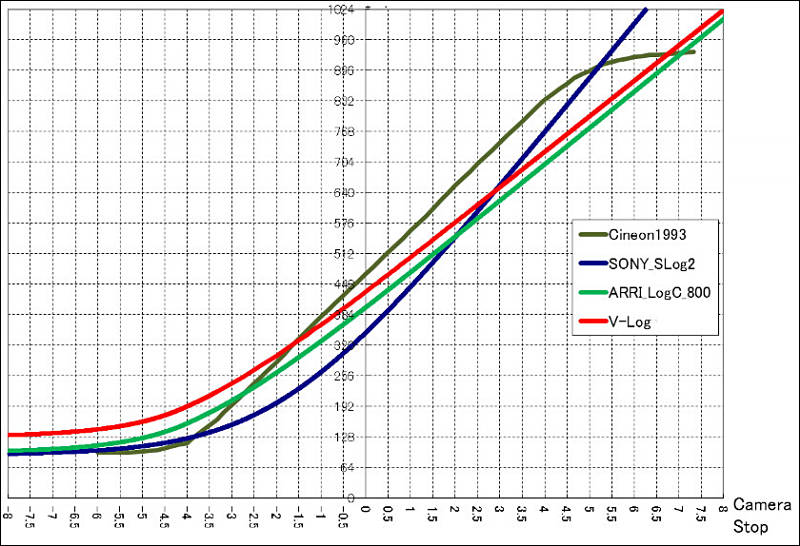

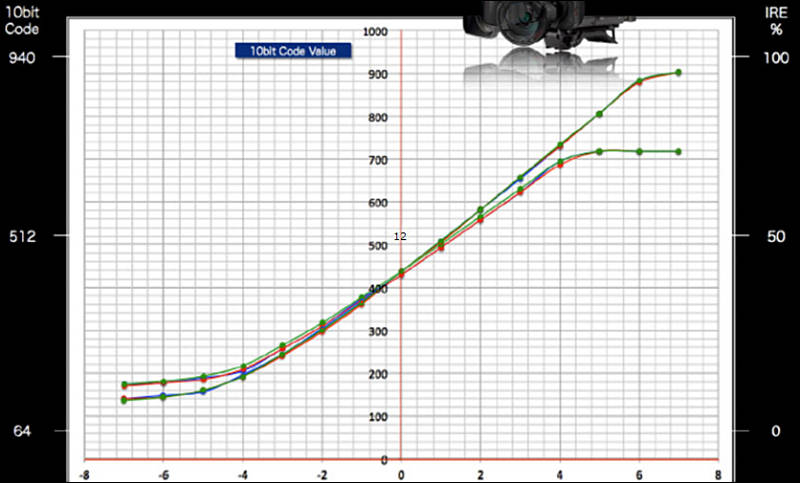

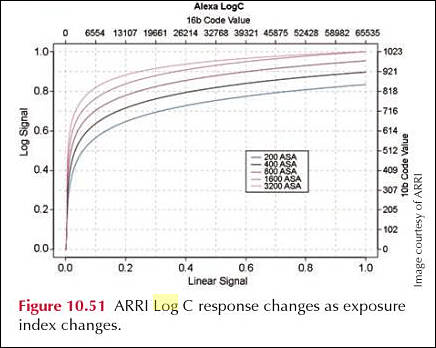

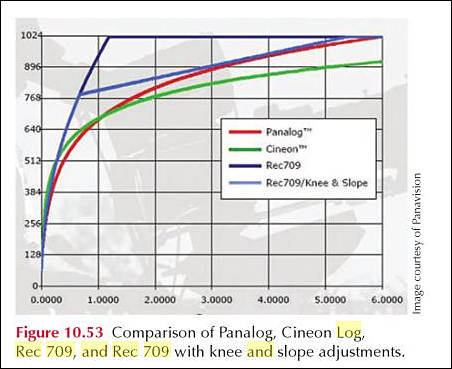

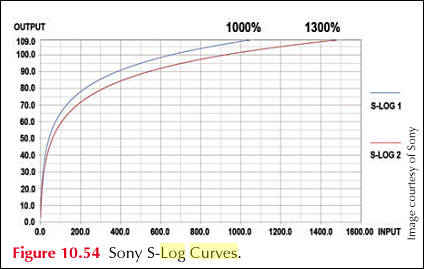

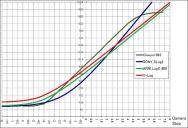

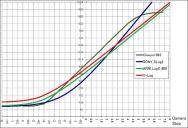

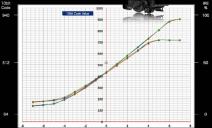

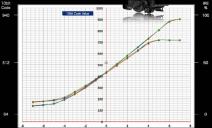

Some more charts (they look different because of x axis difference)

sales15.jpg (436 x 348, 28K)

sales16.jpg (452 x 369, 33K)

sales17.jpg (424 x 269, 23K)

-

sales13.jpg (800 x 546, 97K)

sales14.jpg (800 x 483, 61K)

-

The function for converting from linear signal to V-Log data is as follows. With linear reflection as “in” and V-Log data as “out”,

out = 5.6*in+0.125 (in < cut1 )

out = c*log10(in+b)+d (in >= cut1 )

cut1 = 0.01, b=0.00873, c=0.241514, d=0.598206

This is from their PDF, and they again use the term "reflection" instead of simply "sensor raw value".
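The piecewise function quoted above can be sketched in Python as follows. The constants are copied from the quoted formula; the function name `vlog_encode` and the sample inputs are only illustrative, and the output is the normalized V-Log value (0 to 1), not a code value for any particular bit depth.

```python
import math

# Constants as quoted from the Panasonic PDF above.
CUT1 = 0.01
B = 0.00873
C = 0.241514
D = 0.598206


def vlog_encode(linear_in):
    """Convert a linear value ("in") to normalized V-Log data ("out")."""
    if linear_in < CUT1:
        # Linear segment for the darkest values.
        return 5.6 * linear_in + 0.125
    # Logarithmic segment for everything else.
    return C * math.log10(linear_in + B) + D


for value in (0.0, 0.01, 0.18, 0.9):
    print(value, round(vlog_encode(value), 4))
```

With these constants, black (0.0) maps to 0.125 and an input of 0.18 maps to roughly 0.42, and the two segments meet at `cut1`.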

-

On a camera with an 8-bit codec like the GH4, you would have a scale from 0-255. That means highlight clipping would be recorded at around 180, with no image details recorded in the range 181-255.

It really makes no sense at all.

Only a marketing idea (to make it look dull and super flat) could lead to this, or some utter failure that Panasonic proponents want to hide behind smart-looking terms.

-

IRE is not rubbish. It measures luminance values in the decoded video signal which allows us to measure display brightness without referring to bit depth.

OK, how about using a simple percentage in this case (0-100%)? All the IRE complications came from the reality we had with the analog signal.

-

IRE is not rubbish. It measures luminance values in the decoded video signal which allows us to measure display brightness without referring to bit depth.

-

Is it possible that in V-Log mode, the GH4's exposure meter and zebras will be recalibrated for accurate V-Log exposure metering as part of the new firmware? I am thinking, for example, of the BMPCC: its in-camera zebras are calibrated for its own film log, right? So I expose just shy of the zebras clipping at 100% for all important highlights, and this works fine when there is sufficient light and gives me the maximum safe exposure. Why should GH4 V-Log be so much more difficult to use? I guess time will tell where the zebras need to be set to protect important highlights.

-

Bullet point style. Do this -> then this. Expose to this. Etc. End the IRE rubbish. Just simple rules.

-

Shooting log rules topic needed.

LOL

-

@VK we need to fix this problem. Shooting log rules topic needed.

-

If you overexpose the footage beyond this point, its dynamic range will no longer match the calibration of the LUT and the results will be inaccurate, particularly in the non-logarithmic 4-stops of shadow detail range. Used in this way, V-Log-L becomes a subjective flavoring, like any other built-in profile.

One thing that amazes me is how much can be made of an extremely simple bit of math, once you start adding, um, smart words.

-

Wait and get your hands on V-Log L for the GH4. Shoot an object in V-Log L at ISO 3200, with the exposure adjusted so that the object is just below clipping in the V-Log L output. Shoot the same object as a raw photo at ISO 3200, with the same exposure. Use RawDigger or some such tool to analyze the raw image. Will the object be just below clipping in the raw photo? What's the highest ISO setting that will make the object be below clipping in the raw photo?

Well, it was not me who stated all this, hence - do the tests.

And leave ISO out of all this. Just fix the ISO (low is preferable, for obvious reasons) and do not touch the exposure. The target of these tests is to get the math function. You do not need to adjust anything.

-

[With V-Log-L], if we expose a stop or two more brightly than we normally do, those extra stops shift down and enable us to gain more detail in the midrange and lower ranges.

As with all log profiles intended for use with a calibrated LUT, V-Log-L is designed to be used at exactly one specific exposure - with highlight clipping at 79 IRE. If you overexpose the footage beyond this point, its dynamic range will no longer match the calibration of the LUT and the results will be inaccurate, particularly in the non-logarithmic 4-stops of shadow detail range. Used in this way, V-Log-L becomes a subjective flavoring, like any other built-in profile.

-

Perform this test, and you will understand the connection between V-Log L and sensor gain:

Wait and get your hands on V-Log L for the GH4. Shoot an object in V-Log L at ISO 3200, with the exposure adjusted so that the object is just below clipping in the V-Log L output. Switch the photo style to Standard and shoot the same object as a raw photo at ISO 3200, with the same exposure. Use RawDigger or some such tool to analyze the raw image. Will the object be just below clipping in the raw photo? What's the highest ISO setting that will make the object be below clipping in the raw photo?

-

Full well capacity doesn't change because of V-Log L. Panasonic was not throwing away tons of highlight range captured by the sensor in the days before V-Log L.

Well, as they openly state, they did throw out stuff, just read release notes.

Why do you think V-Log L is not available at the same base ISO as standard photo styles?

What exactly is "base ISO" in your understanding?

Because for the same ISO setting, it uses a lower gain, and of course there is a minimum gain. I don't like to debate with you. Just think about it.

I think you are utterly confused. It is not a debate, just a description of how things actually work; it is not magic.

The actual pixel on the sensor collects charge as a result of photons hitting it. Hence it depends only on exposure time (with the same scene, lens and all). The sensor has analog gain (>=1.0) before the ADC. As no amplifier is ideal, it adds some noise (on top of thermal noise and other sensor noise). And the ADC produces raw values (which are also corrected to reduce fixed-pattern noise and other things).

V-Log, or any log format, is just another function transforming raw values into an image. Mathematically it is different, but it works in the same place as all the normal modes.

-

How does V-Log L magically extend the highlight range without turning down the gain? Full well capacity doesn't change because of V-Log L. Panasonic was not throwing away tons of highlight range captured by the sensor in the days before V-Log L. Why do you think V-Log L is not available at the same base ISO as standard photo styles? Because for the same ISO setting, it uses a lower sensor gain, and of course there is a minimum gain. I don't like to debate with you. Just think about it.
