
ACES is a color encoding system and workflow for motion pictures. It enables accurate exposure compensation and color balancing, and lets you easily match between shots and cameras.

I've posted a tutorial video and OpenColorIO configurations for Sony Vegas Pro: http://www.jbalazer.com/aces-in-sony-vegas

Intro and Tutorial video:

I've created new IDTs for the Panasonic GH2 and GoPro Protune. IDTs for Canon Log and Panasonic V-Log can also be added, but I need test footage to make sure that the levels are mapped correctly.

This Panasonic GH2 test video demonstrates the effects of exposure compensation in ACES, and shows the useful range of exposure settings for this camera. It also demonstrates that you don't need a log camera to take advantage of ACES. Even a regular Rec.709 camera works well with ACES, provided that you have an IDT that accurately transforms the video into the linear color space. A log camera has more exposure latitude, of course.

-

@balazer: Thanks for the projects! Actually, what you sent me is what I've already tested. The three color bars look the same; maybe only the .exr has the red slightly pinkish. I rendered a quick 2-in-1 test: with your settings and with my settings. The result is almost the same (if anything, mine looks better). I'm not sure I understand this process and its theory, but when I use the plug-in to compensate for the image differences between the different ACES view transforms and color spaces, I get one and the same final result. Confusing...

-

Actually the bright parts are not clipped. I'm the one who decreased them a little to get a softer, filmic image, unlike what the GH2 produces, and as you can see, they are more detailed after my "mess". I tried absolutely every available render color space option and was really not satisfied! Whatever the theory says, I did everything by how it looks. Of course, this was a fast, simple test without lights, composition, etc., but I'd be very interested in your way of processing and rendering the original GH2 video file I showed above. If you want, I can upload the video straight from the GH2, ok?

Yes, I tried gamma 2.222 at first, but I definitely don't like the result. Don't ask me to show it to you.

-

Set the compositing gamma to 2.222, not 1.00. Render to a video file color space, not a monitor preview color space. I suspect that the filter you're using does not preserve color values above 1.0, and that's why the bright parts are clipped. ACES uses color values well above 1.0. ACEScc uses 0-1.
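To illustrate why a filter that only keeps 0-1 clips linear ACES highlights but not ACEScc, here is a rough sketch. It uses the ACEScc log curve as I understand it from the ACEScc specification, and it leaves out the AP0 to AP1 gamut matrix that a full conversion includes. `clamping_filter` is a hypothetical stand-in for a filter that clamps its input, not the actual FilmVision filter.

```python
import math

def lin_to_acescc(lin):
    """Encode one scene-linear channel with the ACEScc log curve."""
    if lin <= 0.0:
        return (math.log2(2.0 ** -16) + 9.72) / 17.52
    if lin < 2.0 ** -15:
        return (math.log2(2.0 ** -16 + lin * 0.5) + 9.72) / 17.52
    return (math.log2(lin) + 9.72) / 17.52

def acescc_to_lin(cc):
    """Decode one ACEScc channel back to scene-linear."""
    if cc <= (9.72 - 15.0) / 17.52:
        return (2.0 ** (cc * 17.52 - 9.72) - 2.0 ** -16) * 2.0
    return 2.0 ** (cc * 17.52 - 9.72)

def clamping_filter(value):
    """Hypothetical filter that discards everything outside 0-1."""
    return min(max(value, 0.0), 1.0)

# Mid grey up to bright highlights, in scene-linear units.
scene_linear = [0.18, 1.0, 4.0, 16.0]

# Filter applied directly to linear values: everything above 1.0 collapses.
clipped = [clamping_filter(v) for v in scene_linear]

# Filter applied in ACEScc, then decoded: the highlights fit inside 0-1.
preserved = [acescc_to_lin(clamping_filter(lin_to_acescc(v)))
             for v in scene_linear]

print(clipped)
print([round(v, 4) for v in preserved])
```

In the first list the three brightest values become indistinguishable, while the ACEScc round trip keeps them separate.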

What input color space did you use? That was made using your FilmVision Pro filter? Does the filter preserve values above 1.0?

-

Well, what I did is:

In Sony Vegas Project properties: 32-bit floating point (full range), 1.000 (Linear).

View transform: ACES RRT (Rec.709 HDTV monitor preview).

Then: apply filters.

Render: Sony 10-bit YUV ACES RRT (Rec.709 HDTV monitor preview).

Render for YouTube: x264.

The original GH2 video:

Frameshots below for comparison:

original.jpg (1920 x 1080, 199K)

processed.jpg (1280 x 544, 55K)

-

That doesn't look right at all. Can you upload the out-of-camera video? What input color space did you use? What view transform, and what rendering color space? Did it look right in your preview window, or was it dark like in that YouTube video?

You used the FilmVision filter? Standard filters mostly don't work in ACES. You can use ACEScc instead.

Start with the basics. Don't be adding filters until you can get the basic workflow working.

-

Creating an IDT from scratch is incredibly complicated. I don't plan to make a tutorial for that, but I can work with people to get more cameras profiled.

Thankfully the generic Rec.709 input transform is usually good enough. Here are two test videos from the Panasonic GH2 illustrating how.

This test uses a custom GH2 IDT: (repeated from the original post)

This test uses a generic Rec.709 IDT:

The Rec.709 input transform is the inverse of the Rec.709 output transform. So when you use Rec.709 as the input color space and as the output color space and you don't make any adjustments to the video in ACES, the video's color values are passed through without modification. That's what you see in the second video when the exposure setting is +0. When exposure compensation is applied, it amplifies the differences between the camera's output curve and the Rec.709 color space curve, showing greater differences as more compensation is applied. These differences depend on which camera you're using. At least with the GH2, the two curves seem to be fairly close to each other, allowing for about one stop of adjustment in either direction before the differences become too large. One stop is about all you need for real-world shooting. It's fairly easy to get the exposure within one stop of ideal just by looking at the camera's display while you shoot. And you can easily get the camera's white balance setting within one stop of the ideal in each channel just by eyeballing the image.
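A small numeric sketch of that behavior, under loud assumptions: the plain Rec.709 transfer function stands in for the real input and output transforms (the actual ACES output path includes the RRT), and `camera_curve` is a made-up pure-gamma curve, not measured GH2 data. It only shows the mechanism: no change at +0, and a mismatch that grows with the amount of compensation.

```python
def rec709_encode(light):
    """Rec.709 transfer function: linear light -> video value."""
    if light < 0.018:
        return 4.5 * light
    return 1.099 * light ** 0.45 - 0.099

def rec709_decode(value):
    """Inverse of rec709_encode: video value -> linear light."""
    if value < 0.081:
        return value / 4.5
    return ((value + 0.099) / 1.099) ** (1.0 / 0.45)

def camera_curve(light):
    """Hypothetical camera response that is close to, but not, Rec.709."""
    return light ** (1.0 / 2.2)

def compensate(value, stops):
    """Decode with the generic Rec.709 input, apply gain, re-encode."""
    return rec709_encode(rec709_decode(value) * 2.0 ** stops)

scene_light = 0.18                    # a mid-grey patch
recorded = camera_curve(scene_light)  # what the camera wrote to the file

errors = {}
for stops in (0, 1, 2):
    # What the camera itself would have recorded with that much more exposure.
    ideal = camera_curve(scene_light * 2.0 ** stops)
    errors[stops] = abs(compensate(recorded, stops) - ideal)
    print(stops, round(errors[stops], 4))
```

With a custom IDT, `rec709_decode` would be replaced by the inverse of the camera's own curve, and the error would stay near zero at every setting.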

Of course it's desirable to have an IDT made specifically for your camera. A custom IDT removes the uniqueness of your camera's contrast curve, giving you the ACES RRT contrast curve consistently in all your output. A generic IDT doesn't do that. A generic IDT preserves the look of your camera's video, for good or for bad.

Here's the bottom line: it's worth it to use ACES even when you don't have a custom IDT, because exposure compensation and color balancing are far easier to do in ACES than to do directly on the Rec.709 color values. In ACES, you just drag some sliders. It's almost as easy as setting the exposure and color balance in the camera, and the results are almost as good. Working directly on Rec.709 video, you'll spend lots of time with color curves and high/mid/low color correction filters, and still your results won't be as good. When you get the midtones to look right, the highlights and the shadows will be off. When you finally get one scene looking the way you want, it won't be right for another scene. Rec.709 is optimized for the transmission of display-ready visual information. It just isn't the right color space for making adjustments that correspond to the physical world.

But don't take my word for it. Download a free trial of Sony Vegas Pro and try it for yourself.

-

Could you do a tutorial on how to make an IDT? That would be a nice complement to your existing tutorial.

-

@producer, please study the tutorial before you post any sample videos to this topic. You achieved an interesting artistic effect, but you were not using ACES the way it is meant to be used. Vegas didn't come with an IDT for your camera. You absolutely need that.

-

@balazer: Glad to know that someone is paying attention to ACES. I have been experimenting with ACES and the GH2 for a long time; here is one of my tests (1080p):

I will try out your modified ACES on GH2 and will show the results.

-

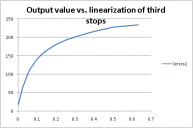

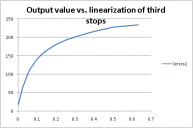

To make the GH2 IDT, I basically profiled the camera's luma response. I exposed the sensor to varying amounts of light, measured the luma response, and computed a linearization curve from that. Then I calculated an sRGB to ACES gamut conversion matrix. The same can be done for other cameras.
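As a rough idea of what the linearization step can look like, here is a minimal sketch that inverts a set of (light, luma) measurements by piecewise-linear interpolation. It is not the procedure actually used for the GH2 IDT. The sample points are synthetic, generated from a Rec.709-like curve at third-stop intervals, and `build_linearizer` and `synthetic_response` are hypothetical names. The gamut matrix step is left out.

```python
from bisect import bisect_right

def synthetic_response(light):
    """Stand-in for a measured camera response (Rec.709-like curve)."""
    if light < 0.018:
        return 4.5 * light
    return 1.099 * light ** 0.45 - 0.099

def build_linearizer(measurements):
    """Return a function mapping luma (0-1) back to relative light.

    measurements: list of (relative_light, luma) pairs.
    """
    points = sorted((luma, light) for light, luma in measurements)
    lumas = [p[0] for p in points]
    lights = [p[1] for p in points]

    def linearize(luma):
        # Outside the measured range, hold the end values.
        if luma <= lumas[0]:
            return lights[0]
        if luma >= lumas[-1]:
            return lights[-1]
        # Find the surrounding pair of samples and interpolate between them.
        i = bisect_right(lumas, luma)
        t = (luma - lumas[i - 1]) / (lumas[i] - lumas[i - 1])
        return lights[i - 1] + t * (lights[i] - lights[i - 1])

    return linearize

# Synthetic "measurements": six stops below full scale, in third-stop steps.
samples = [(2.0 ** (k / 3.0), synthetic_response(2.0 ** (k / 3.0)))
           for k in range(-18, 1)]

linearize = build_linearizer(samples)
print(round(linearize(synthetic_response(0.18)), 4))
```

A real profile would use measured values in place of `samples`, and the linearized RGB would then go through the gamut conversion matrix.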

Here are plots of my measurements for the Panasonic GH2.

Panasonic_GH2_luma_response_log_plot.png (528 x 336, 6K)

Panasonic_GH2_luma_response_plot.png (517 x 343, 6K)

-

Excellent tutorial! Thanks so much for posting this. One question - how did you make the IDT for the GH2? I wonder what would be involved in doing this for other cameras.
