
-

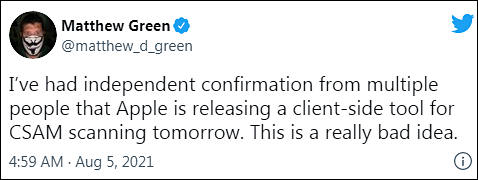

As reported by 9to5mac.com, Apple plans to soon announce a system for scanning photos stored in the smartphone's memory. The system is designed to detect images of child abuse (or so they say!). The solution is based on a fully closed, black-box, AI-based hashing algorithm (the "neuralMatch" system). At first, neuralMatch will be trained using a database from the National Center for Missing and Exploited Children and will be limited to iPhones in the United States. But both parts will change fast: the system is planned to cover many categories of images and to be used worldwide as early as 2022.

Images will be scanned locally, without notifying or informing the owner of the smartphone, and without transferring the full original data to the cloud. During scanning, the system compares each photo on the phone with data on the illegal images known to Apple. According to the corporation, if there are matches with samples of prohibited images, the system will presumably contact administrators (low-paid residents of India or Bangladesh) who will carry out further work and decide how to act in your case. Possible actions include an instant, complete phone lock to prevent evidence from being deleted.

The solution is based on hashing algorithms that are already used in iCloud storage when photos are uploaded. In general terms, the machine learning system that lets Apple Photos recognize objects works in a similar way.

https://www.ft.com/content/14440f81-d405-452f-97e2-a81458f5411f

It is clear that Apple wants to downplay the coming feature by constantly referring to hashing and the like. In reality, such an algorithm will keep evolving and will report and send your private photos for an ever-widening range of reasons.

For reference: all blocking and internet censorship in Mordor (and in all other countries) also started with child abuse and pedophiles, since it is a universal cause that is very hard to argue against.

Also interesting

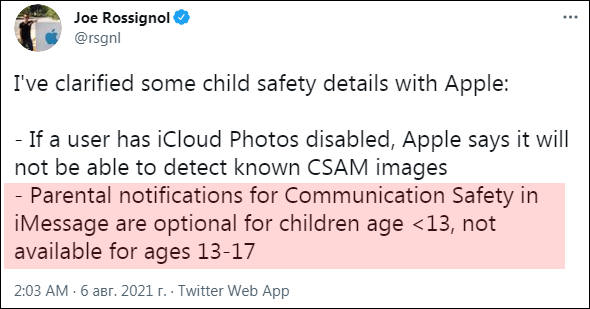

The update will be rolling out at a later date, along with several other child safety features, including new parental controls that can detect explicit photos in children's Messages.

It will be a huge blow for 12-18 year olds who flirt and are already having sex or petting, and so share such images with their partners. Now parents will be getting a copy of every such image. Creepy.


This feature will come to Mac OS X later as well. I heard about it in talks with developers, and the leaked info covers only around 15% of everything it will do.

How about a special AI core residing inside the main LSI, between your keyboard and the OS, that analyzes everything you type for suspicious and criminal behavior?

-

The other feature scans all iMessage images sent or received by child accounts—that is, accounts designated as owned by a minor—for sexually explicit material, and if the child is young enough, notifies the parent when these images are sent or received. This feature can be turned on or off by parents.

A new tool for abusive conservative parents, as Apple will now work as a spy, reporting any image of a naked body directly to parents!


New info is starting to come from developers, and it says that Apple software will also start collecting your internet search requests and possibly data entered in forms.

If their AI algorithm decides that something is harmful, it will instantly report your ENTIRE internet search history to their 5-cent Indian admins and later to the authorities. Nice thing. Apple.

-

The executive maintained that Apple was striking the right balance between child safety and privacy, and addressed some of the concerns that surfaced since the company announced its new measures in early August. He stressed that the scans of iCloud-destined photos would only flag existing images in a CSAM database, not any picture in your library. The system only sends an alert when you reach a threshold of 30 images, so false positives aren't likely.

The system also has "multiple levels of auditability," Federighi said. On-device scanning will reportedly make it easier for researchers to check if Apple ever misused the technology. Federighi also rejected the notion that the technique might be used to scan for other material, such as politics, noting that the database of images comes from multiple child safety groups, not just the agency that will receive any red-flag reports (the National Center for Missing and Exploited Children).
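To make the "threshold of 30 images" claim concrete, here is a minimal Python sketch of generic perceptual-hash matching with an account-level threshold. It is not Apple's NeuralHash, whose internals are not public: the 64-bit integer hashes, the Hamming-distance match rule, the `max_distance=4` radius and the helper names are all hypothetical, chosen only for illustration. Only the threshold of 30 comes from the interview quoted above.

```python
# Hypothetical sketch: generic perceptual-hash matching with a per-account
# threshold. NOT Apple's NeuralHash; hashes are plain 64-bit integers and
# the max_distance radius is an assumption for illustration.

def hamming(a: int, b: int) -> int:
    """Number of differing bits between two hashes."""
    return bin(a ^ b).count("1")

def count_matches(library_hashes, known_hashes, max_distance=4):
    """Count photos whose hash lies within max_distance bits of any known hash."""
    matches = 0
    for h in library_hashes:
        if any(hamming(h, k) <= max_distance for k in known_hashes):
            matches += 1
    return matches

def should_flag(library_hashes, known_hashes, threshold=30, max_distance=4):
    """Flag the account only once the number of matching photos reaches the threshold."""
    return count_matches(library_hashes, known_hashes, max_distance) >= threshold

if __name__ == "__main__":
    known = {0x0F0F0F0F0F0F0F0F}
    near_copy = 0x0F0F0F0F0F0F0F0E  # differs from the known hash by one bit
    print(should_flag([near_copy] * 29, known))  # False
    print(should_flag([near_copy] * 30, known))  # True
```

With 29 matching photos the account is not flagged; the 30th match flips it. Note that the threshold only controls how many matches are needed: what the list of known hashes contains is decided entirely by whoever supplies it.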

Apple came out with a new round of disinformation in their WSJ interview.

There must be some reason why they need to install this system now, and why they used a pretext that always works.

All of today's YouTube and Facebook bans and blocking also started with... fighting pedophiles and child abuse.

-

We are bearing witness to the construction of an all-seeing-i—an Eye of Improvidence—under whose aegis every iPhone will search itself for whatever Apple wants, or for whatever Apple is directed to want. They are inventing a world in which every product you purchase owes its highest loyalty to someone other than its owner.

To put it bluntly, this is not an innovation but a tragedy, a disaster-in-the-making.

-

Apple is delaying its child protection features announced last month, including a controversial feature that would scan users’ photos for child sexual abuse material (CSAM), following intense criticism that the changes could diminish user privacy.

“Last month we announced plans for features intended to help protect children from predators who use communication tools to recruit and exploit them, and limit the spread of Child Sexual Abuse Material. Based on feedback from customers, advocacy groups, researchers and others, we have decided to take additional time over the coming months to collect input and make improvements before releasing these critically important child safety features.”

Most probably the feature will be rolled out around Christmas to avoid any negative media coverage.
