If you are wondering "how much faster is my CPU-bound function in native code instead of Nitro JS", the answer is about 5x faster. This result is roughly consistent with the Benchmarks Game's results with x86/GCC/V8.

But a factor of 5 is okay on x86, because x86 is ten times faster than ARM just to start with. You have a lot of headroom. The solution is obviously just to make ARM 10x faster, so it is competitive with x86, and then we can get desktop JS performance without doing any work!
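To make the headroom argument concrete, here is a minimal sketch of the arithmetic. It uses only the two rough figures quoted above (about 5x for JS versus native, about 10x for x86 versus ARM) and assumes, purely for illustration, that the two factors multiply independently. The function and constant names are hypothetical.

```python
# Rough figures quoted in the text; they are estimates, not measurements.
JS_VS_NATIVE_SLOWDOWN = 5.0  # JS is about 5x slower than native code
X86_VS_ARM_SPEEDUP = 10.0    # x86 is about 10x faster than ARM


def relative_time(native_x86_seconds: float, on_arm: bool, in_js: bool) -> float:
    """Scale a native x86 run time by the penalties that apply.

    Assumption: the two penalties are independent and simply multiply.
    """
    t = native_x86_seconds
    if on_arm:
        t *= X86_VS_ARM_SPEEDUP
    if in_js:
        t *= JS_VS_NATIVE_SLOWDOWN
    return t


baseline = 1.0  # one unit of time for native code on x86
js_on_x86 = relative_time(baseline, on_arm=False, in_js=True)
js_on_arm = relative_time(baseline, on_arm=True, in_js=True)

print(f"JS on x86: {js_on_x86:.0f}x the native x86 time")
print(f"JS on ARM: {js_on_arm:.0f}x the native x86 time")
```

Under these assumptions, JS on ARM lands around 50x the native x86 time, which is why a 5x penalty that is tolerable on the desktop hurts so much more on a phone.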

Whether or not this works out kind of hinges on your faith in Moore's Law in the face of trying to power a chip on a 3-ounce battery. I am not a hardware engineer, but I once worked for a major semiconductor company, and the people there tell me that these days performance is mostly a function of your process. The iPhone 5's impressive performance is due in no small part to a process shrink from 45nm to 32nm, a reduction of about a third. But to do it again, Apple would have to shrink to a 22nm process.
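The "about a third" figure, and why 22nm is the next comparable step, can be checked with a short sketch. It only compares the nominal node sizes named above as linear feature sizes; the helper name is hypothetical, and nominal node names are not a precise measure of real transistor dimensions.

```python
def linear_shrink(old_nm: float, new_nm: float) -> float:
    """Fractional reduction in nominal feature size when moving between nodes."""
    return 1.0 - new_nm / old_nm


# The shrink credited for the iPhone 5's performance.
past = linear_shrink(45, 32)
# The shrink that would be needed to repeat it.
needed = linear_shrink(32, 22)

print(f"45nm -> 32nm: {past:.1%} reduction")
print(f"32nm -> 22nm: {needed:.1%} reduction")
```

Both steps come out at roughly 30%, so 22nm is the node that would repeat the previous gain in relative terms.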

Just for reference, Intel's Bay Trail, the x86 Atom version of 22nm, doesn't currently exist. And Intel had to invent a whole new kind of transistor since the ordinary kind doesn't work at 22nm scale. Think they'll license it to ARM? Think again. There are only a handful of 22nm fabs that people are even seriously thinking about building in the world, and most of them are controlled by Intel. In fact, ARM seems on track to do a 28nm process shrink in the next year or so (watch the A7), and meanwhile Intel is on track to do 22nm and maybe even 20nm just a little further out. On purely a hardware level, it seems much more likely to me that an x86 chip with x86-class performance will be put in a smartphone long before an ARM chip with x86-class performance can be shrunk.

In fact, mobile CPUs are currently hitting the same type of limit that desktop CPUs hit when they reached ~3GHz: increasing clock speed further is not feasible without increasing power a lot. The same will be true for the next process nodes, although they should be able to increase IPC a bit (10-20% maybe). When they faced that limit, desktop CPUs started to become dual and quad core, but mobile SoCs are already dual and quad core, so there is no easy boost. So Moore's Law might be right after all, but it is right in a way that would require the entire mobile ecosystem to transition to x86.

Here is where a lot of competent software engineers stumble. The thought process goes like this: JavaScript has gotten faster! It will continue to get faster!

The first part is true. JavaScript has gotten a lot faster. But we are now at Peak JavaScript. It doesn't get much faster from here. Why? Well, the first part is that most of the improvements to JavaScript over its history have actually been of the hardware sort.

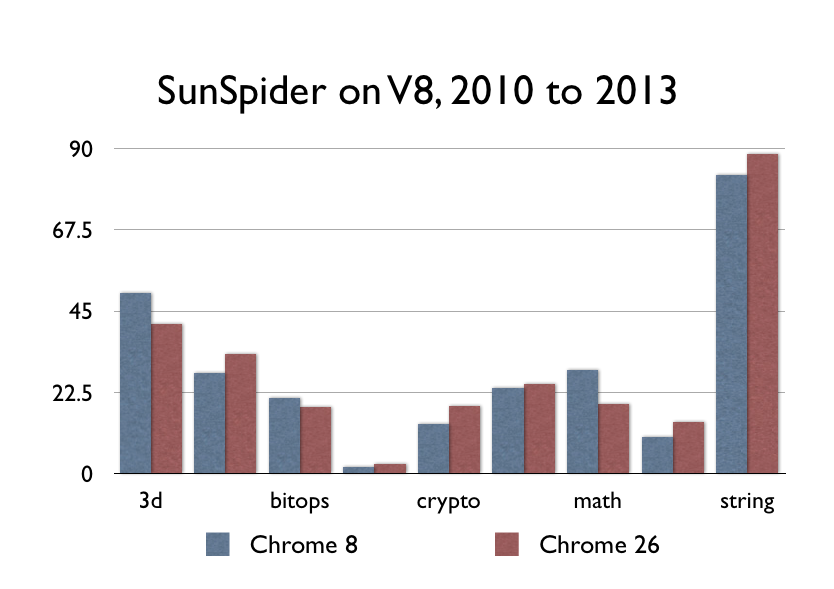

What about JITs though? V8, Nitro/SFX, TraceMonkey/IonMonkey, Chakra, and the rest? Well, they were kind of a big deal when they came out, although not as big of a deal as you might think. V8 was released in September 2008. The performance between Chrome 8 and Chrome 26 is a flatline, because nothing terribly important has happened since 2008. The other browser vendors have caught up, some slower, some faster, but nobody has really improved the speed of actual CPU code since.

Read more at http://sealedabstract.com/rants/why-mobile-web-apps-are-slow/
